Mise-Unseen

Using Eye Tracking to Hide Virtual Reality Scene Changes in Plain Sight

ACM UIST 2019

Abstract

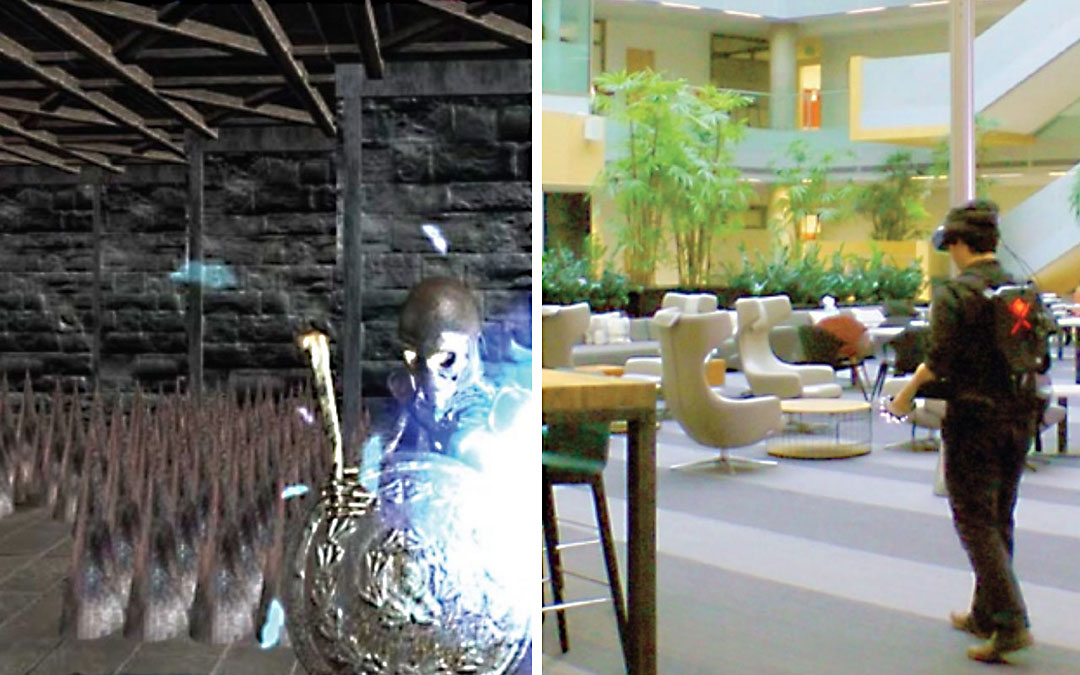

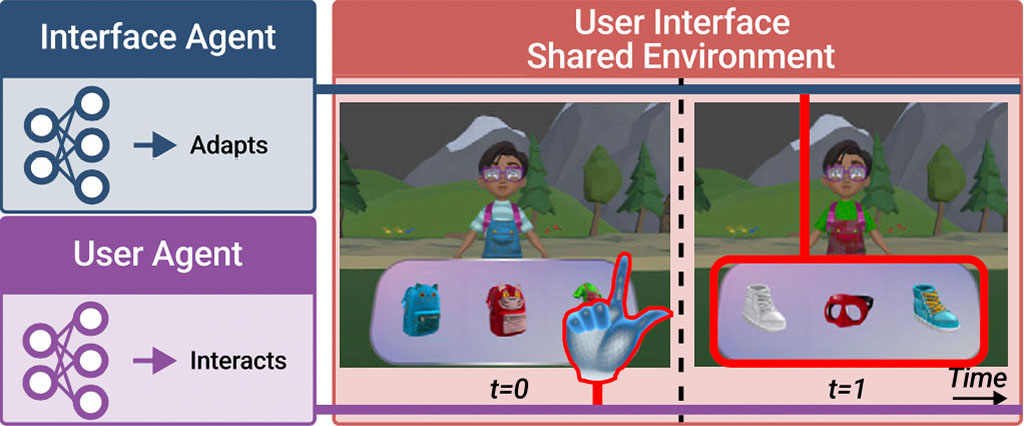

Creating or arranging objects at runtime is needed in many virtual reality applications, but such changes are noticed when they occur inside the user’s field of view. We present Mise-Unseen, a software system that applies such scene changes covertly inside the user’s field of view. Mise-Unseen leverages gaze tracking to create models of user attention, intention, and spatial memory to determine if and when to inject a change. We present seven applications of Mise-Unseen to unnoticeably modify the scene within view (i) to hide that task difficulty is adapted to the user, (ii) to adapt the experience to the user’s preferences, (iii) to time the use of low fidelity effects, (iv) to detect user choice for passive haptics even when lacking physical props, (v) to sustain physical locomotion despite a lack of physical space, (vi) to reduce motion sickness during virtual locomotion, and (vii) to verify user understanding during story progression. We evaluated Mise-Unseen and our applications in a user study with 15 participants and find that while gaze data indeed supports obfuscating changes inside the field of view, a change is rendered unnoticeably by using gaze in combination with common masking techniques.
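As a rough illustration of the idea of using gaze to decide when a change may be injected, the following minimal sketch gates a scene change on the angular distance between the current gaze direction and the object to be changed. It is not the authors' implementation: the function names, the 20-degree threshold, and the purely geometric test are all assumptions made for illustration, and the attention, intention, and spatial memory models described in the abstract are not modeled here.

```python
import math

def angular_distance_deg(gaze_dir, eye_pos, target_pos):
    """Angle in degrees between the gaze ray and the direction to the target.

    All arguments are 3D vectors given as sequences of floats.
    (Hypothetical helper, not part of Mise-Unseen.)
    """
    to_target = [t - e for t, e in zip(target_pos, eye_pos)]
    norm_gaze = math.sqrt(sum(c * c for c in gaze_dir))
    norm_target = math.sqrt(sum(c * c for c in to_target))
    if norm_gaze == 0.0 or norm_target == 0.0:
        raise ValueError("gaze direction and target offset must be non-zero")
    cos_angle = sum(g * t for g, t in zip(gaze_dir, to_target)) / (norm_gaze * norm_target)
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))

def can_inject_change(gaze_dir, eye_pos, target_pos, min_angle_deg=20.0):
    """Return True if the target lies at least min_angle_deg away from the gaze ray.

    The 20-degree default is an assumed placeholder, not a value from the paper.
    """
    return angular_distance_deg(gaze_dir, eye_pos, target_pos) >= min_angle_deg
```

In this sketch, a caller would poll `can_inject_change` each frame with the latest eye-tracker sample and apply the pending scene change only once it returns `True`. A real system would likely combine such a check with further conditions, such as the masking techniques the abstract mentions.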

Reference

Sebastian Marwecki, Andrew D. Wilson, Eyal Ofek, Mar Gonzalez-Franco, and Christian Holz. Mise-Unseen: Using Eye Tracking to Hide Virtual Reality Scene Changes in Plain Sight. In Proceedings of ACM UIST 2019.