SIPLAB: Sensing, Interaction & Perception Lab

The SIPLAB is a research lab at ETH Zürich working on egocentric perception, computational interaction, and behavioral AI. We advance mixed reality, robotics, and human-computer interaction toward adaptive intelligence and human-aware systems. We are part of the Department of Computer Science and the AI Center.

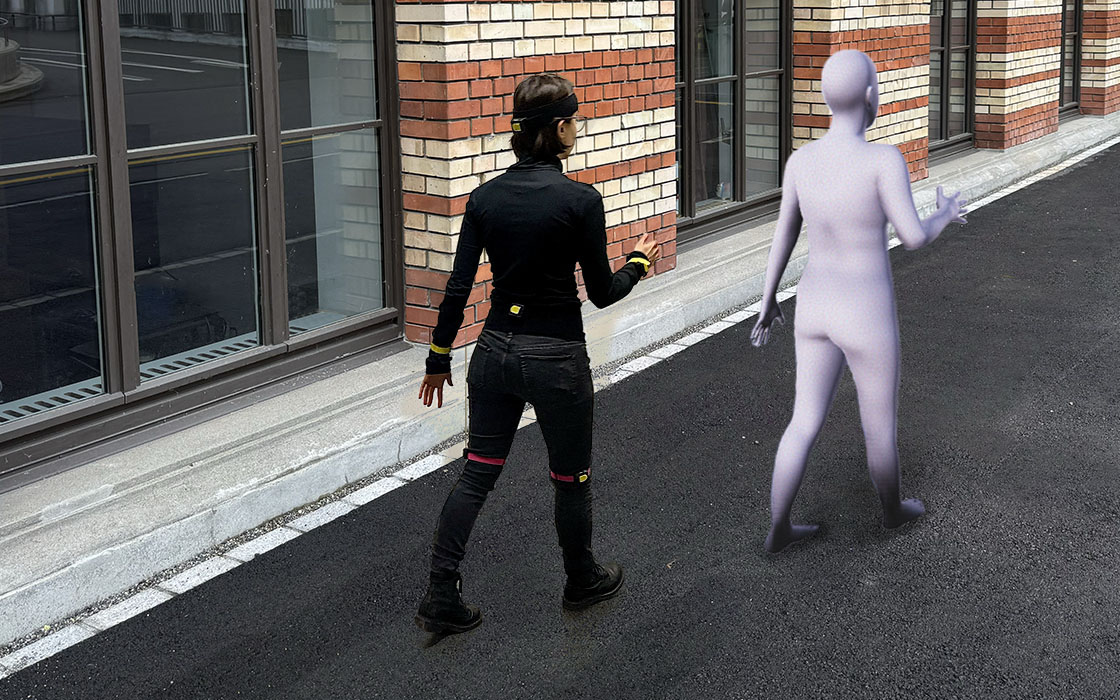

egocentric perception

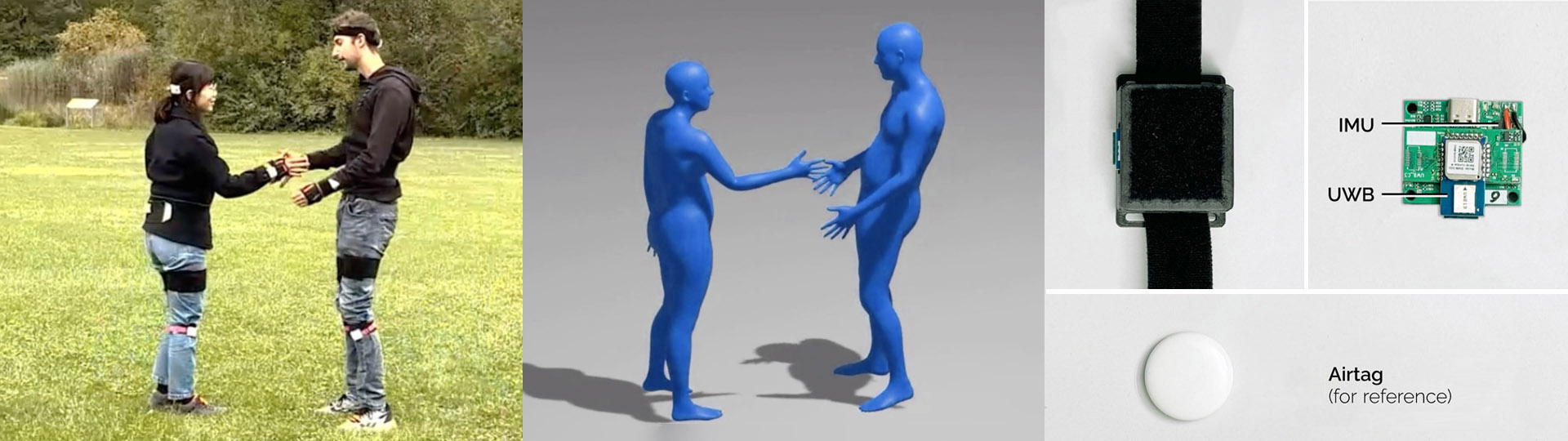

scalable methods for action & motion tracking

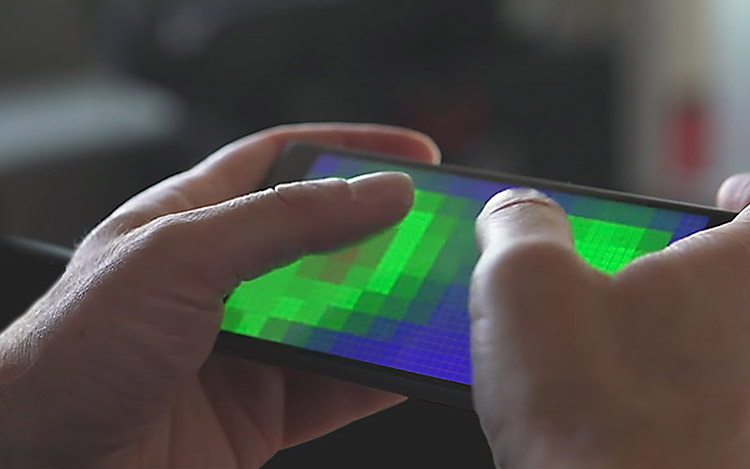

computational interaction

decoding input intent for adaptive systems

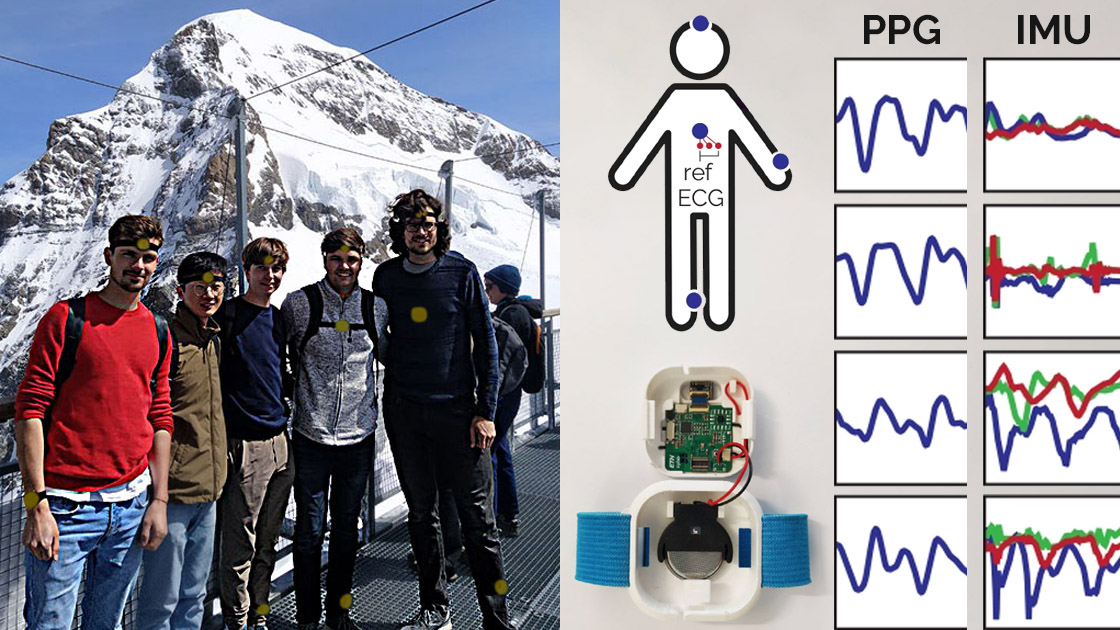

behavioral AI

human affect, emotions, and traits via digital biomarkers

Projects to appear

Ultra Diffusion Poser

Diffusion Motion Tracking from Sparse Inertial Sensors

Frequency-Weighted Neural Kalman Filters

AutoOptimization

Automating UI Optimization through Multi-Agentic Reasoning

Preference-Guided Prompt Optimization

Prompt Optimization for Image Generation

Point & Grasp

Flexible Selection of Out-of-Reach Objects Through Probabilistic Cue Integration

Affiliations and collaborations

- ETH AI Center

- ELLIS Unit Zürich

- Max Planck ETH Center for Learning Systems (CLS)

- Department of Computer Science (D-INF)

- Department of Information Technology & Electrical Engineering (D-ITET)

- Robotics, Systems and Control Program at ETH Zürich

- ETH Augmented Reality Research Hub (ETHAR)

- Human-Computer Interaction @ ETH Zürich (HCI@ETH)

- Competence Centre for Rehabilitation Engineering and Science (ETH RESC)

- Zurich Information Security & Privacy Center (ZISC)