MANIKIN

Biomechanically Accurate Neural Inverse Kinematics for Human Motion Estimation

ECCV 2024

Abstract

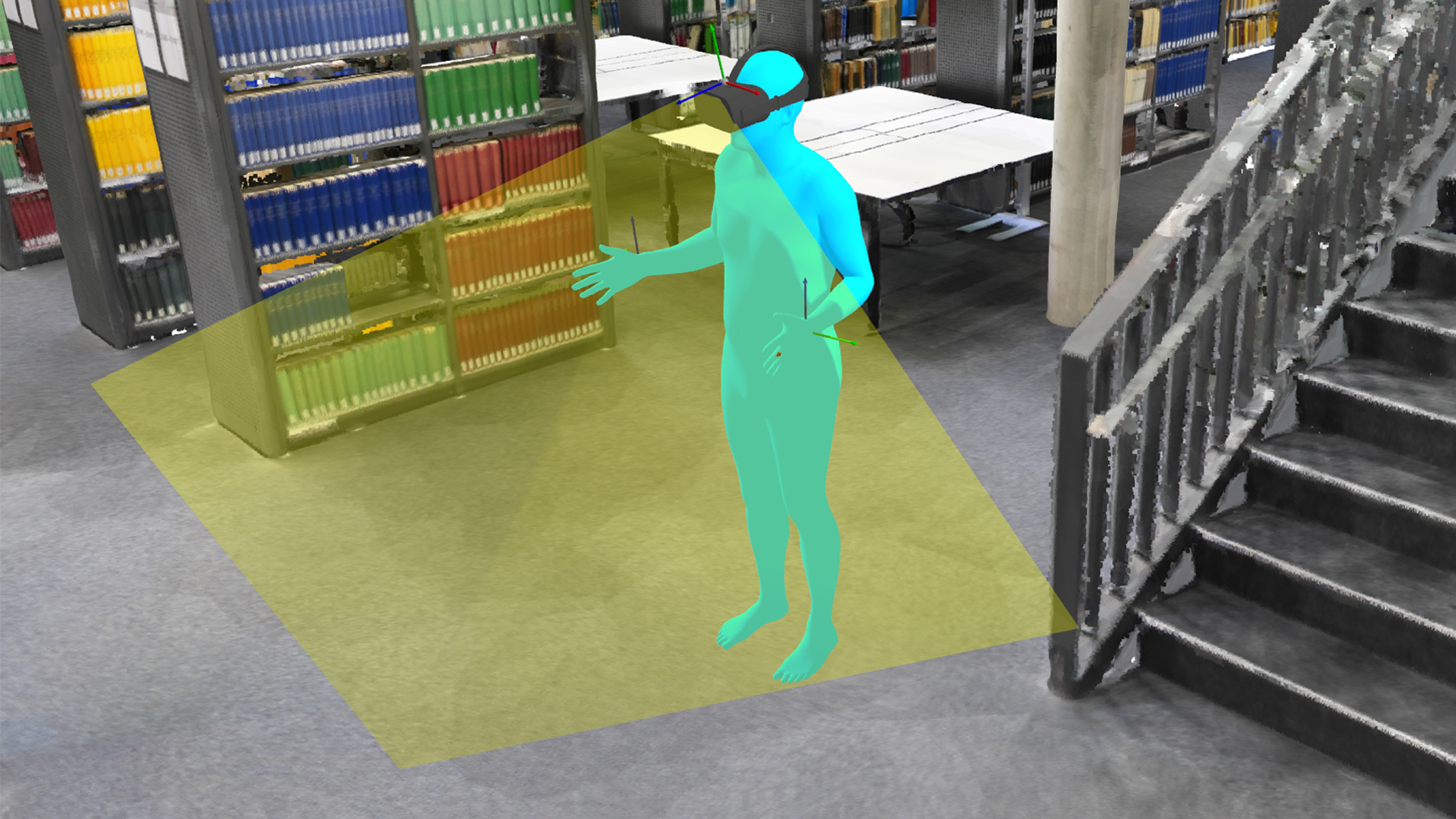

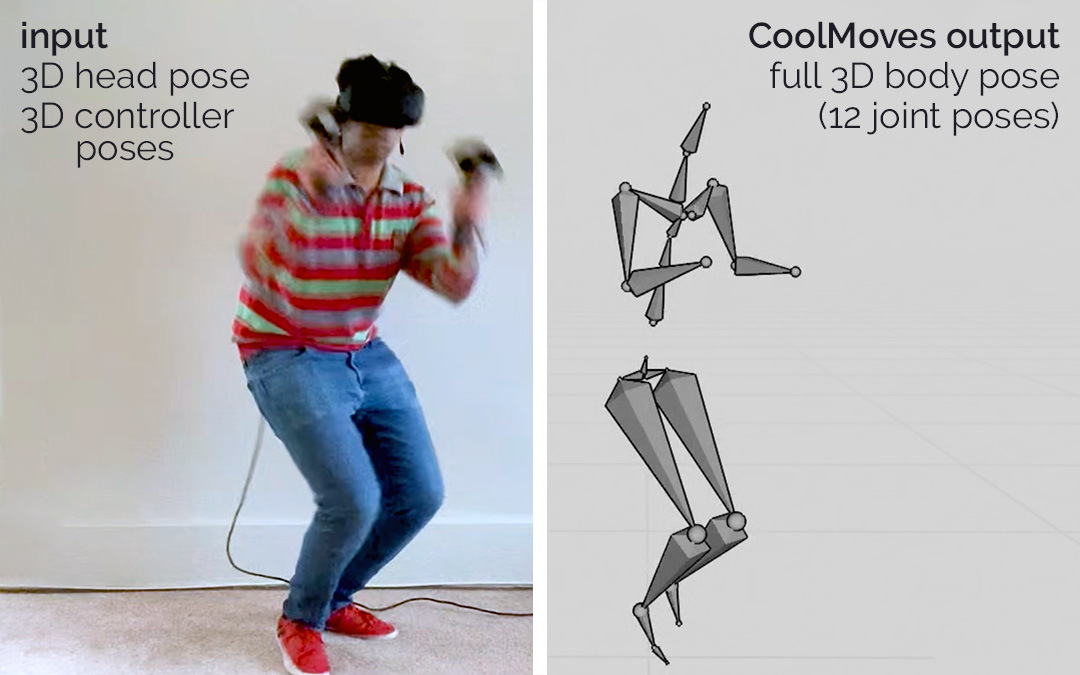

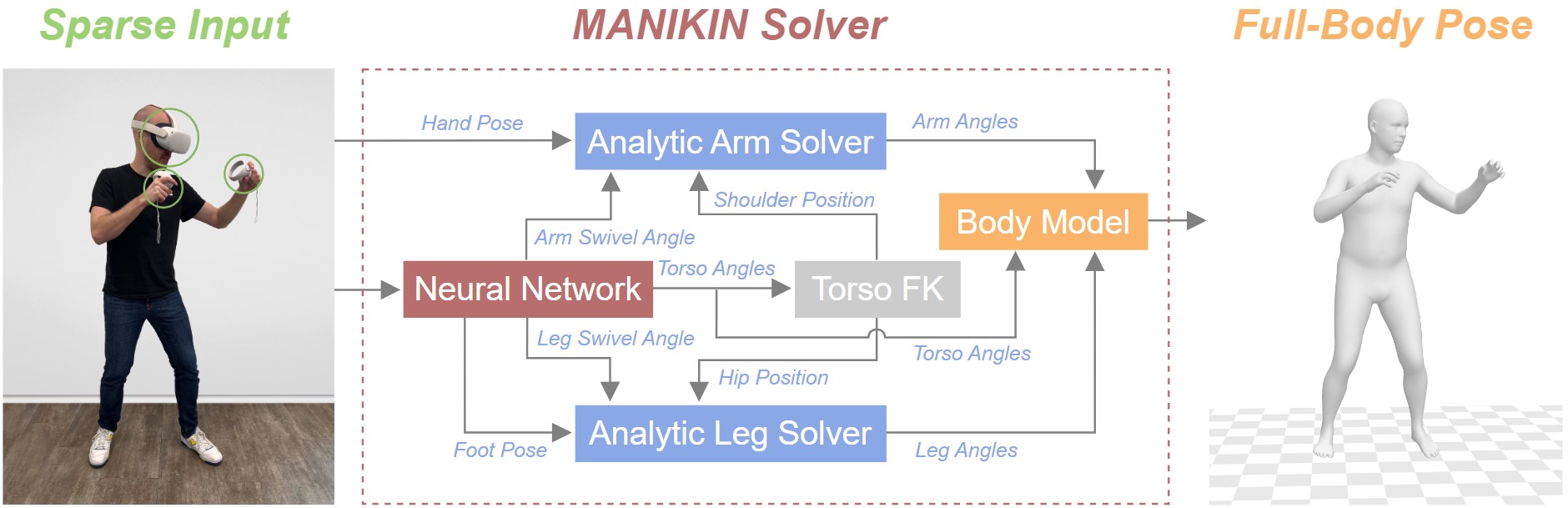

Mixed Reality systems aim to estimate a user’s full-body joint configurations from just the pose of the end effectors, primarily head and hand poses. Existing methods often involve solving inverse kinematics (IK) to obtain the full skeleton from just these sparse observations, usually directly optimizing the joint angle parameters of a human skeleton. Since this accumulates error through the kinematic tree, predicted end effector poses fail to align with the provided input pose. This leads to discrepancies between the predicted and the actual hand positions or feet that penetrate the ground. In this paper, we first refine the commonly used SMPL parametric model by embedding anatomical constraints that reduce the degrees of freedom for specific parameters to more closely mirror human biomechanics. This ensures that our model produces physically plausible pose predictions. We then propose a biomechanically accurate neural inverse kinematics solver (MANIKIN) for full-body motion tracking. MANIKIN is based on swivel angle prediction and perfectly matches input poses while avoiding ground penetration. We evaluate MANIKIN in extensive experiments on motion capture datasets and demonstrate that our method surpasses the state of the art in quantitative and qualitative results at fast inference speed.

Reference

Jiaxi Jiang, Paul Streli, Xuejing Luo, Christoph Gebhardt, and Christian Holz. MANIKIN: Biomechanically Accurate Neural Inverse Kinematics for Human Motion Estimation. In European Conference on Computer Vision 2024 (ECCV).

BibTeX citation

@inproceedings{jiang2024manikin,
  title={Manikin: Biomechanically accurate neural inverse kinematics for human motion estimation},
  author={Jiang, Jiaxi and Streli, Paul and Luo, Xuejing and Gebhardt, Christoph and Holz, Christian},
  booktitle={European Conference on Computer Vision},
  year={2024},
  organization={Springer}
}

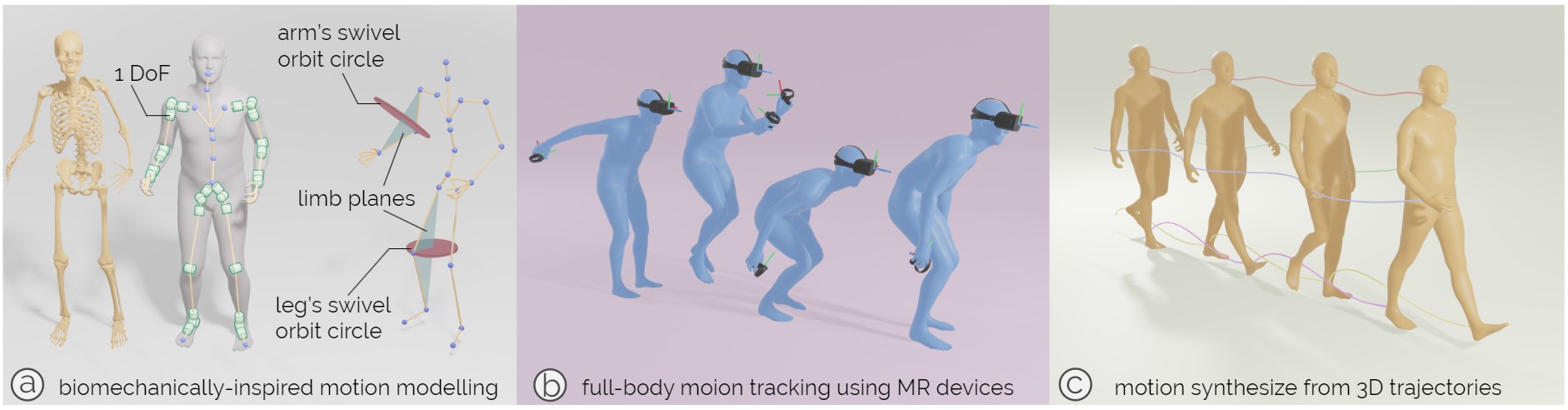

Human Motion Modeling

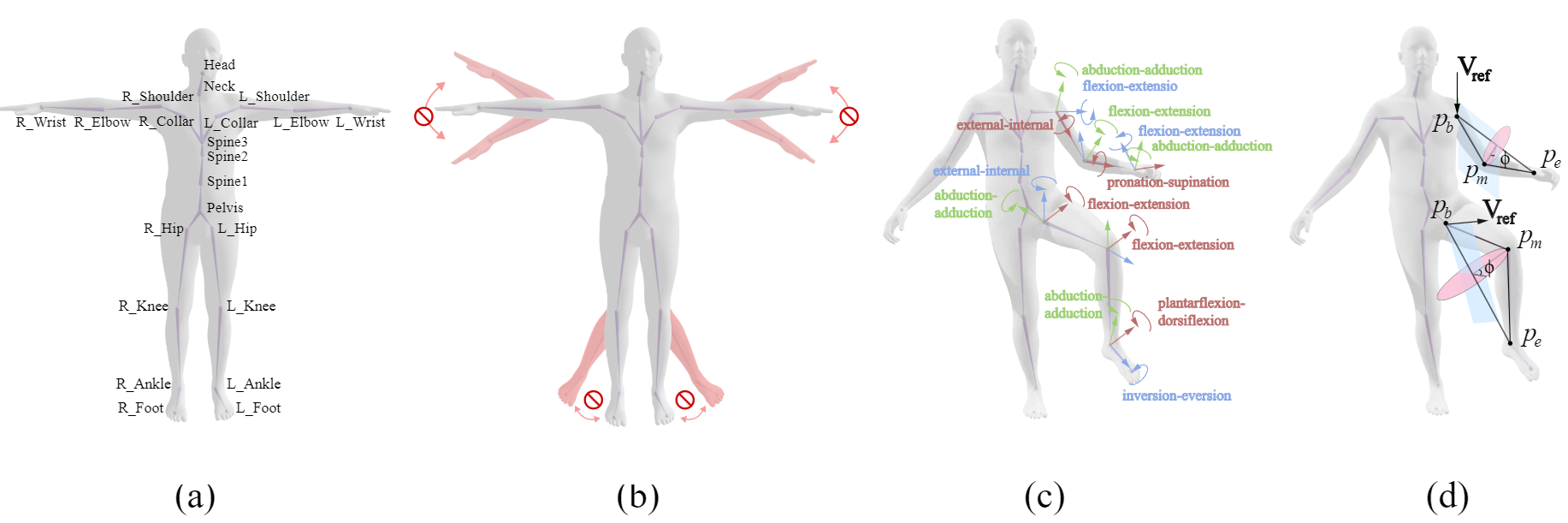

Figure 2: Comparison between SMPL and biomechanically constrained limb motion: (a) the skeleton of the SMPL model, (b) unrealistic SMPL joint configurations in the AMASS dataset, (c) biomechanically plausible joint configurations, (d) swivel angle parametrization of the arm and leg.

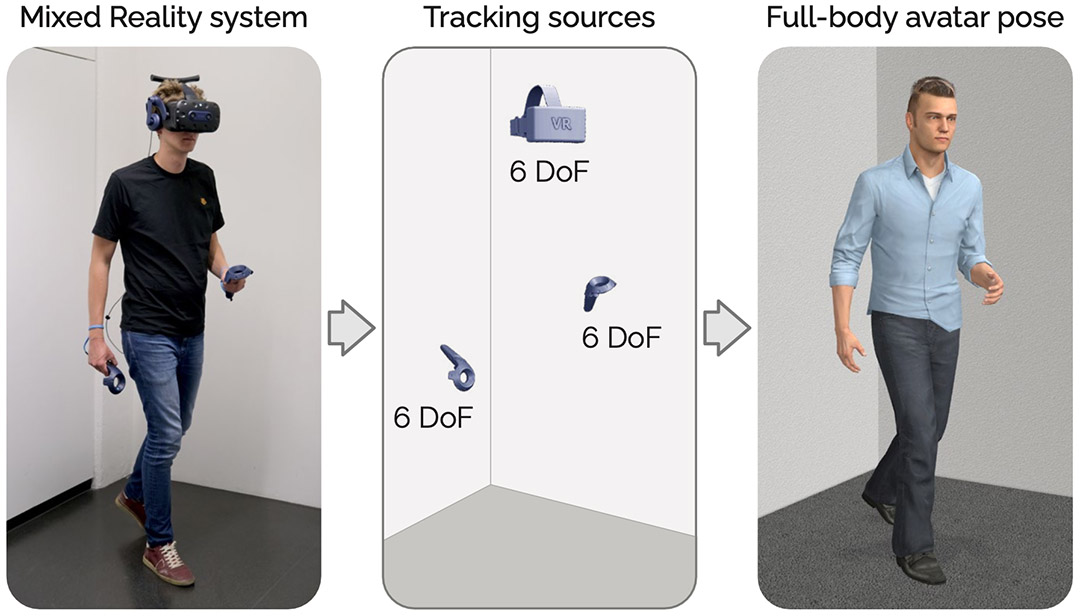

Neural Inverse Kinematics

Figure 3: Overview of MANIKIN for full-body pose estimation from sparse tracking signals. Given the 6-DoF poses of the head and both hands, the neural network predicts the foot poses, the swivel angles for the arms and legs, and the joint angles of the torso. The shoulder and hip positions are then obtained through forward kinematics on the torso. Given the pose of each end effector (hand or foot), the predicted swivel angles, and the base joint (shoulder or hip) positions, we use analytic geometry to obtain all limb joint configurations. Combined with the predicted torso joints, this yields a full-body pose.

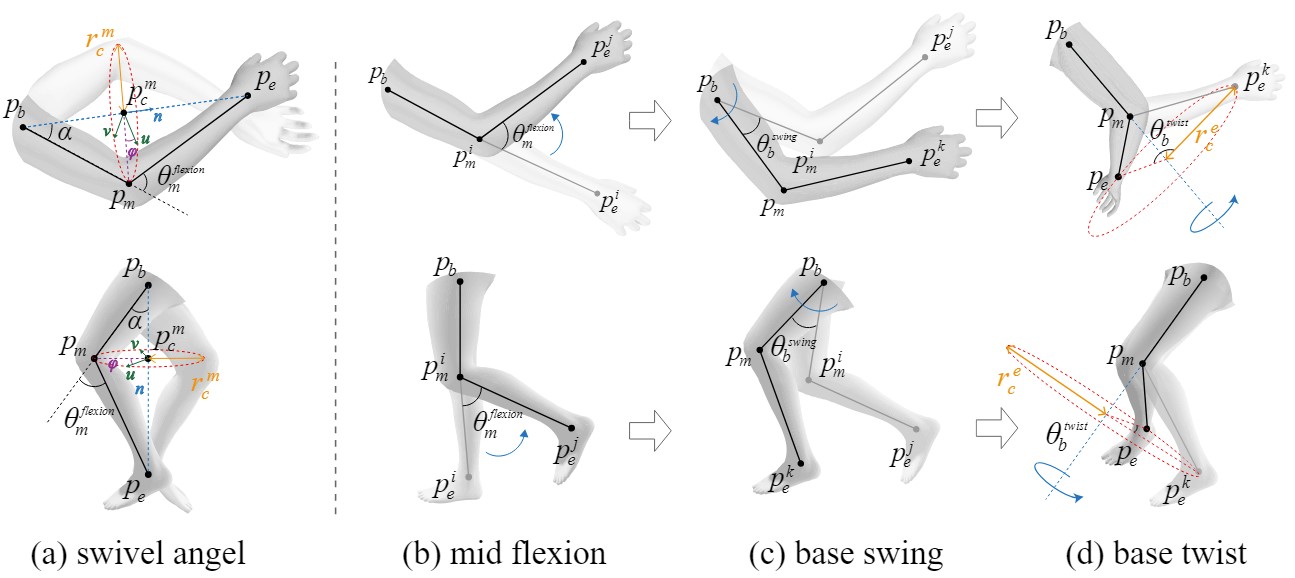

Figure 4: Illustration of the triangular geometry of the human limbs. (a) shows the relationship between the swivel angle and the mid joint position. (b) to (d) show the procedure for rotating the limb from the T-pose to the desired position.
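
The relationship in (a) can be illustrated with a short numerical sketch. This is not the authors' implementation: it is the standard swivel-angle construction, in which the base joint, mid joint, and end effector form a triangle with fixed limb lengths, so the mid joint lies on a circle around the base-to-end axis and the swivel angle selects a point on that circle. The function name `mid_joint_from_swivel`, the reference direction `ref` that defines the zero angle, and the sign convention of the angle are all assumptions made for illustration; the paper may define them differently.

```python
import numpy as np

def mid_joint_from_swivel(base, end, l1, l2, swivel, ref=(0.0, -1.0, 0.0)):
    """Place the mid joint (elbow or knee) from a swivel angle.

    base, end : 3D positions of the base joint (shoulder/hip) and the
                end effector (wrist/ankle).
    l1, l2    : lengths of the upper and lower limb segments.
    swivel    : angle in radians around the base-to-end axis.
    ref       : hypothetical reference direction fixing swivel = 0
                (an assumption of this sketch, not taken from the paper).
    """
    base = np.asarray(base, dtype=float)
    end = np.asarray(end, dtype=float)
    ref = np.asarray(ref, dtype=float)

    axis = end - base
    d = np.linalg.norm(axis)
    # The three points must be able to form a triangle.
    if d <= 0.0 or d > l1 + l2 or d < abs(l1 - l2):
        raise ValueError("end effector is not reachable with these limb lengths")
    n = axis / d

    # Law of cosines: distance from the base to the circle center along the axis.
    a = (l1**2 - l2**2 + d**2) / (2.0 * d)
    # Radius of the circle of feasible mid-joint positions.
    r = np.sqrt(max(l1**2 - a**2, 0.0))
    center = base + a * n

    # Orthonormal basis (u, v) of the plane perpendicular to the axis.
    u = ref - np.dot(ref, n) * n
    u_norm = np.linalg.norm(u)
    if u_norm < 1e-8:
        raise ValueError("reference direction is parallel to the limb axis")
    u = u / u_norm
    v = np.cross(n, u)

    return center + r * (np.cos(swivel) * u + np.sin(swivel) * v)

# Example: shoulder at the origin, wrist 0.4 m away, two 0.3 m segments.
elbow = mid_joint_from_swivel([0, 0, 0], [0.4, 0, 0], 0.3, 0.3, np.pi / 4)
```

Whatever angle is chosen, the segment lengths stay fixed and the end effector stays where it was given, which is why a swivel-angle parametrization can reproduce the input hand pose by construction.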

Results

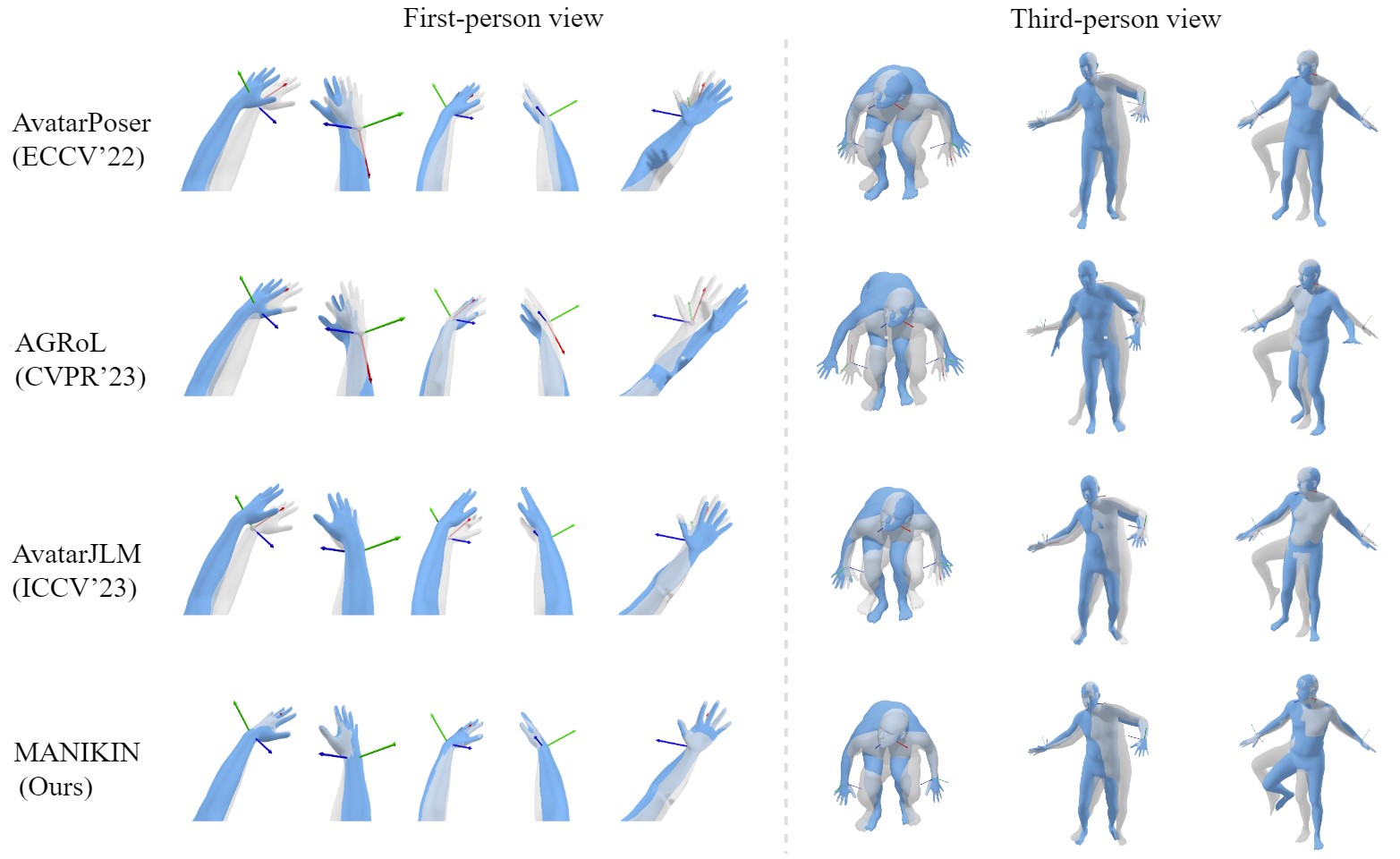

Figure 5: Visual comparisons of different methods from first- and third-person views. The ground truth pose is shown in transparent gray. Our method matches the hand observations exactly and produces better full-body predictions than the other methods.