Ultra Inertial Poser

Scalable Motion Capture and Tracking from Sparse Inertial Sensors and Ultra-Wideband Ranging

ACM SIGGRAPH 2024

Abstract

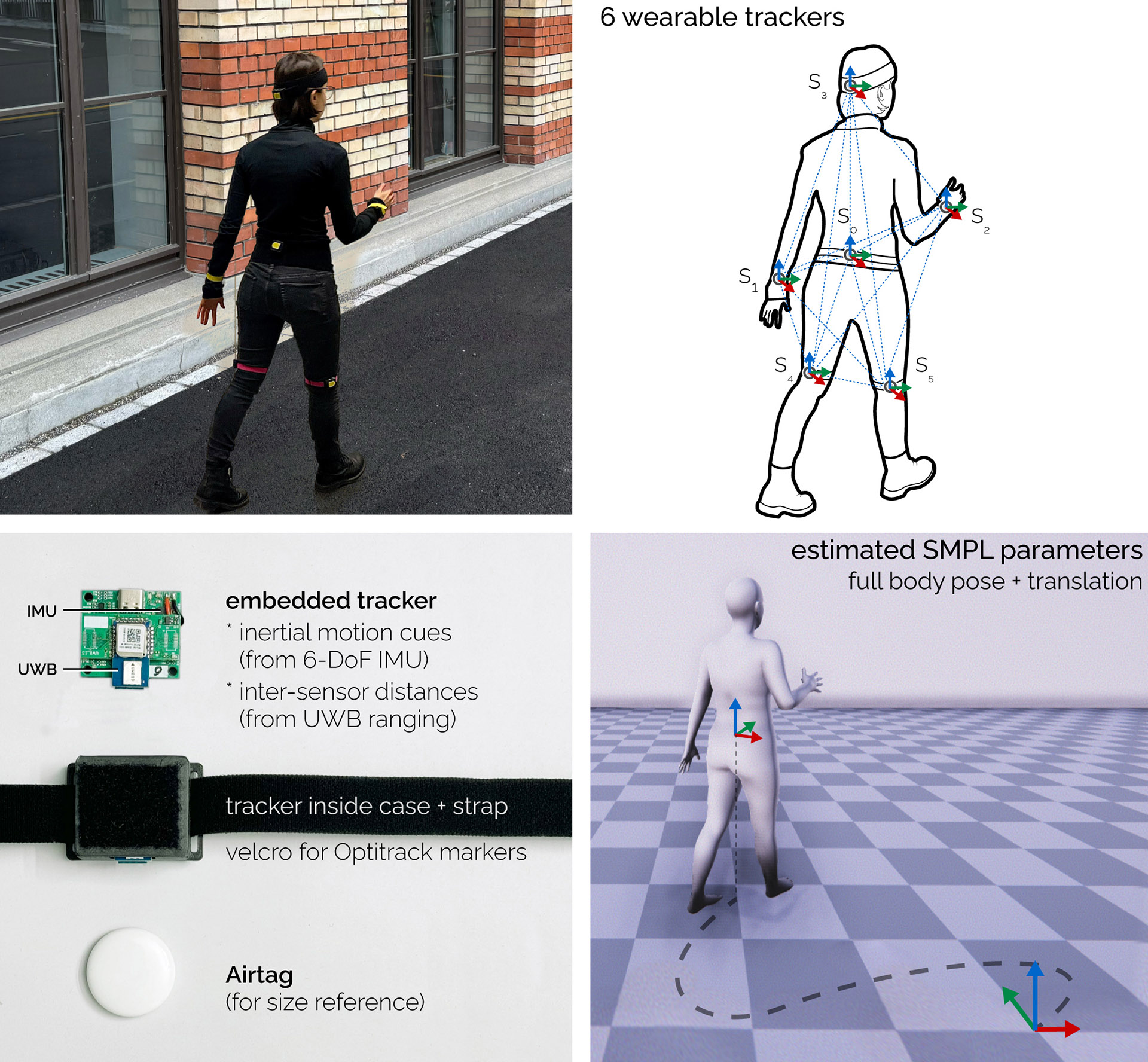

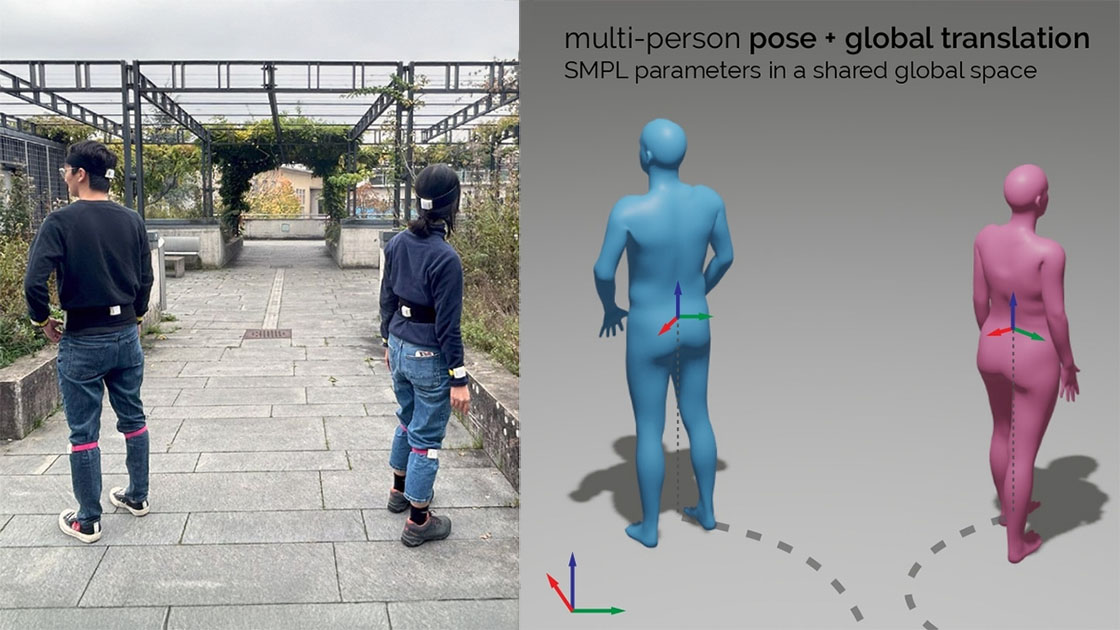

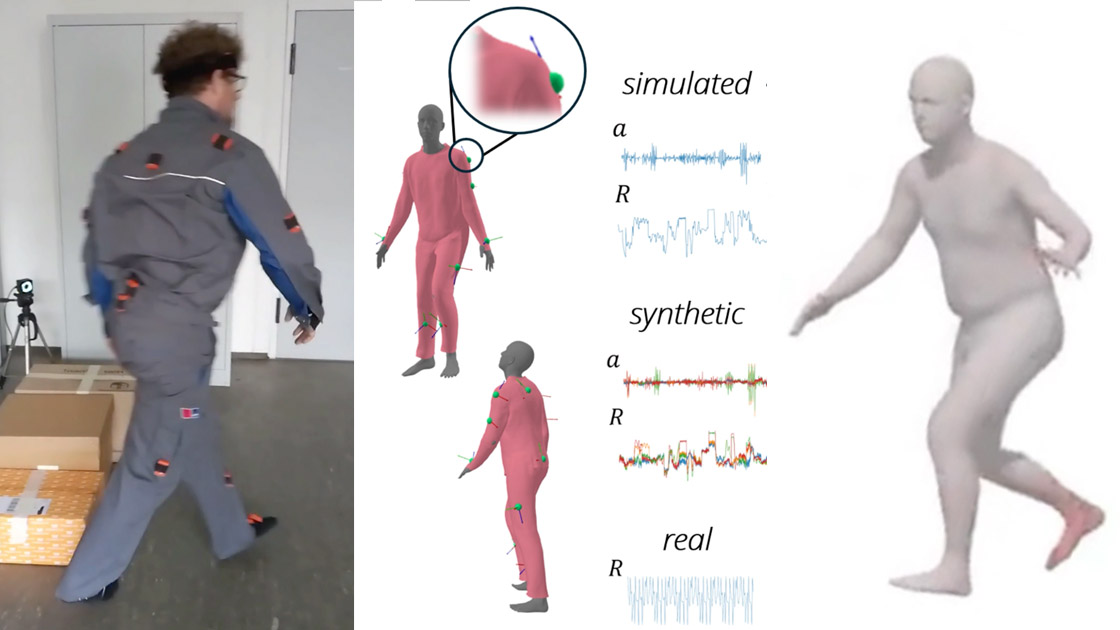

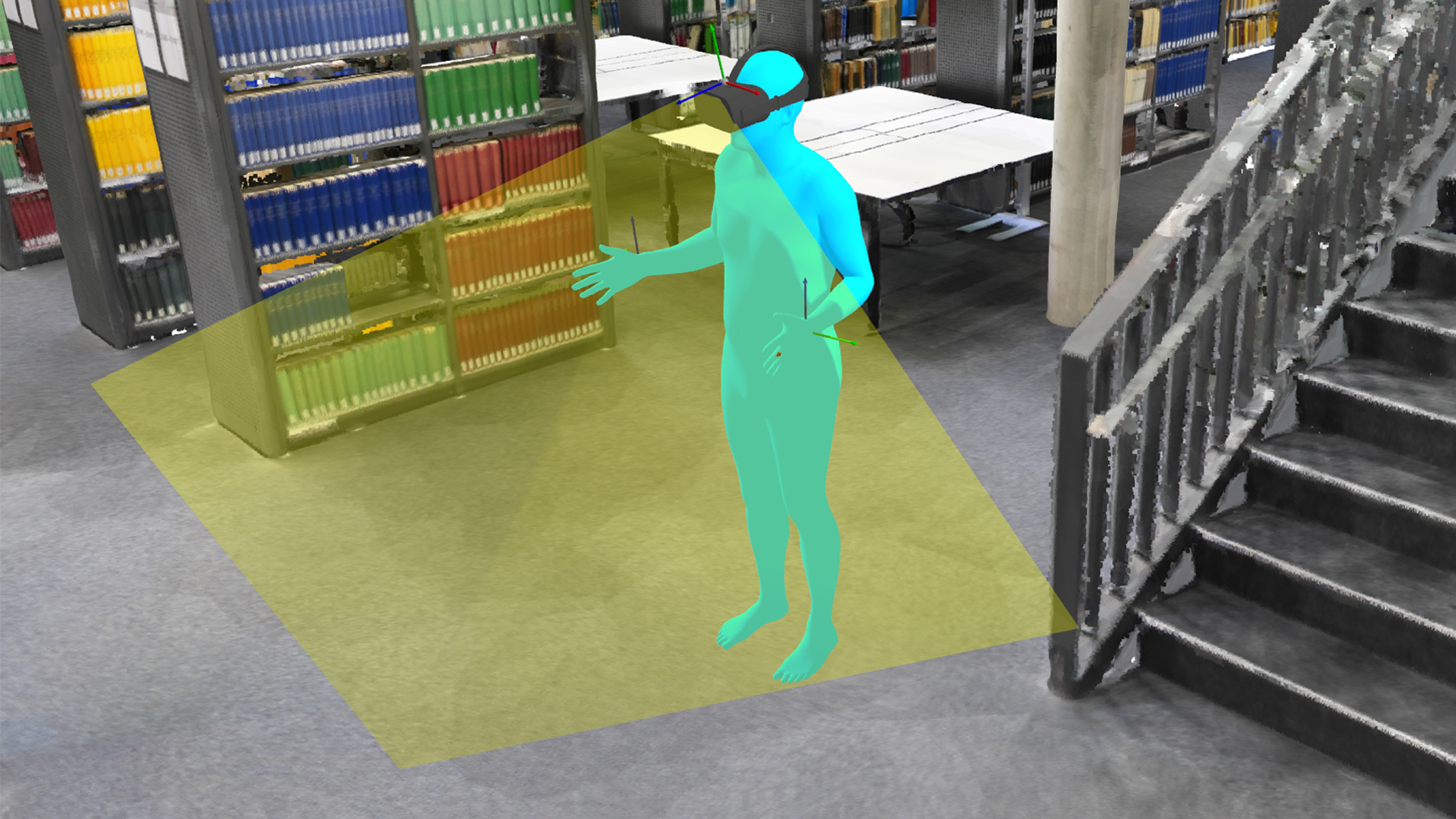

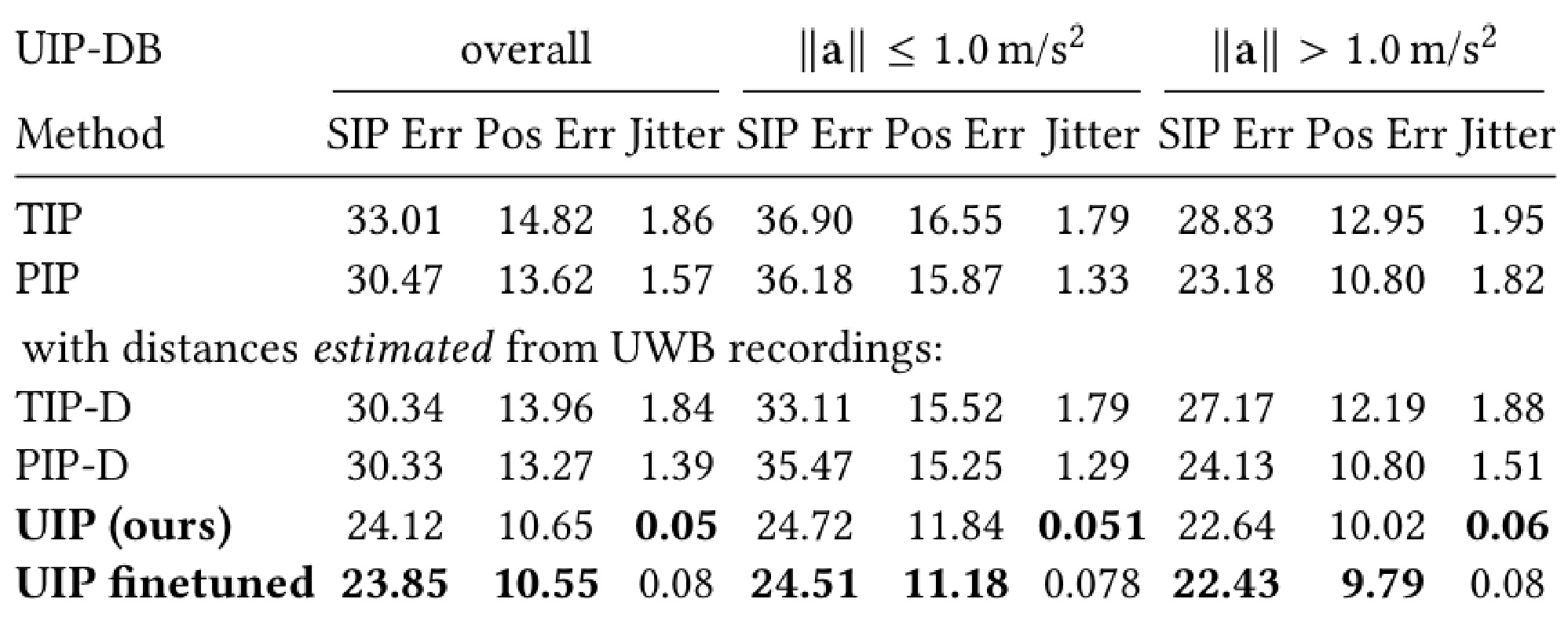

While camera-based capture systems remain the gold standard for recording human motion, learning-based tracking systems based on sparse wearable sensors are gaining popularity. Most commonly, they use inertial sensors, whose propensity for drift and jitter has so far limited tracking accuracy. In this paper, we propose Ultra Inertial Poser, a novel 3D full-body pose estimation method that constrains drift and jitter in inertial tracking via inter-sensor distances. We estimate these distances across sparse sensor setups using a lightweight embedded tracker that augments inexpensive off-the-shelf 6D inertial measurement units with ultra-wideband radio-based ranging, dynamically and without the need for stationary reference anchors. Our method then fuses these inter-sensor distances with the 3D states estimated from each sensor. Our graph-based machine learning model processes the 3D states and distances to estimate a person’s 3D full-body pose and translation. To train our model, we synthesize inertial measurements and distance estimates from the motion capture database AMASS. For evaluation, we contribute a novel motion dataset of 10 participants who performed 25 motion types, captured by 6 wearable IMU+UWB trackers and an optical motion capture system, totaling 200 minutes of synchronized sensor data (UIP-DB). Our extensive experiments show state-of-the-art performance for our method over PIP and TIP, reducing position error from 13.62 to 10.65 cm (22% better) and lowering jitter from 1.56 to 0.055 km/s^3 (a reduction of 97%).
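To make the idea of inter-sensor distances concrete, the following minimal sketch computes ideal (noise-free) pairwise distances for one frame from known 3D sensor positions, as one might do when deriving distance inputs from motion capture data. The function name `pairwise_distances`, the sensor positions, and the body placements in the comments are illustrative assumptions, not the authors' implementation; real UWB ranging would additionally exhibit noise and occlusion effects that this sketch ignores.

```python
import math
from itertools import combinations


def pairwise_distances(positions):
    """Return {(i, j): Euclidean distance} for every unordered sensor pair."""
    return {
        (i, j): math.dist(positions[i], positions[j])
        for i, j in combinations(range(len(positions)), 2)
    }


# Hypothetical positions (in metres) of 6 sensors for a single frame.
# The placements named in the comments are assumptions for illustration only.
sensor_positions = [
    (0.0, 0.0, 1.0),    # sensor 0 (e.g. pelvis)
    (0.0, 0.0, 1.7),    # sensor 1 (e.g. head)
    (-0.4, 0.0, 1.0),   # sensor 2 (e.g. left wrist)
    (0.4, 0.0, 1.0),    # sensor 3 (e.g. right wrist)
    (-0.15, 0.0, 0.5),  # sensor 4 (e.g. left leg)
    (0.15, 0.0, 0.5),   # sensor 5 (e.g. right leg)
]

distances = pairwise_distances(sensor_positions)

# 6 sensors yield 6 * 5 / 2 = 15 unique inter-sensor distances per frame.
print(len(distances))
```

Because distances depend only on relative sensor positions, they provide a constraint that does not accumulate error over time the way integrated inertial measurements do.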

Video

Reference

Rayan Armani, Changlin Qian, Jiaxi Jiang, and Christian Holz. Ultra Inertial Poser: Scalable Motion Capture and Tracking from Sparse Inertial Sensors and Ultra-Wideband Ranging. In Proceedings of ACM SIGGRAPH 2024.

BibTeX citation

@inproceedings{armani2024ultrainertialposer,
  author = {Armani, Rayan and Qian, Changlin and Jiang, Jiaxi and Holz, Christian},
  title = {{Ultra Inertial Poser}: Scalable Motion Capture and Tracking from Sparse Inertial Sensors and Ultra-Wideband Ranging},
  year = {2024},
  isbn = {9798400705250},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3641519.3657465},
  doi = {10.1145/3641519.3657465},
  booktitle = {ACM SIGGRAPH 2024 Conference Papers},
  articleno = {51},
  numpages = {11},
  keywords = {Human pose estimation, IMU, UWB, sparse tracking},
  location = {Denver, CO, USA},
  series = {SIGGRAPH '24}
}

More images

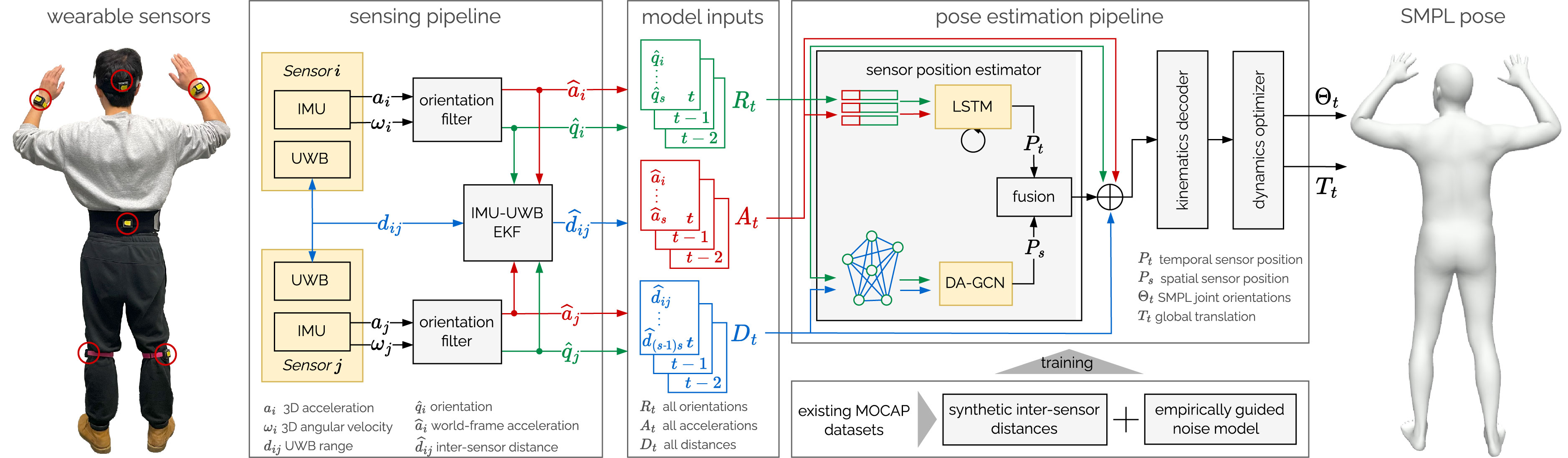

Figure 2: Overview of our method. The person is wearing 6 sensor nodes, each featuring an IMU and a UWB radio. Our method processes the raw data from each sensor to estimate sequences of global orientations, global accelerations, and inter-sensor distances. These serve as input into our learning-based pose estimation to predict leaf-joint angles as well as the global root orientation and translation as SMPL parameters.
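As a rough illustration of how an acceleration sequence like the one named in this caption can be obtained from positional motion capture data, the sketch below uses a central second-order finite difference. This is a commonly used approximation and is an assumption here; the page does not state how the authors synthesize accelerations. The function name `synthesize_accelerations` and the 60 Hz frame rate are likewise hypothetical.

```python
def synthesize_accelerations(positions, dt):
    """Approximate accelerations from a position sequence.

    Uses the central second difference a_t = (p_{t-1} - 2 p_t + p_{t+1}) / dt^2,
    so the result has two fewer frames than the input.
    """
    accels = []
    for prev, cur, nxt in zip(positions, positions[1:], positions[2:]):
        accels.append(
            tuple((p - 2.0 * c + n) / (dt * dt) for p, c, n in zip(prev, cur, nxt))
        )
    return accels


dt = 1.0 / 60.0  # assumed frame interval (60 Hz), for illustration only

# Test trajectory x(t) = t^2 along the x-axis, whose true acceleration is 2 m/s^2.
trajectory = [((k * dt) ** 2, 0.0, 0.0) for k in range(5)]

accels = synthesize_accelerations(trajectory, dt)
print(len(accels))  # 5 input frames give 3 interior acceleration estimates
```

For a quadratic trajectory the central difference is exact up to floating-point error, which makes it a convenient sanity check before applying the function to real motion data.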

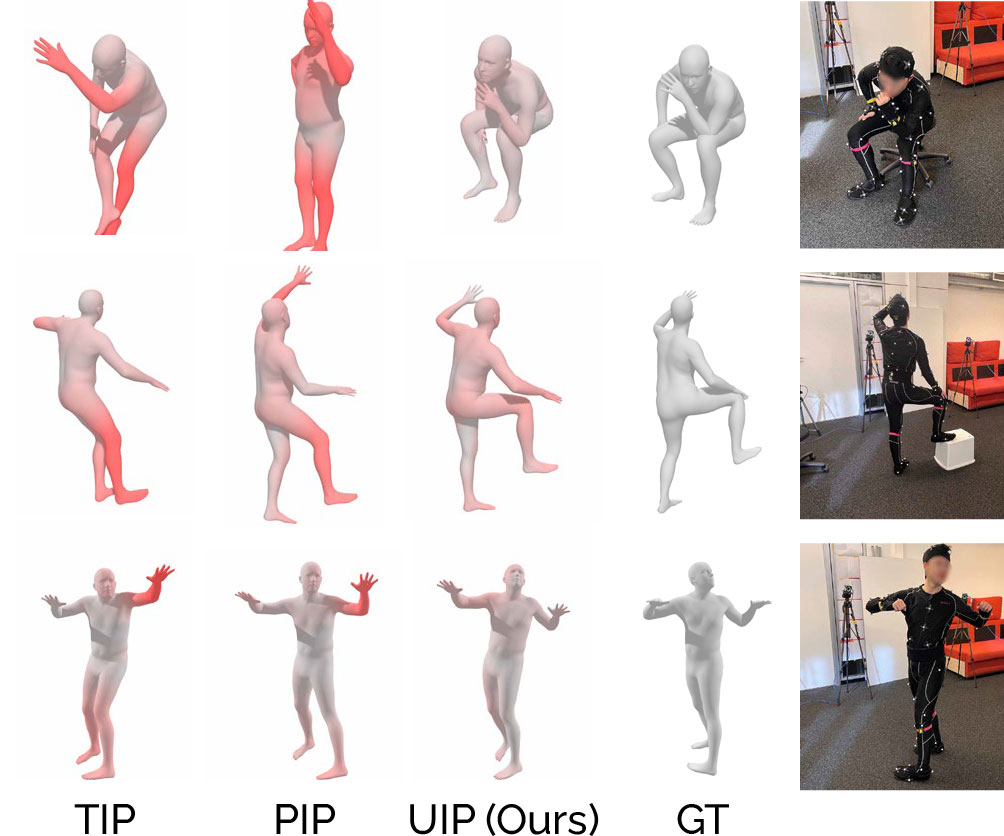

Figure 3: Qualitative comparison of pose estimates from the recordings in our dataset.

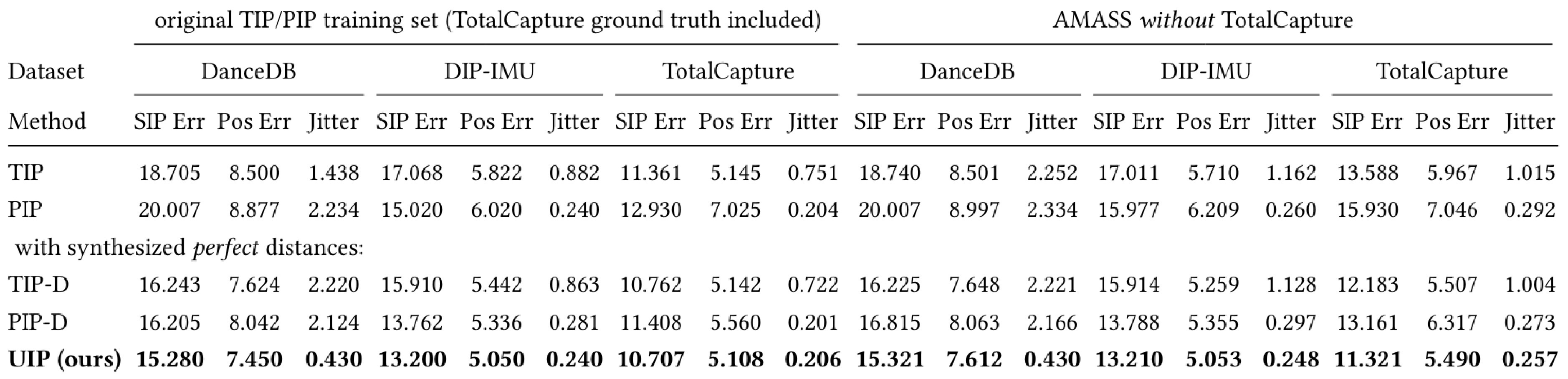

Table 1: Comparison with state-of-the-art methods on existing datasets augmented with simulated, ideal inter-sensor distances.

Table 2: Comparisons on UIP-DB with acceleration, orientation and inter-sensor distances from real off-the-shelf sensors.

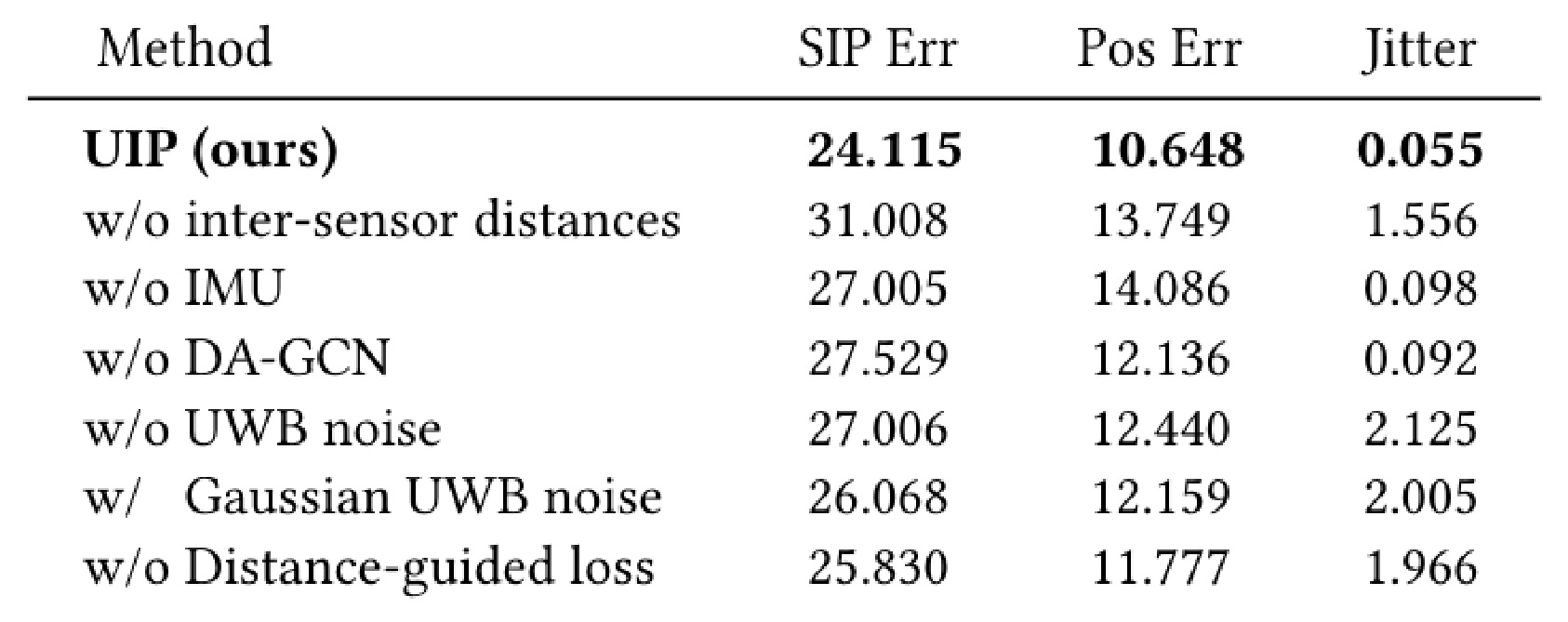

Table 3: Results of our ablation studies.