AvatarPoser

Articulated Full-Body Pose Tracking from Sparse Motion Sensing

ECCV 2022

Abstract

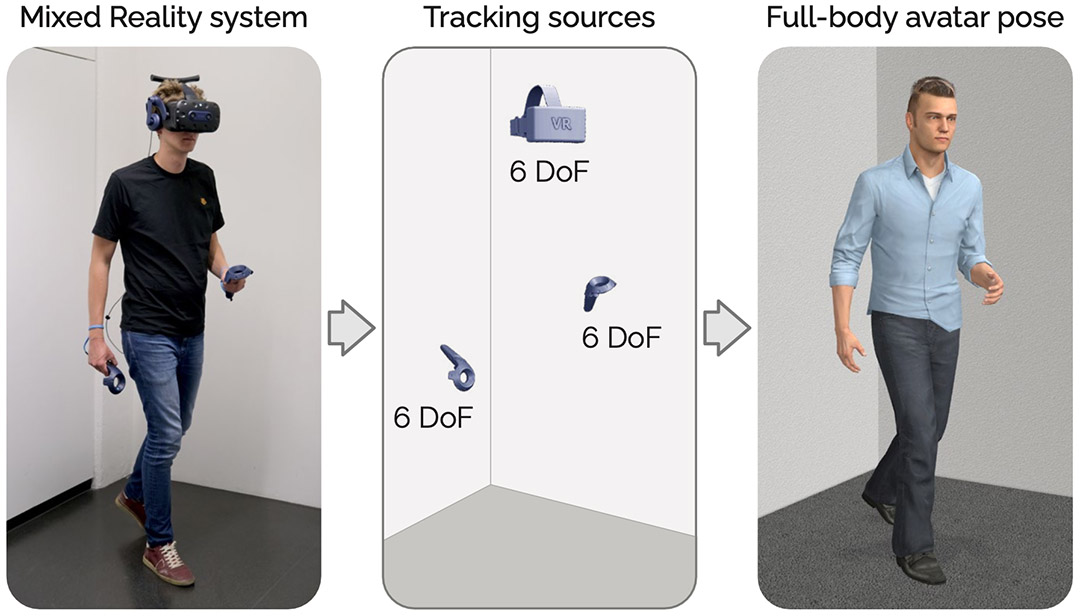

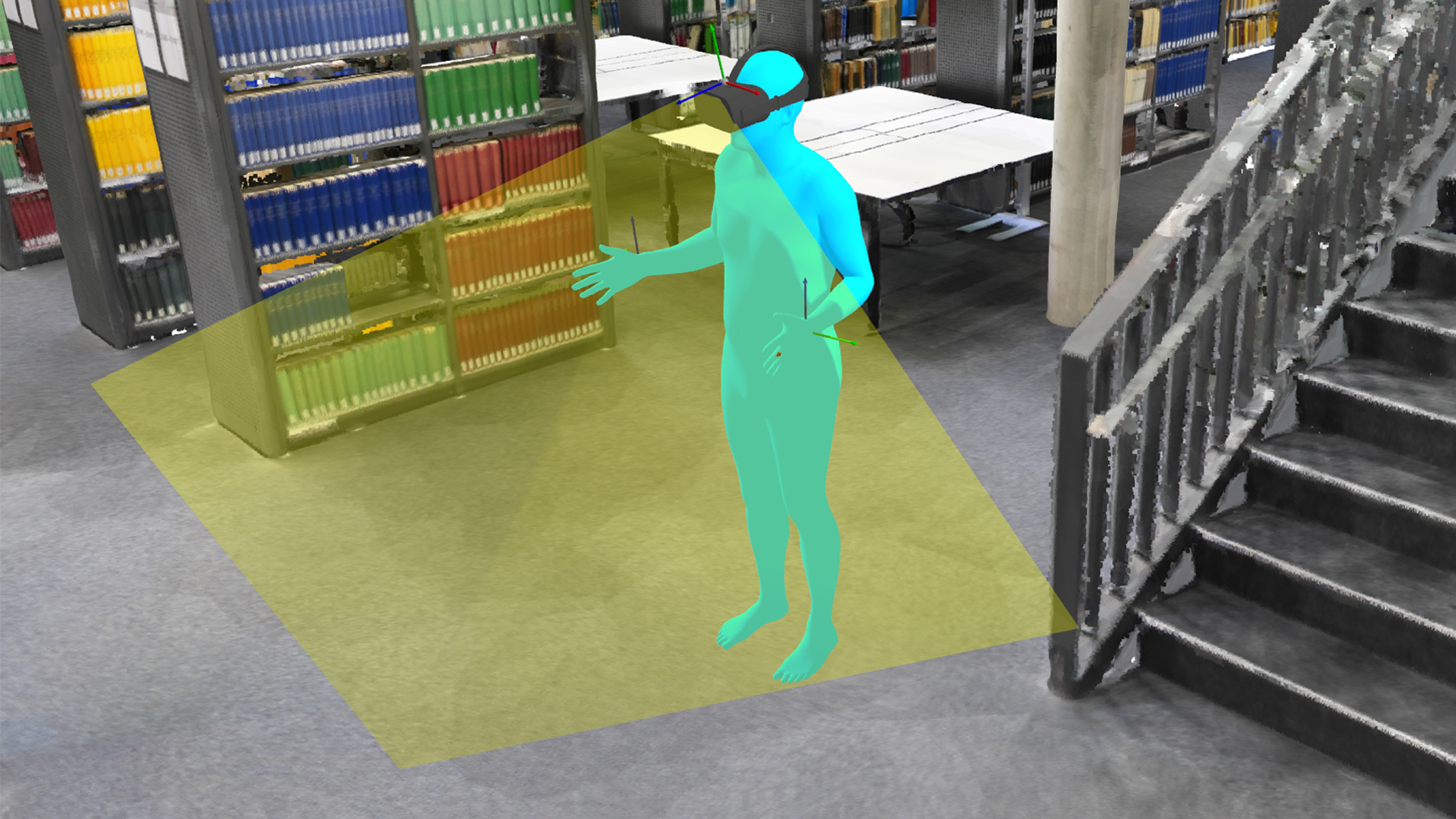

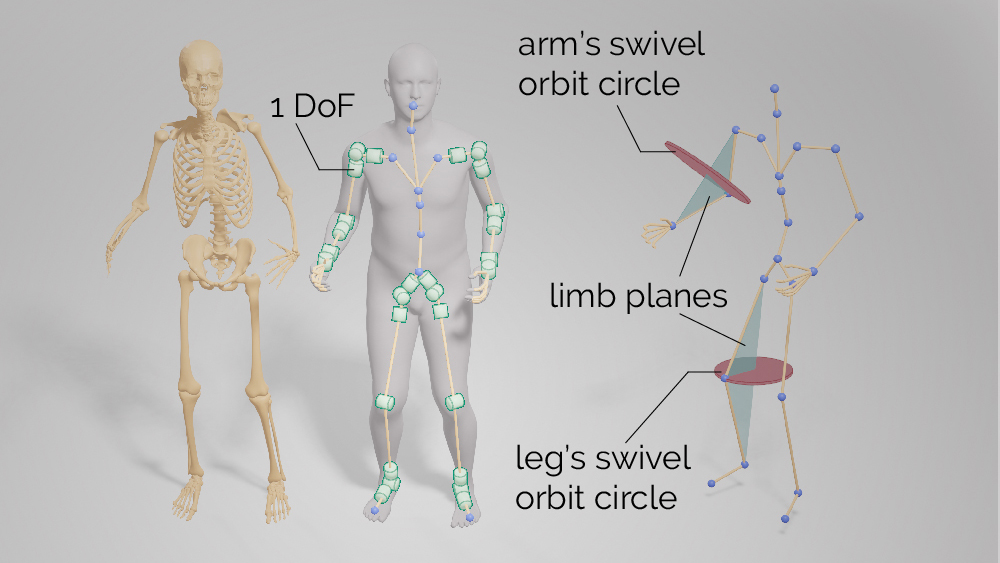

Today’s Mixed Reality head-mounted displays track the user’s head pose in world space as well as the user’s hands for interaction in both Augmented Reality and Virtual Reality scenarios. While this is adequate to support user input, it unfortunately limits users’ virtual representations to just their upper bodies. Current systems thus resort to floating avatars, whose limitation is particularly evident in collaborative settings. To estimate full-body poses from the sparse input sources, prior work has incorporated additional trackers and sensors at the pelvis or lower body, which increases setup complexity and limits practical application in mobile settings. In this paper, we present AvatarPoser, the first learning-based method that predicts full-body poses in world coordinates using only motion input from the user’s head and hands. Our method builds on a Transformer encoder to extract deep features from the input signals and decouples global motion from the learned local joint orientations to guide pose estimation. To obtain accurate full-body motions that resemble motion capture animations, we refine the arm joints’ positions using an optimization routine with inverse kinematics to match the original tracking input. In evaluations on large motion capture datasets (AMASS), AvatarPoser achieved new state-of-the-art results. At the same time, our method’s inference speed supports real-time operation, providing a practical interface to support holistic avatar control and representation for Metaverse applications.

Video

Reference

Jiaxi Jiang, Paul Streli, Huajian Qiu, Andreas Fender, Larissa Laich, Patrick Snape, and Christian Holz. AvatarPoser: Articulated Full-Body Pose Tracking from Sparse Motion Sensing. In European Conference on Computer Vision 2022 (ECCV).

BibTeX citation

@inproceedings{jiang2022avatarposer, title={AvatarPoser: Articulated Full-Body Pose Tracking from Sparse Motion Sensing}, author={Jiang, Jiaxi and Streli, Paul and Qiu, Huajian and Fender, Andreas and Laich, Larissa and Snape, Patrick and Holz, Christian}, booktitle={Proceedings of European Conference on Computer Vision}, year={2022}, organization={Springer} }

Method


Figure 2: Overview of AvatarPoser. Our framework for full-body avatar pose estimation in Mixed Reality integrates four parts: a Transformer Encoder, a Stabilizer, a Forward-Kinematics Module, and an Inverse-Kinematics Module. The Transformer Encoder extracts deep pose features from the headset and hand signals of previous time steps; these features are split into global and local branches for global and local pose estimation, respectively. The Stabilizer is responsible for global motion navigation: it decouples global orientation from the pose features and estimates global translation from the head position through the body’s kinematic chain. The Forward-Kinematics Module calculates joint positions from a human skeleton model and a predicted body pose. The Inverse-Kinematics Module adjusts the estimated rotation angles of the shoulder and elbow joints to reduce hand position errors.
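To make the Forward-Kinematics Module and the Stabilizer's translation step more concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the three-joint toy skeleton, the function names `forward_kinematics` and `root_from_head`, and the rotation-matrix pose representation are all assumptions chosen for illustration. The sketch computes joint positions from local joint rotations, then recovers the root translation by posing the skeleton at the origin and shifting it so that the head lands on the tracked head position.

```python
import numpy as np


def rot_z(theta):
    """Rotation matrix for a rotation of `theta` radians about the z-axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def forward_kinematics(parents, offsets, local_rots, root_pos):
    """Compute world-space joint positions for a kinematic tree.

    parents[i]    : index of joint i's parent (-1 for the root); parents
                    must appear before their children.
    offsets[i]    : rest-pose offset of joint i from its parent (3-vector).
    local_rots[i] : 3x3 rotation of joint i relative to its parent.
    root_pos      : world-space position of the root joint.
    """
    n = len(parents)
    world_rots = [None] * n
    positions = np.zeros((n, 3))
    for i in range(n):
        p = parents[i]
        if p < 0:
            world_rots[i] = local_rots[i]
            positions[i] = root_pos
        else:
            # Accumulate rotations down the chain; the bone offset is
            # rotated by the parent's world rotation.
            world_rots[i] = world_rots[p] @ local_rots[i]
            positions[i] = positions[p] + world_rots[p] @ offsets[i]
    return positions


def root_from_head(parents, offsets, local_rots, head_idx, head_pos_world):
    """Recover the root translation from a tracked head position.

    The skeleton is posed with its root at the origin; the root is then
    shifted so the head joint coincides with the tracked head position.
    """
    posed = forward_kinematics(parents, offsets, local_rots, np.zeros(3))
    return head_pos_world - posed[head_idx]


# Hypothetical toy skeleton: pelvis (0) -> spine (1) -> head (2).
parents = [-1, 0, 1]
offsets = np.array([[0.0, 0.0, 0.0], [0.0, 0.5, 0.0], [0.0, 0.4, 0.0]])
local_rots = [np.eye(3), rot_z(np.pi / 2), np.eye(3)]

tracked_head = np.array([1.0, 1.5, 2.0])  # stand-in for the headset position
root = root_from_head(parents, offsets, local_rots, 2, tracked_head)
joints = forward_kinematics(parents, offsets, local_rots, root)
```

With the root placed this way, the head joint in `joints` coincides with `tracked_head` by construction, so any error in the predicted local pose shows up at the other joints rather than at the head.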

Results


Figure 3: Visual comparisons of different methods based on given sparse inputs for various motions. Avatars are color-coded to show errors in red.


Figure 4: Visual results of our proposed method AvatarPoser compared to state-of-the-art alternatives for a running motion. Changes in color denote different timestamps.


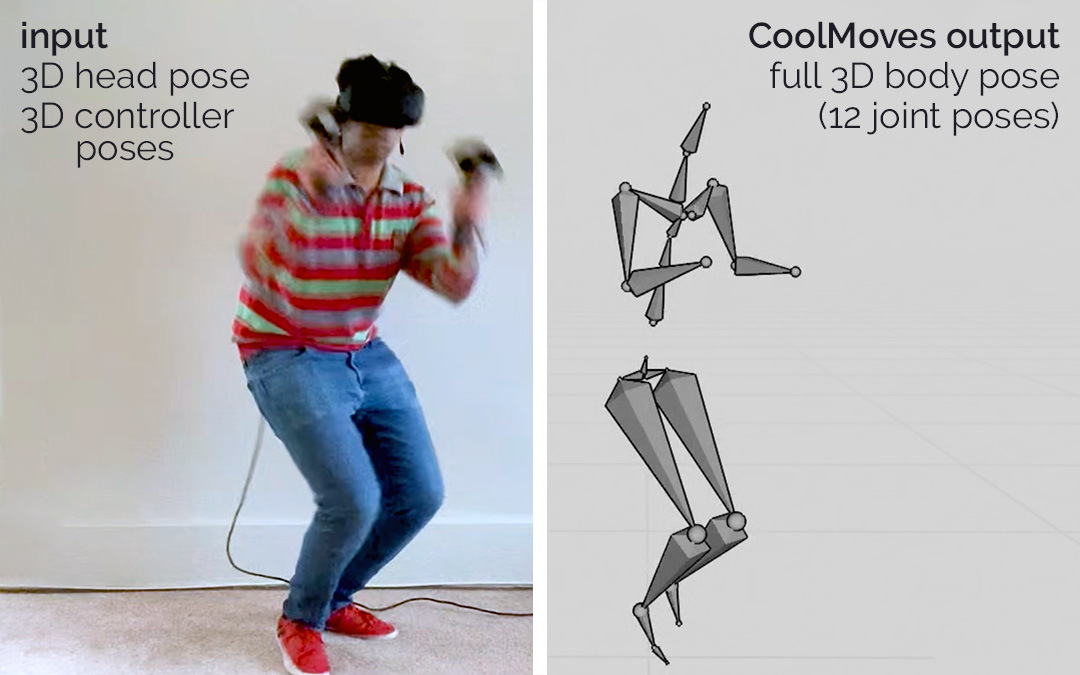

Figure 5: We tested our method on recorded motion data from a VIVE Pro headset and two VIVE controllers. Each column shows the user’s pose (top) and our prediction (bottom).