Causality-preserving Asynchronous Reality

ACM CHI 2022 Best Paper

Abstract

Mixed Reality is gaining interest as a platform for collaboration and focused work to a point where it may supersede current office settings in future workplaces. At the same time, we expect that interaction with physical objects and face-to-face communication will remain crucial for future work environments, which is a particular challenge in fully immersive Virtual Reality. In this work, we reconcile those requirements through a user’s individual Asynchronous Reality, which enables seamless physical interaction across time. When a user is unavailable, e.g., focused on a task or in a call, our approach captures co-located or remote physical events in real-time, constructs a causality graph of co-dependent events, and lets immersed users revisit them at a suitable time in a causally accurate way. Enabled by our system AsyncReality, we present a workplace scenario that includes walk-in interruptions during a person’s focused work, physical deliveries, and transient spoken messages. We then generalize our approach to a use-case agnostic concept and system architecture. We conclude by discussing the implications of an Asynchronous Reality for future offices.

Video

Reference

Andreas Fender and Christian Holz. Causality-preserving Asynchronous Reality. In Proceedings of ACM CHI 2022.

More images

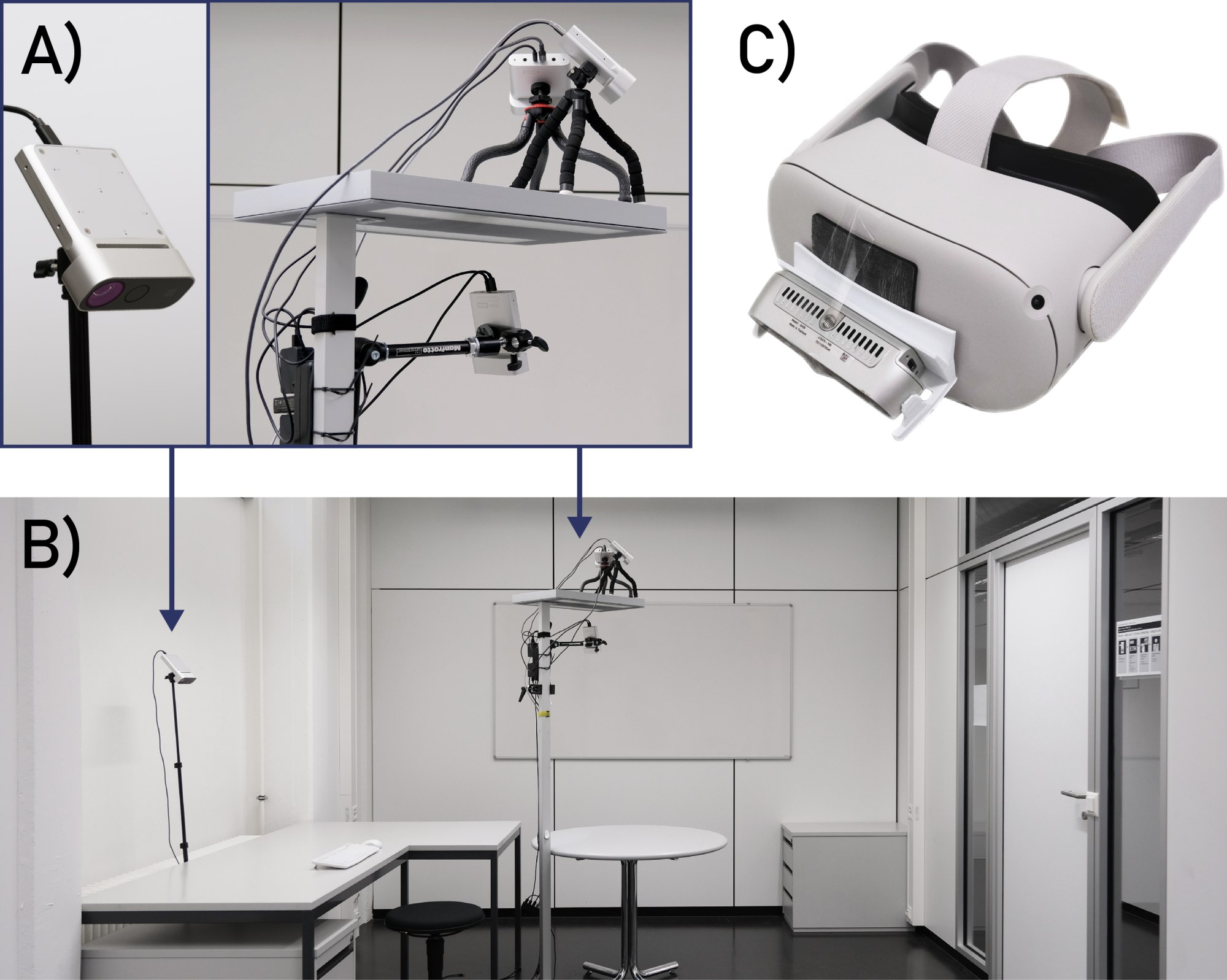

We installed four Azure Kinect cameras (A) in an office (B). The user wears an Oculus Quest 2 (C) with a RealSense D435 camera mounted to it (slightly angled downwards to capture hands and objects).

A virtual replication of the office in our scenario. Instead of the real office, the user sees this reduced virtual representation. Depending on the system state, we augment this static replication with virtual content as well as parts of real-time point clouds (live or recorded).

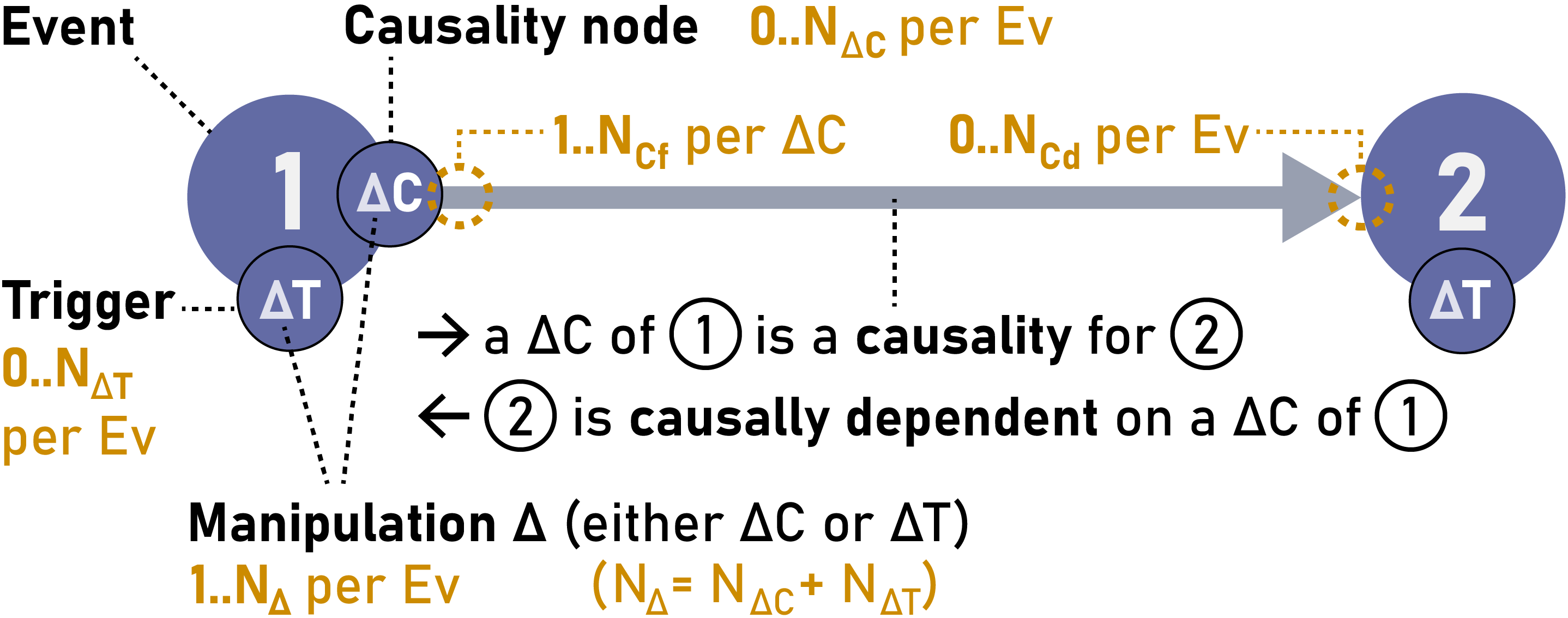

Generalized components of a Causality Graph. Every event has one or more Manipulations. Each Manipulation is either a Causality Node (C), which acts as a dependency for at least one other event, or a Trigger (T), if the Manipulation is not a dependency for any other event. An event needs to have all of its dependencies ‘fulfilled’ before it can be played back. An event can depend on one or more Causality Nodes (not necessarily from the same event).
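The components described in this caption can be illustrated with a minimal Python sketch. It is not the AsyncReality implementation: the names `Event`, `classify`, and `playback_order`, the example events, and the rule that playing back an event fulfills all of its Manipulations are assumptions made for illustration only.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Event:
    """A recorded event (hypothetical structure, not taken from the paper)."""
    name: str
    manipulations: list[str]                            # one or more Manipulations
    depends_on: set[str] = field(default_factory=set)   # Manipulations of other events


def classify(events: list[Event]) -> dict[str, str]:
    """Label each Manipulation 'C' (Causality Node) if at least one event
    depends on it, otherwise 'T' (Trigger)."""
    needed: set[str] = set()
    for event in events:
        needed |= event.depends_on
    return {
        m: ("C" if m in needed else "T")
        for event in events
        for m in event.manipulations
    }


def playback_order(events: list[Event]) -> list[str]:
    """Return an order in which every event is played back only after all
    of its dependencies are fulfilled.

    Assumption: playing back an event fulfills all of its Manipulations.
    """
    fulfilled: set[str] = set()
    pending = list(events)
    order: list[str] = []
    while pending:
        ready = [e for e in pending if e.depends_on <= fulfilled]
        if not ready:
            raise ValueError("some dependencies can never be fulfilled")
        for event in ready:
            order.append(event.name)
            fulfilled.update(event.manipulations)
            pending.remove(event)
    return order


# Hypothetical office scenario: a package is delivered, then picked up later.
events = [
    Event("pickup", ["take_package"], depends_on={"place_package"}),
    Event("delivery", ["place_package", "leave_note"]),
]
labels = classify(events)
order = playback_order(events)
```

In this example, `place_package` is a Causality Node because the pickup depends on it, while `leave_note` and `take_package` are Triggers. The pickup cannot be played back before the delivery, regardless of the order in which the events are listed.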

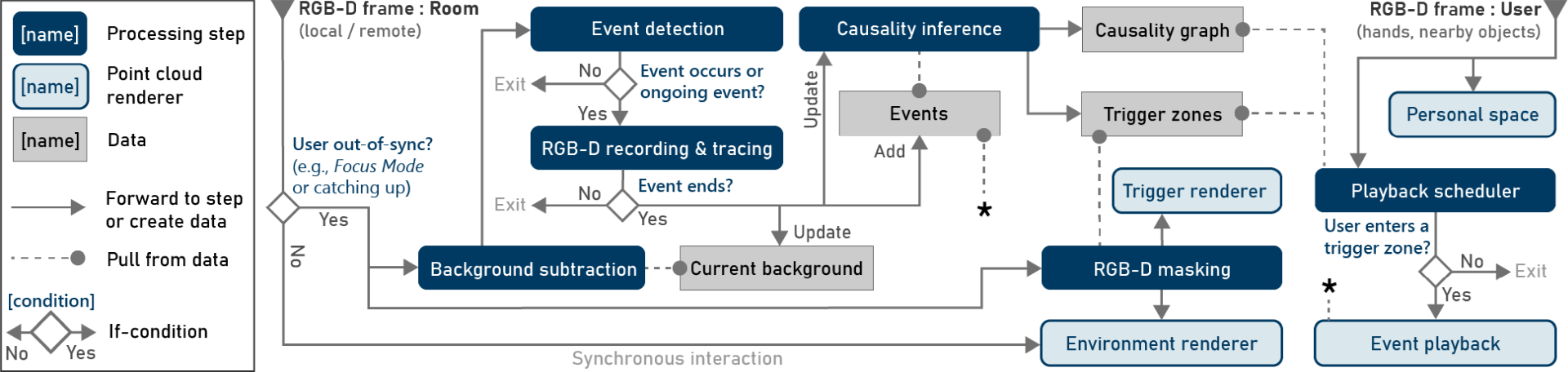

Overview of the general system architecture and data flow for implementing an Asynchronous Reality. The system receives RGB-D data from the local or remote room (top-left) as well as from the space around the user (top-right). If the user is unavailable (e.g., Focus Mode) or currently catching up with reality, then the asynchronous processing and rendering pipeline is used (see left-most condition). Otherwise, the system simply renders the point clouds live (local space and/or from remote space during calls).
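The left-most condition in this data flow can be sketched as a small routing step. The following Python sketch is illustrative only: `UserState`, `uses_async_pipeline`, and `FrameRouter` are hypothetical names, frames are treated as opaque values, and the assumption that frames are buffered while the asynchronous pipeline is active is not confirmed by the caption.

```python
from enum import Enum, auto
from typing import Any, List, Optional


class UserState(Enum):
    AVAILABLE = auto()      # user can perceive the room live
    FOCUS_MODE = auto()     # user is unavailable
    CATCHING_UP = auto()    # user is revisiting recorded events


def uses_async_pipeline(state: UserState) -> bool:
    """The asynchronous pipeline is used when the user is unavailable
    or currently catching up with reality."""
    return state in (UserState.FOCUS_MODE, UserState.CATCHING_UP)


class FrameRouter:
    """Routes incoming RGB-D frames to live rendering or to a recording buffer."""

    def __init__(self) -> None:
        self.recorded: List[Any] = []  # assumption: frames are kept for later playback

    def route(self, state: UserState, frame: Any) -> Optional[Any]:
        """Return the frame for live rendering, or None if it was buffered
        for the asynchronous pipeline instead."""
        if uses_async_pipeline(state):
            self.recorded.append(frame)
            return None
        return frame


router = FrameRouter()
live = router.route(UserState.AVAILABLE, "frame-0")
buffered = router.route(UserState.FOCUS_MODE, "frame-1")
```

Keeping the condition in a single function mirrors the figure, where one decision selects between the asynchronous pipeline and live point cloud rendering.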