InteractionAdapt

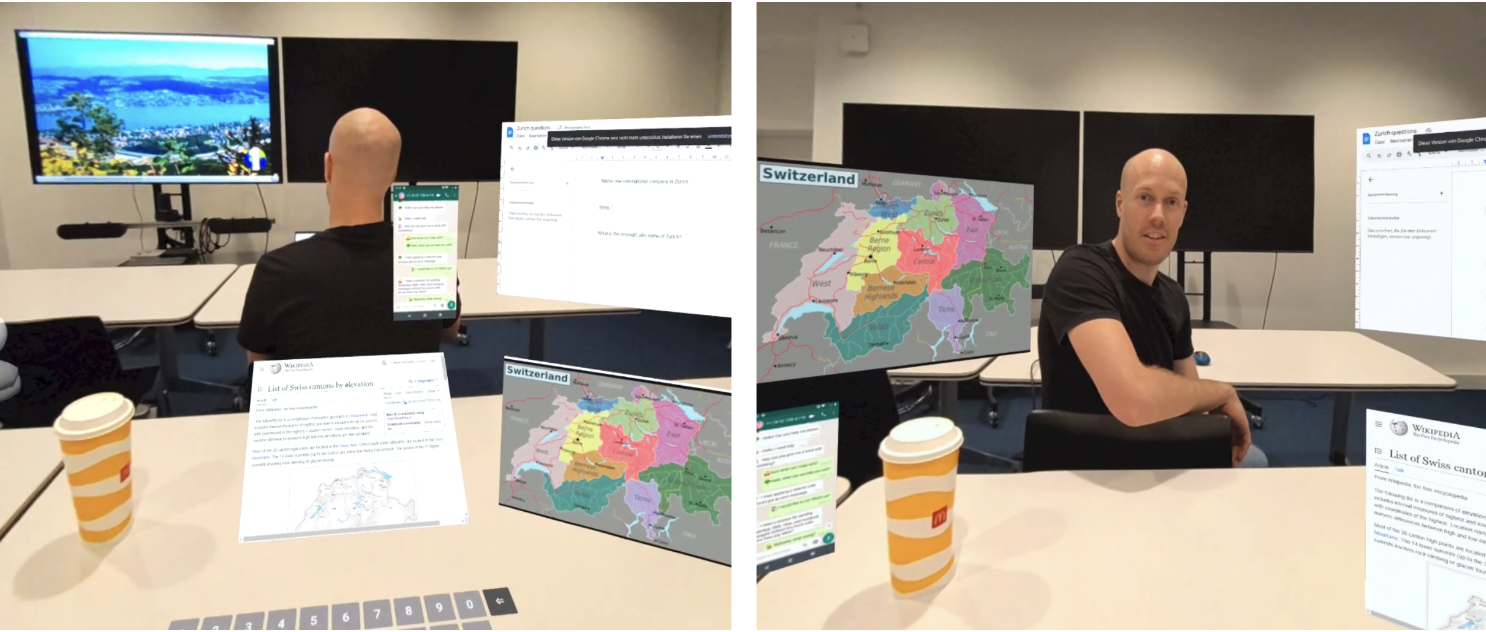

Interaction-driven Workspace Adaptation for Situated Virtual Reality Environments

ACM UIST 2023

Abstract

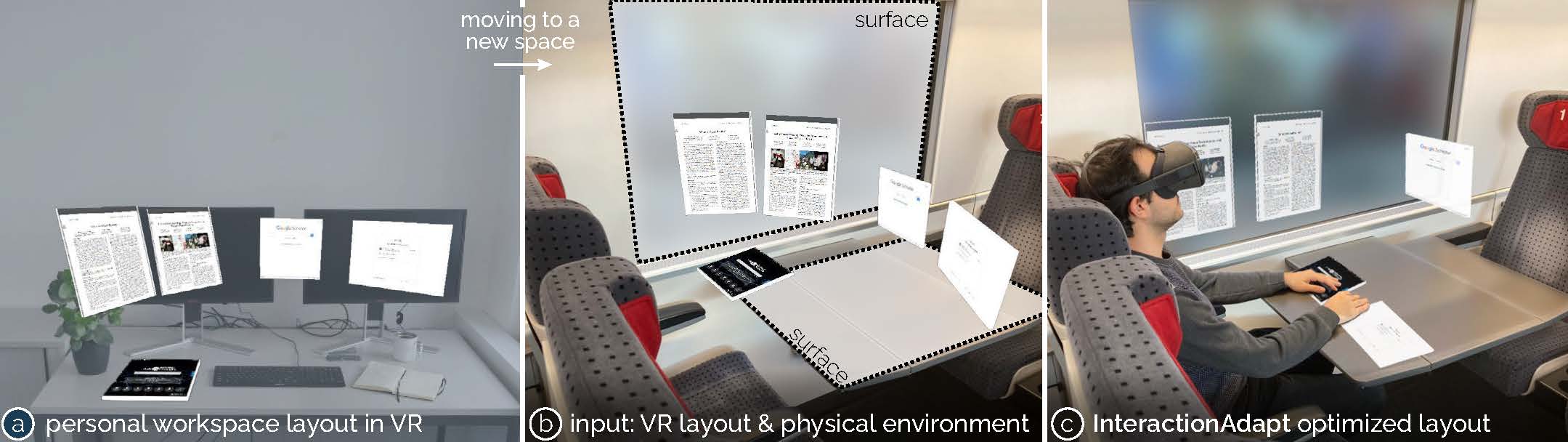

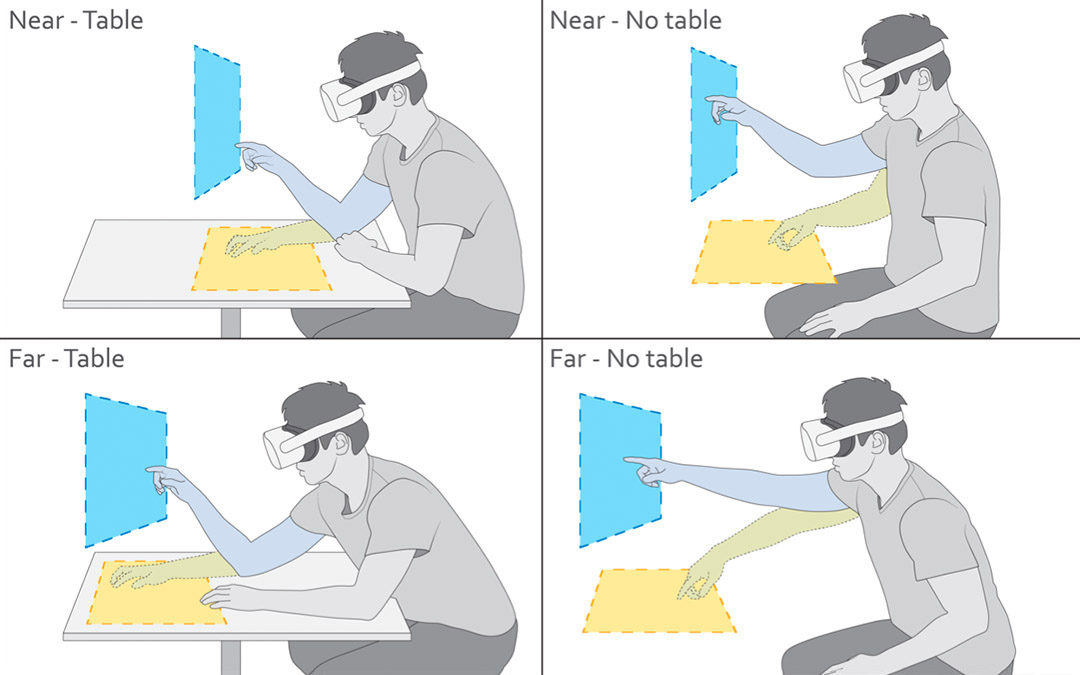

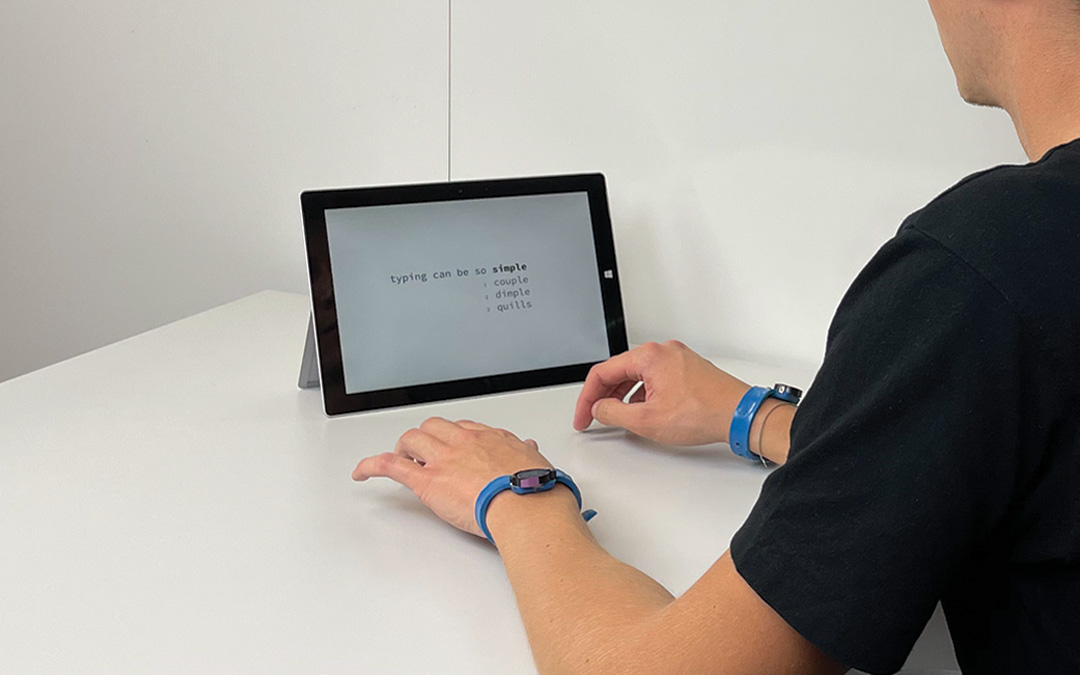

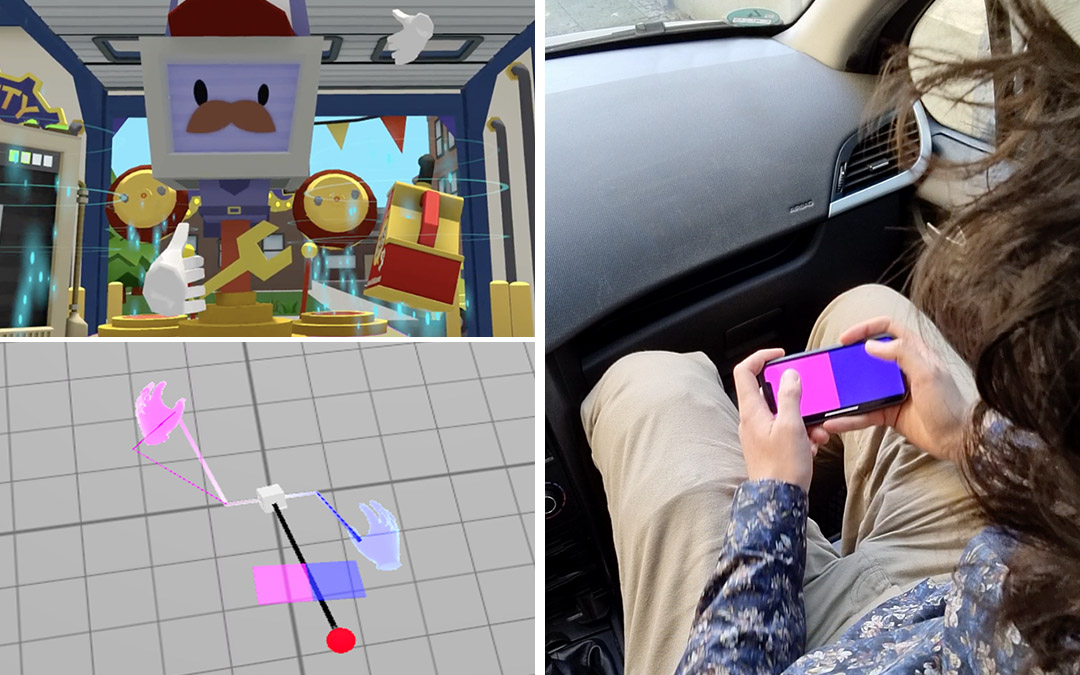

Virtual Reality (VR) has the potential to transform how we work: it enables flexible and personalized workspaces beyond what is possible in the physical world. However, while most VR applications are designed to operate in a single empty physical space, work environments are often populated with real-world objects and are increasingly diverse due to the growing amount of work in mobile scenarios. In this paper, we present InteractionAdapt, an optimization-based method for adapting VR workspaces for situated use in varying everyday physical environments. It allows VR users to transition between real-world settings while retaining most of their personalized VR environment for efficient interaction and ensuring temporal consistency and visibility. InteractionAdapt leverages physical affordances in the real world to optimize each UI element for the input technique best suited to it, including on-surface touch, mid-air touch and pinch, and cursor control. Our optimization term models the trade-off across these interaction techniques based on experimental findings of 3D interaction in situated physical environments. Two evaluations of InteractionAdapt, in a selection task and a travel planning task, established that it supports efficient interaction and produces adapted layouts that participants preferred over several baselines. We further showcase the versatility of our approach through applications that cover a wide range of use cases.

Reference

Yi Fei Cheng, Christoph Gebhardt, and Christian Holz. InteractionAdapt: Interaction-driven Workspace Adaptation for Situated Virtual Reality Environments. In Proceedings of ACM UIST 2023.