Enabling People with Visual Impairments to Navigate Virtual Reality with a Haptic and Auditory Cane Simulation

ACM CHI 2018

Abstract

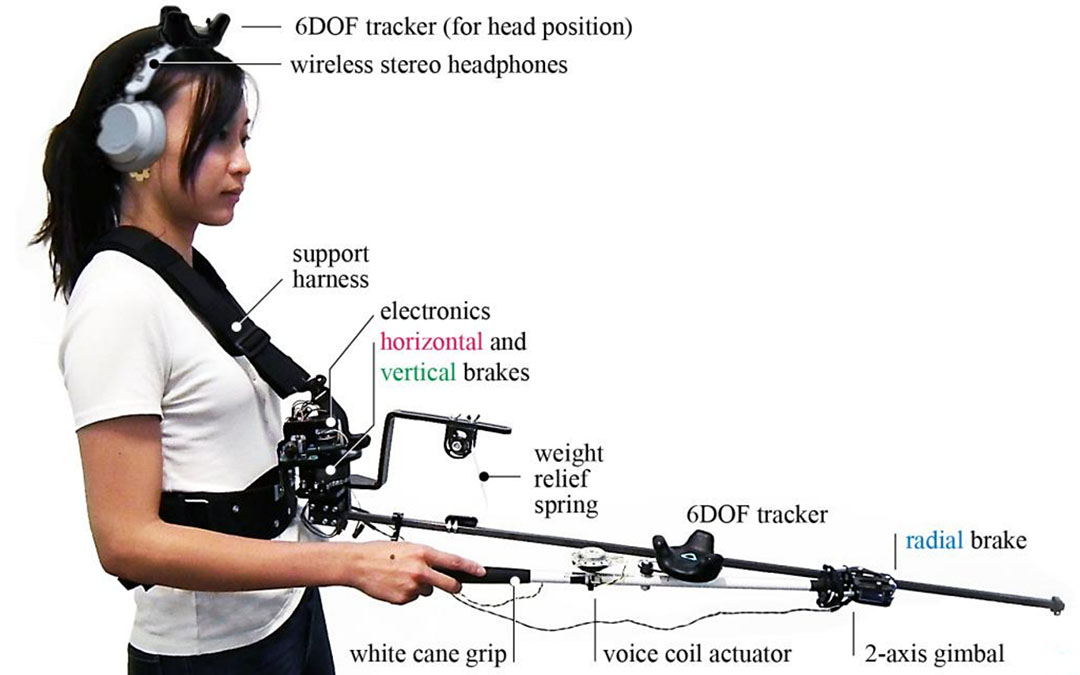

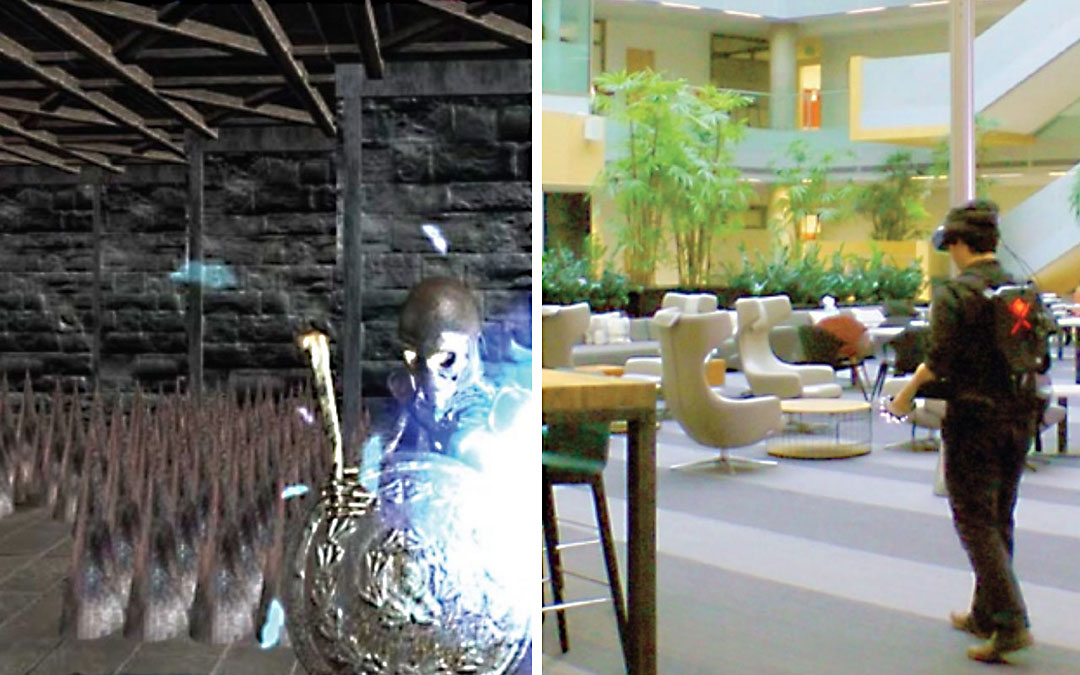

Traditional virtual reality (VR) mainly focuses on visual feedback, which is not accessible to people with visual impairments. We created Canetroller, a haptic cane controller that simulates white cane interactions, enabling people with visual impairments to navigate a virtual environment by transferring their cane skills into the virtual world. Canetroller provides three types of feedback: (1) physical resistance generated by a wearable programmable brake mechanism that physically impedes the controller when the virtual cane comes in contact with a virtual object; (2) vibrotactile feedback that simulates the vibrations when a cane hits an object or touches and drags across various surfaces; and (3) spatial 3D auditory feedback simulating the sound of real-world cane interactions. We designed indoor and outdoor VR scenes to evaluate the effectiveness of our controller. Our study showed that Canetroller was a promising tool that enabled visually impaired participants to navigate different virtual spaces. We discuss potential applications supported by Canetroller ranging from entertainment to mobility training.
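As a rough illustration of how the three feedback channels described in the abstract could be driven from a single contact event, here is a minimal Python sketch. It is not the Canetroller implementation: the `Contact` and `Feedback` types, the `feedback_for` function, the `blocks_sweep` flag, and the `"tap:<surface>"` / `"drag:<surface>"` pattern names are all hypothetical, introduced only for this example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Contact:
    """Hypothetical record of the virtual cane tip touching something."""
    surface: str        # illustrative label, e.g. "wall" or "carpet"
    position: Vec3      # contact point in scene coordinates
    is_new_hit: bool    # True on first impact, False while dragging
    blocks_sweep: bool  # assumption: True if the object should stop the cane


@dataclass
class Feedback:
    """Hypothetical commands for the three feedback channels."""
    brake_engaged: bool            # (1) physical resistance
    vibration: Optional[str]       # (2) vibrotactile pattern name
    sound: Optional[str]           # (3) audio clip name
    sound_position: Optional[Vec3]  # where to spatialize the sound


def feedback_for(contact: Optional[Contact]) -> Feedback:
    """Map the current cane contact (or None) to feedback commands."""
    if contact is None:
        # Cane is moving freely: release the brake, stay silent.
        return Feedback(False, None, None, None)

    # A first impact and a continued drag get different patterns.
    kind = "tap" if contact.is_new_hit else "drag"
    pattern = f"{kind}:{contact.surface}"

    return Feedback(
        brake_engaged=contact.blocks_sweep,
        vibration=pattern,
        sound=pattern,
        sound_position=contact.position,
    )
```

In this sketch, a call such as `feedback_for(Contact("wall", (1.0, 0.0, 2.0), True, True))` would engage the brake and request a tap pattern placed at the contact point, while dragging across a floor surface would leave the brake released. How the actual system selects vibration and audio signals is not specified in the abstract.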

Reference

Yuhang Zhao, Cynthia Bennett, Hrvoje Benko, Ed Cutrell, Christian Holz, Meredith Morris, and Mike Sinclair. Enabling People with Visual Impairments to Navigate Virtual Reality with a Haptic and Auditory Cane Simulation. In Proceedings of ACM CHI 2018.