Virtual Reality Without Vision

A Haptic and Auditory White Cane to Navigate Complex Virtual Worlds

ACM CHI 2020 Honorable Mention

Abstract

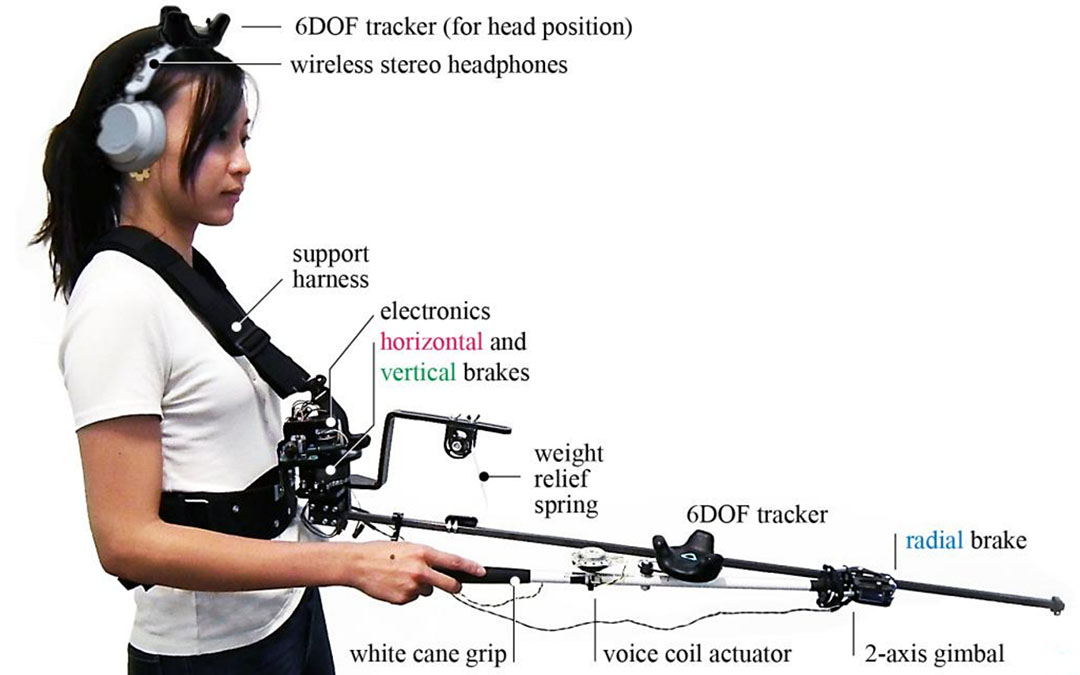

Current Virtual Reality (VR) technologies focus on rendering visuospatial effects and are therefore inaccessible to blind or low-vision users. We examine a novel white cane controller that enables navigation, without vision, of large virtual environments with complex architecture, such as winding paths and occluding walls and doors. The cane controller employs a lightweight three-axis brake mechanism to convey the large-scale shape of virtual objects. Its multiple degrees of freedom enable users to adapt the controller to their preferred techniques and grip. In addition, surface textures are rendered with a voice coil actuator based on contact vibrations, and spatialized audio is determined by how sound propagates through the geometry around the user. We design a scavenger hunt game that demonstrates how our device enables blind users to navigate a complex virtual environment. Seven of eight users successfully navigated the virtual room (6 × 6 m) to locate targets while avoiding collisions. We conclude with design considerations for creating immersive non-visual VR experiences based on user preferences for cane techniques and cane material properties.
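
To make the idea of geometry-aware audio more concrete, the following is a minimal illustrative sketch and not the implementation used in the paper. It assumes the room is discretized into a 2D grid, approximates the path that sound takes around occluding walls with a breadth-first search, and maps the resulting path length to a loudness gain with a simple inverse-distance model. The names `propagation_distance` and `gain_from_distance`, the grid encoding, and the 0.5 m cell size are all hypothetical choices made for this example.

```python
from collections import deque


def propagation_distance(grid, source, listener):
    """Return the shortest open-cell path length (in cells) from source to listener.

    grid is a list of equal-length strings; '#' marks a wall cell and any
    other character is open space. Returns None if the listener is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    queue = deque([(source, 0)])
    seen = {source}
    while queue:
        (r, c), dist = queue.popleft()
        if (r, c) == listener:
            return dist
        # Expand to the four axis-aligned neighbours that are inside the grid,
        # not walls, and not yet visited.
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != '#' and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append(((nr, nc), dist + 1))
    return None


def gain_from_distance(distance_cells, cell_size_m=0.5):
    """Map a path length to a gain in [0, 1] (assumed inverse-distance model)."""
    if distance_cells is None:
        return 0.0  # fully occluded: no audible path
    return 1.0 / max(1.0, distance_cells * cell_size_m)


# Hypothetical room: a wall in column 2 blocks the direct path along row 0.
room = [
    "..#..",
    "..#..",
    "..#..",
    ".....",
]
cells = propagation_distance(room, (0, 0), (0, 4))
print(cells, gain_from_distance(cells))
```

In this toy room the source and listener are only four cells apart in a straight line, but the sound has to travel around the wall, so the path length used for attenuation is longer than the straight-line distance. A real system would also need direction cues and filtering, which this sketch leaves out.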

Reference

Alexa Fay Siu, Mike Sinclair, Robert Kovacs, Eyal Ofek, Christian Holz, and Edward Cutrell. Virtual Reality Without Vision: A Haptic and Auditory White Cane to Navigate Complex Virtual Worlds. In Proceedings of ACM CHI 2020.