Contimask

Explaining Irregular Time Series via Perturbations in Continuous Time

NeurIPS 2025

Abstract

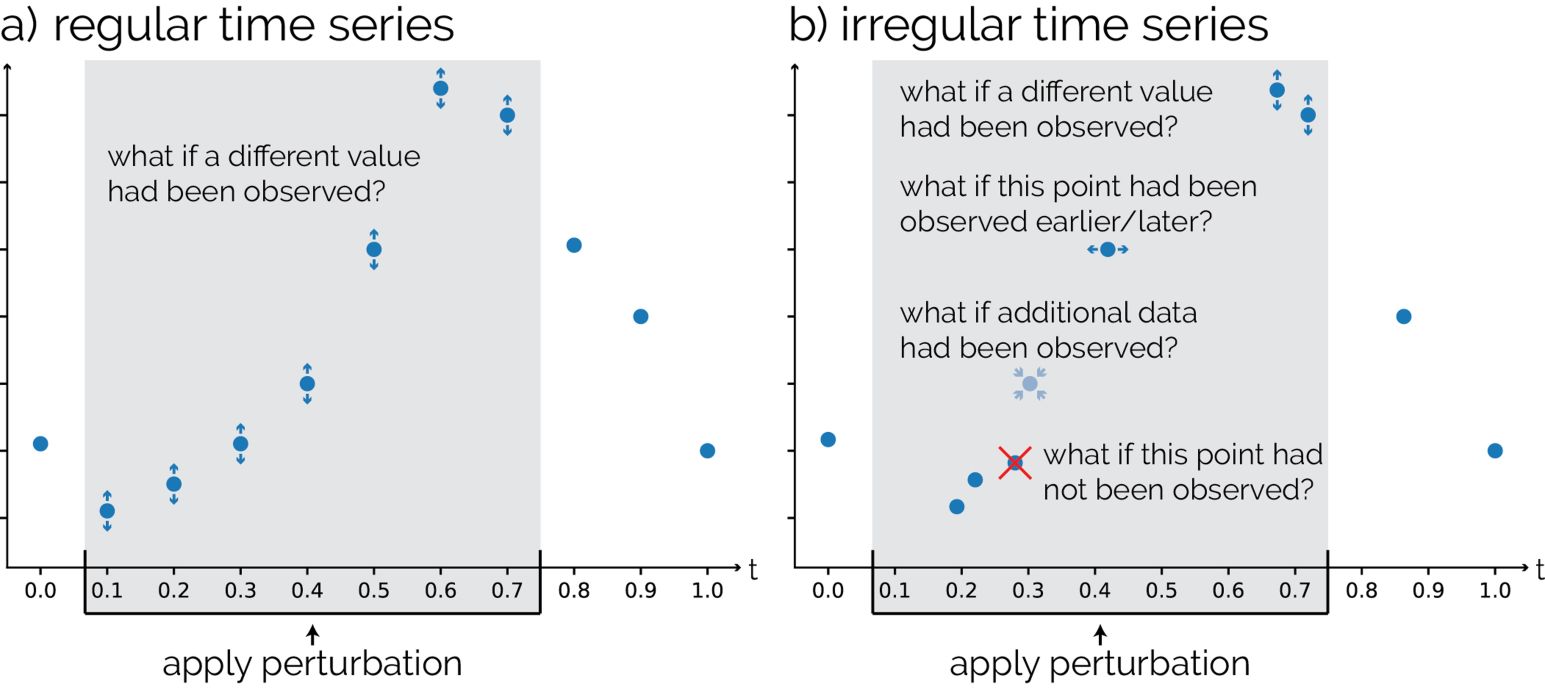

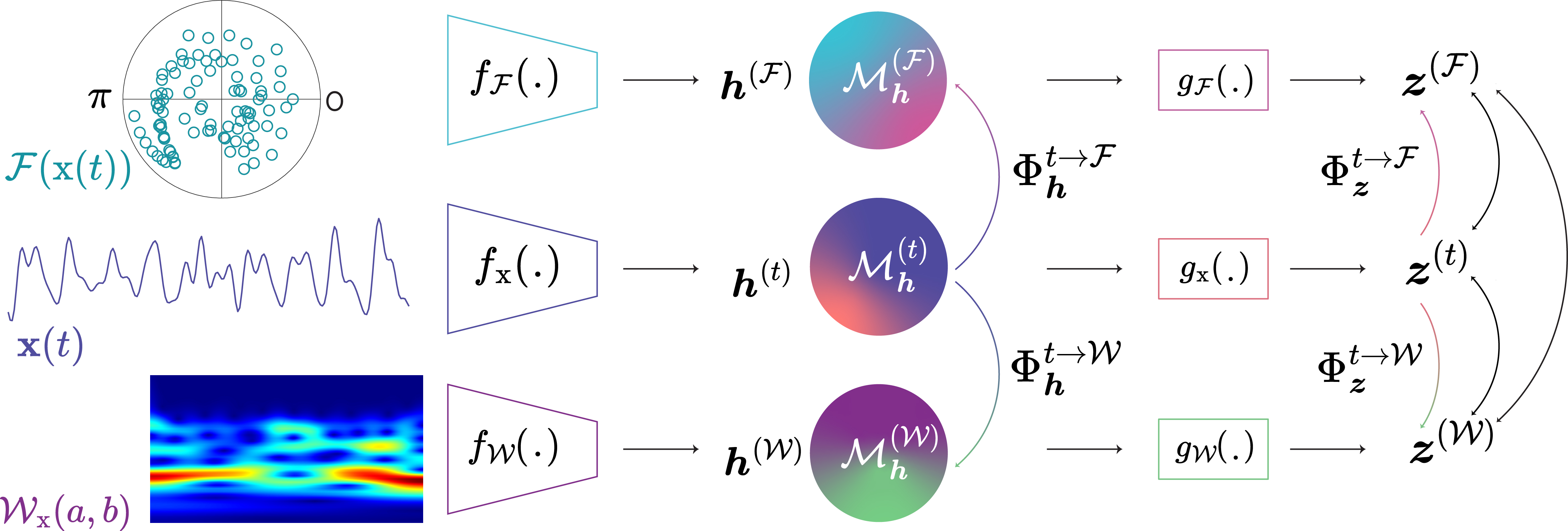

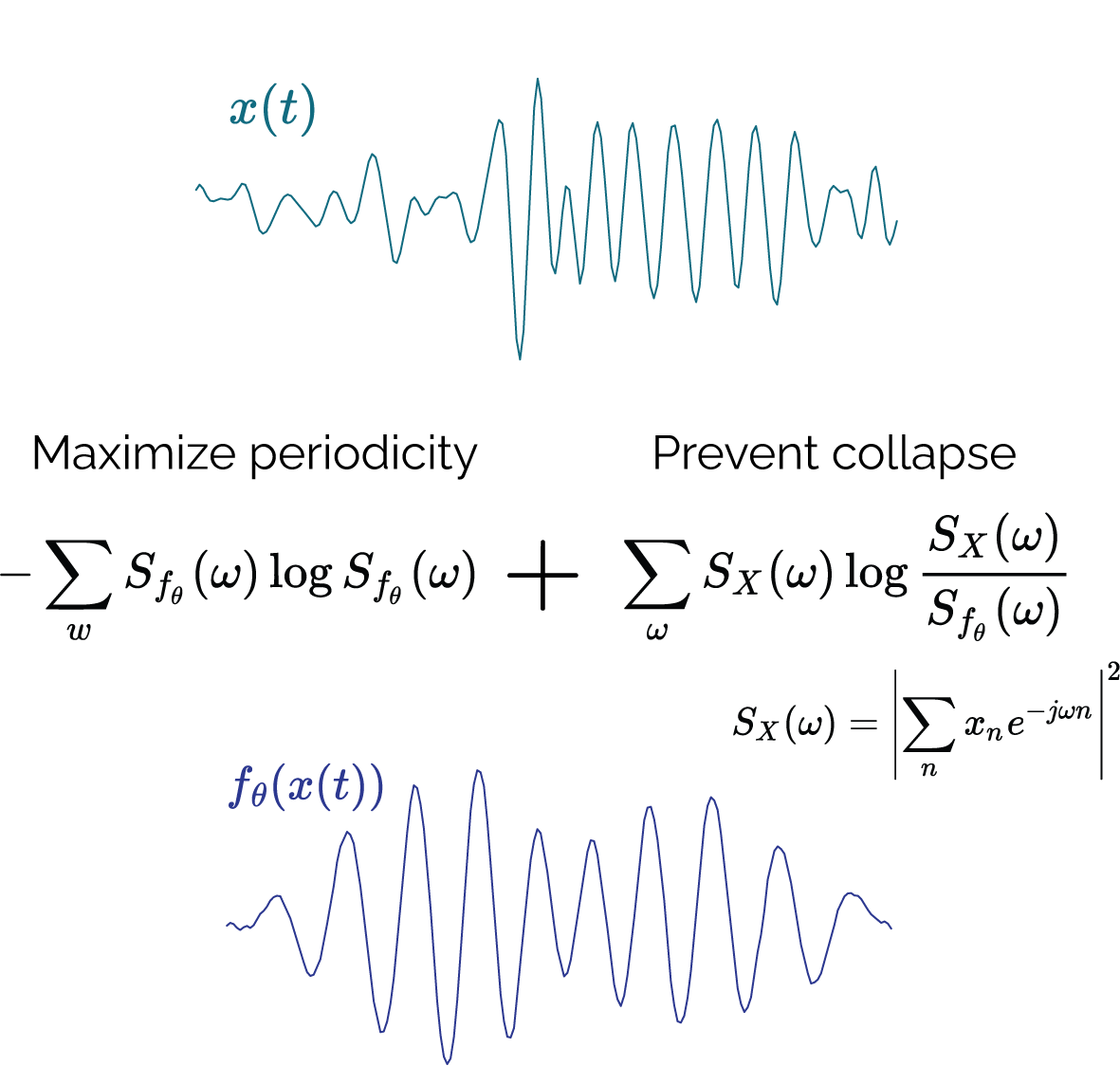

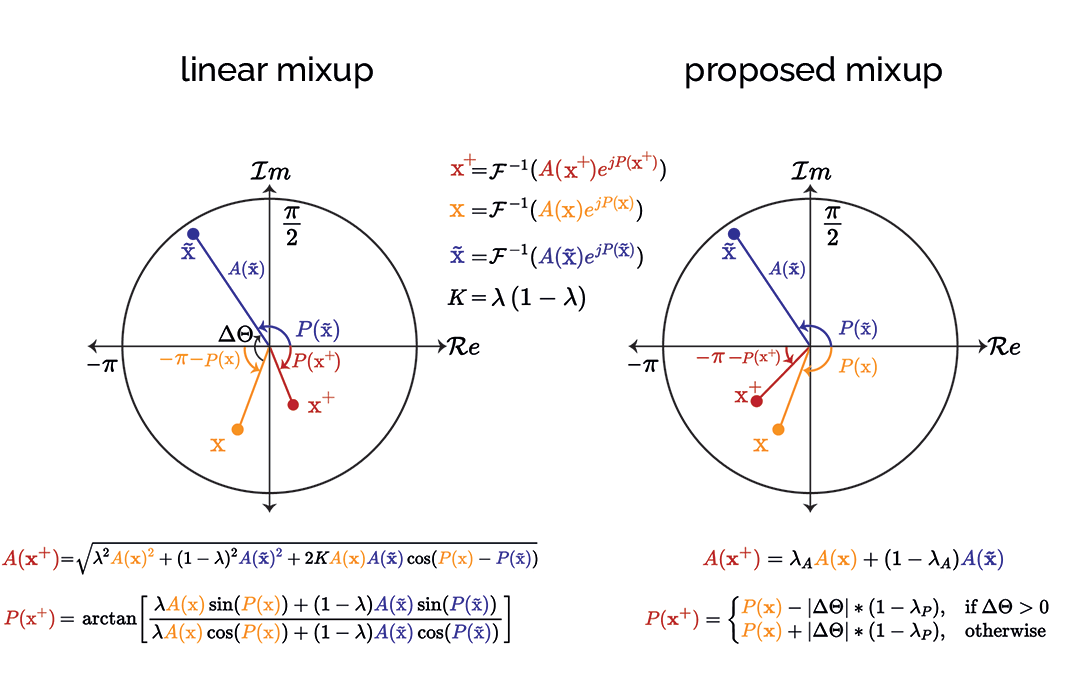

Explaining black-box models for time series data is critical for the wide-scale adoption of deep learning techniques across domains such as healthcare. Recently, explainability methods for deep time series models have seen significant progress by adopting saliency methods that perturb masked segments of time series to uncover their importance towards the prediction of black-box models. Thus far, such methods have been largely restricted to regular time series. Irregular time series, however, sampled at irregular time intervals and potentially with missing values, are the dominant form of time series in various critical domains (e.g., hospital records). In this paper, we conduct the first evaluation of saliency methods for the interpretation of irregular time series models. We first translate techniques for regular time series into the continuous-time realm of irregular time series and show under which circumstances such techniques are still applicable. However, existing perturbation techniques neglect the timing and structure of observed data, e.g., informative missingness when data is not missing at random. Thus, we propose Contimask, a simple framework that uses NeuroEvolution to also apply non-differentiable perturbations, such as simulating that parts of the data had not been observed. In doing so, we are the first to successfully detect saliency that is independent of the value of observed data, and we achieve significant improvements in interpreting irregular time series models on real-world data where 90% of the data is missing.
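To make the distinction in the abstract concrete, the following is a minimal sketch, not the authors' implementation, contrasting a value-based perturbation with one that simulates observations not having been recorded. The names `toy_model`, `value_perturbation`, and `drop_perturbation`, the zero baseline, and the toy scoring rule are all assumptions chosen for illustration; the mask is fixed by hand rather than optimized.

```python
import numpy as np

def toy_model(times, values):
    """Hypothetical black box: its score depends on the observed values
    and on how many observations were recorded at all."""
    if len(times) == 0:
        return 0.0
    return float(np.mean(values) + 0.5 * len(times))

def value_perturbation(times, values, mask, baseline=0.0):
    # Keep every timestamp; replace masked-out values with a baseline value.
    return times, mask * values + (1 - mask) * baseline

def drop_perturbation(times, values, mask):
    # Remove masked-out observations entirely, as if they had never been observed.
    # Boolean selection like this has no useful gradient with respect to the mask.
    keep = mask.astype(bool)
    return times[keep], values[keep]

# Irregularly sampled series whose values all equal the baseline.
times = np.array([0.0, 0.7, 0.9, 3.2, 7.5])
values = np.zeros(5)
mask = np.array([1, 1, 0, 0, 1])  # 0 marks observations to perturb

reference = toy_model(times, values)
delta_value = reference - toy_model(*value_perturbation(times, values, mask))
delta_drop = reference - toy_model(*drop_perturbation(times, values, mask))

print(delta_value)  # change in score under the value-based perturbation
print(delta_drop)   # change in score when the observations are removed
```

In this toy setting the value-based perturbation leaves the score unchanged because the values already equal the baseline, while removing the observations changes it. That is the kind of saliency tied to whether data was observed rather than to its value. Because removal is a discrete operation, a gradient-free search over masks is one plausible way to optimize it.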

Reference

Max Moebus, Bjoern Braun, and Christian Holz. Contimask: Explaining Irregular Time Series via Perturbations in Continuous Time. In Conference on Neural Information Processing Systems 2025 (NeurIPS).