Shifting the Paradigm

A Diffeomorphism Between Time Series Data Manifolds for Achieving Shift-Invariancy in Deep Learning

ICLR 2025

Abstract

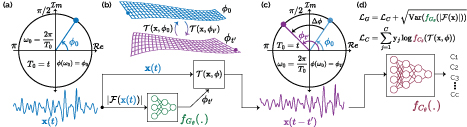

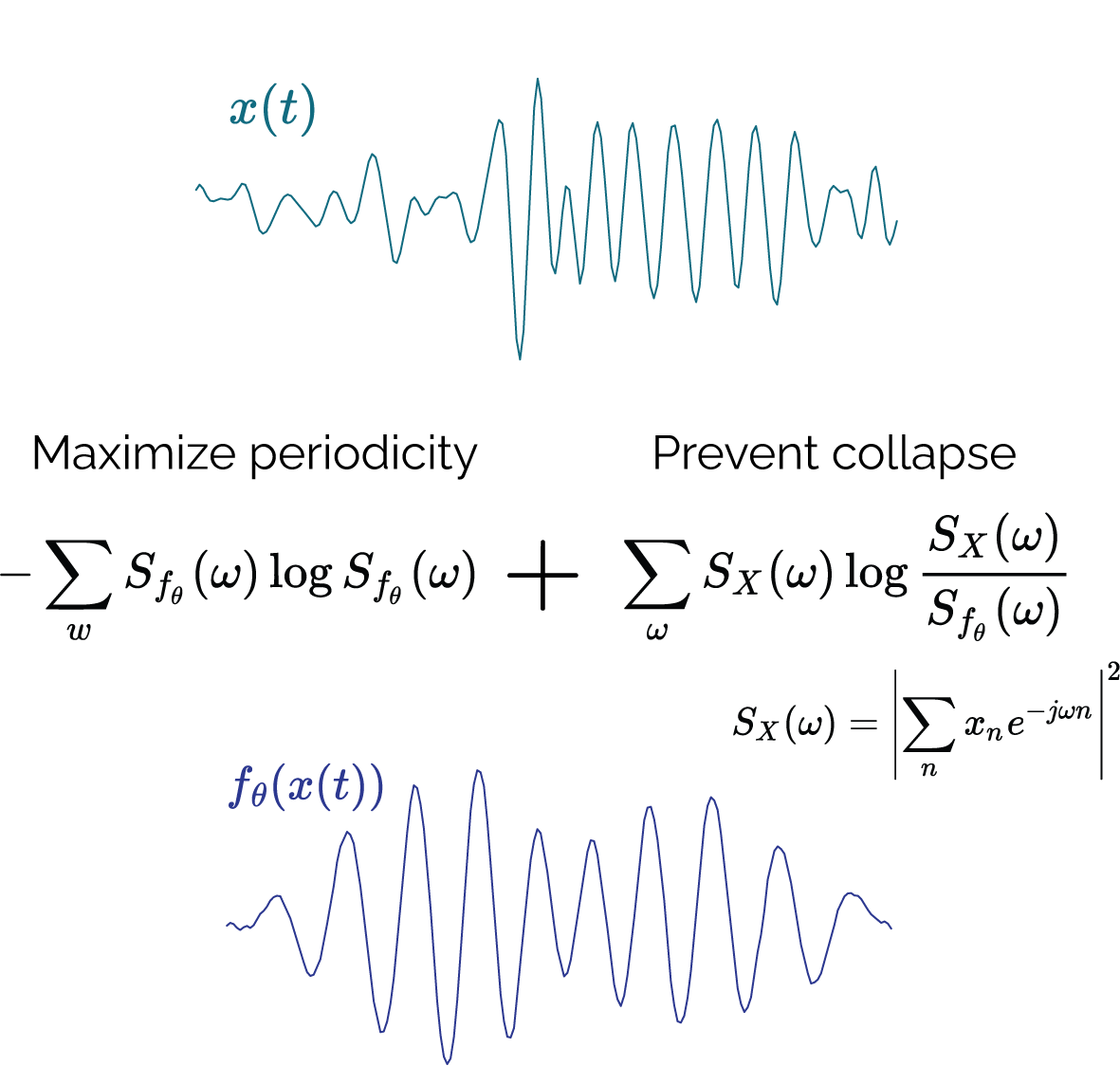

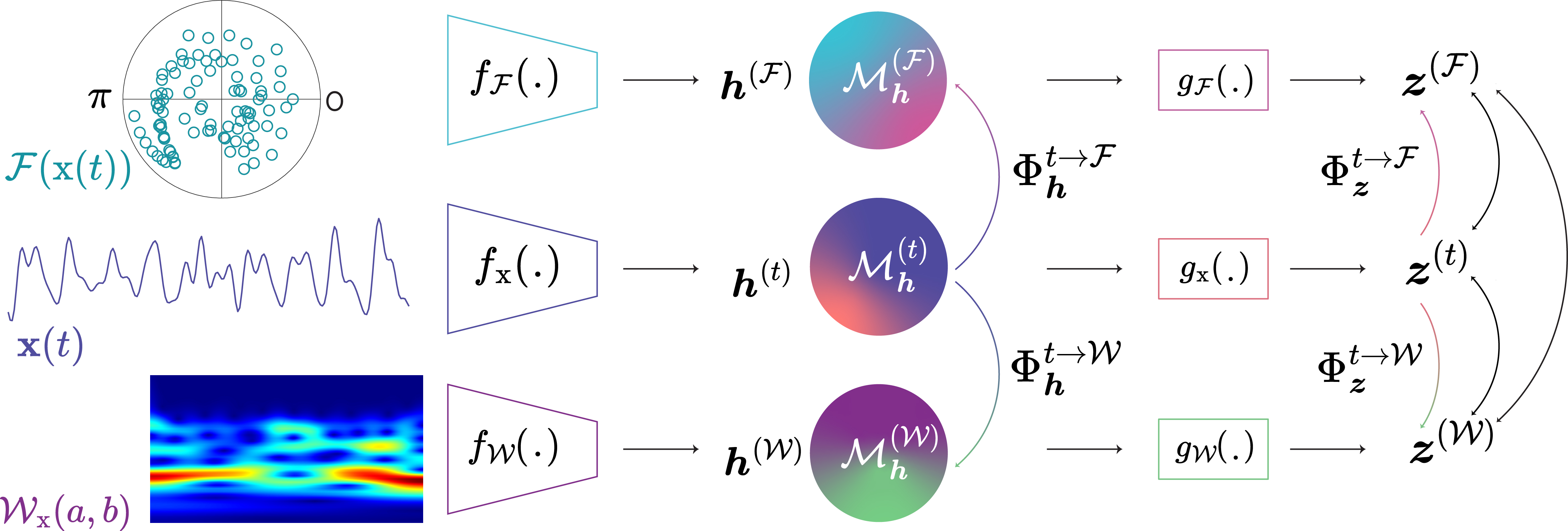

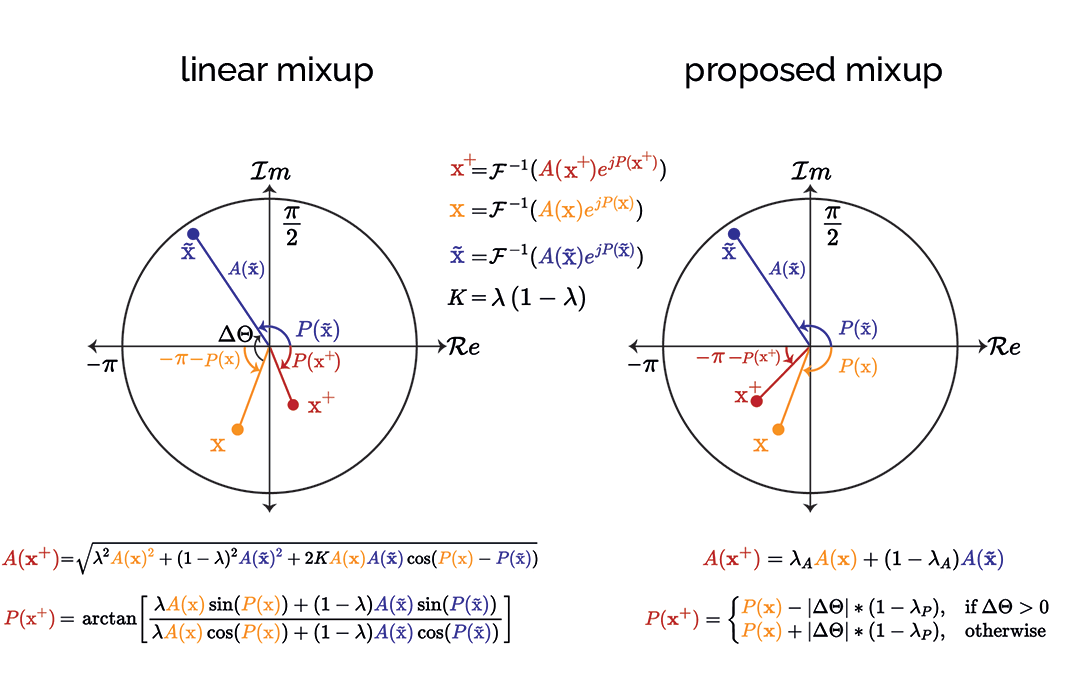

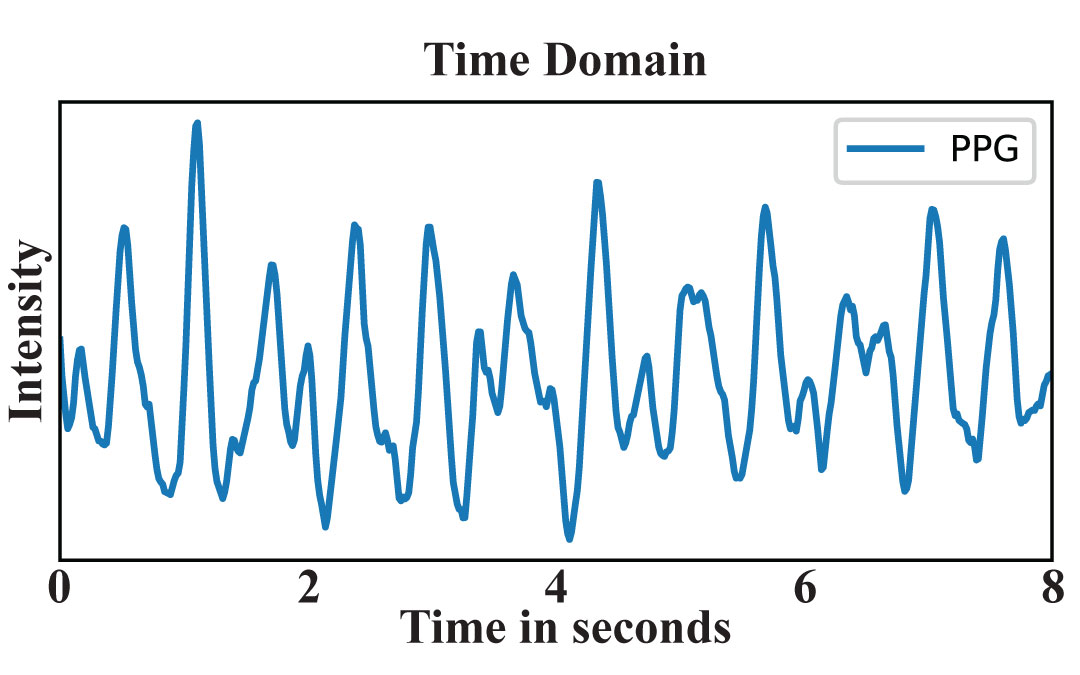

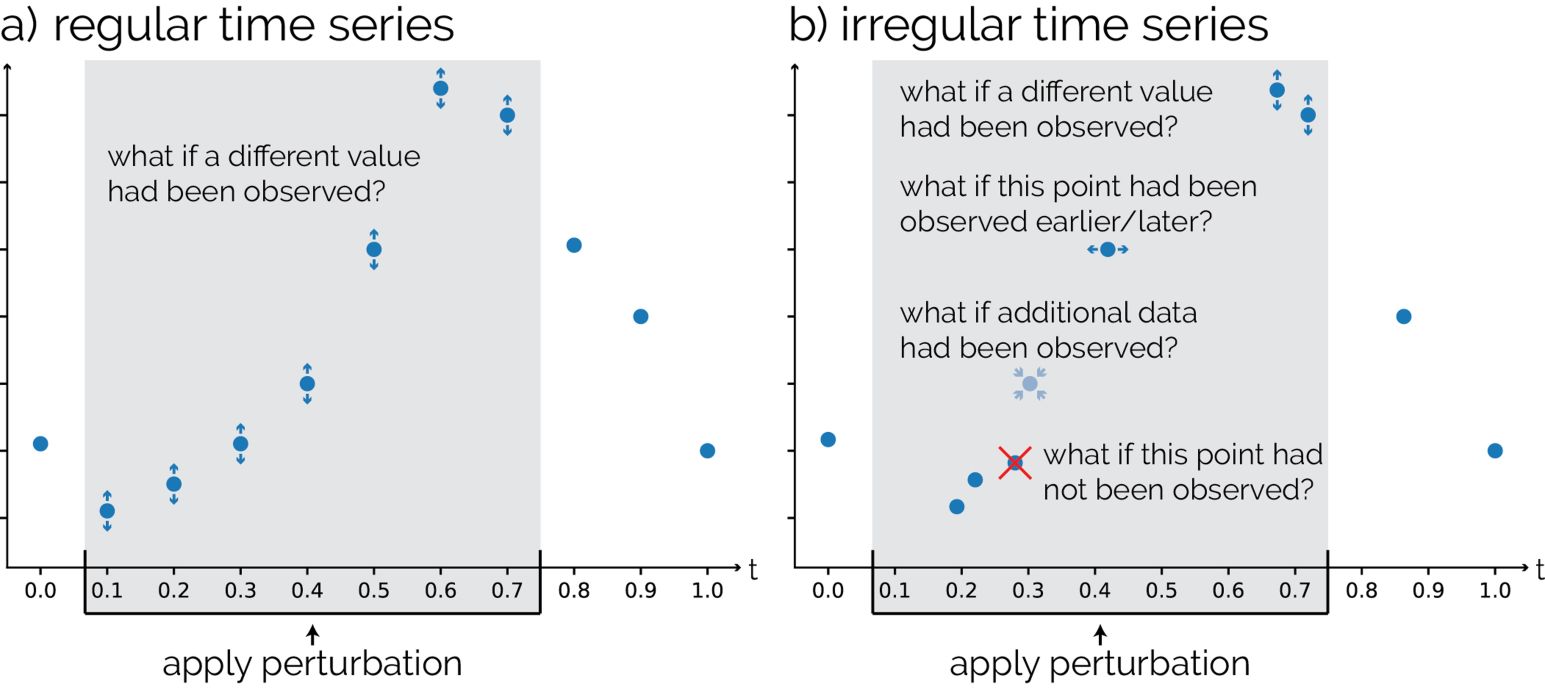

Deep learning models lack shift-invariance: a shift in the input can change the output. While recent techniques seek to address this for images, our findings show that these approaches fail to provide shift-invariance in time series, where the data generation mechanism is more challenging due to the interaction of low and high frequencies. Worse, they also decrease performance across several tasks. In this paper, we propose a novel differentiable bijective function that maps samples from their high-dimensional data manifold to another manifold of the same dimension, without any dimensionality reduction. Our approach guarantees that all randomly shifted versions of a sample are mapped to a unique point in the data manifold while preserving all task-relevant information. We theoretically and empirically demonstrate that the proposed transformation guarantees shift-invariance in deep learning models without imposing any limit on the shift. Our experiments on five time series tasks with state-of-the-art methods show that our proposed approach consistently improves performance while enabling models to achieve complete shift-invariance without modifying or imposing restrictions on the model’s topology. The source code is available on GitHub.
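To make the idea of mapping every shifted copy of a sample to one point more concrete, here is a minimal sketch in Python with NumPy. It is not the authors' implementation. It assumes circular shifts of a 1-D real signal with a nonzero first Fourier harmonic, and it canonicalizes the signal by rotating that harmonic to zero phase. The function name `canonicalize` and the odd signal length are illustrative choices.

```python
import numpy as np


def canonicalize(x: np.ndarray) -> np.ndarray:
    """Circularly shift a 1-D real signal so its first harmonic has zero phase.

    Illustrative assumption: shifts are circular and the first harmonic
    of x is nonzero, so its phase is well defined.
    """
    n = x.shape[-1]
    spectrum = np.fft.rfft(x)
    phase = np.angle(spectrum[1])  # phase of the first harmonic
    k = np.arange(spectrum.shape[-1])
    # A circular delay of s samples multiplies bin k by exp(-2j*pi*k*s/n),
    # so the first-harmonic phase drops by 2*pi*s/n. Multiplying bin k by
    # exp(-1j*k*phase) cancels that factor for every integer k.
    aligned = spectrum * np.exp(-1j * k * phase)
    return np.fft.irfft(aligned, n=n)


rng = np.random.default_rng(0)
x = rng.standard_normal(101)  # odd length: no Nyquist bin to special-case
y = canonicalize(x)

# Every circularly shifted copy of x lands on the same canonical signal.
for shift in (1, 17, 50):
    assert np.allclose(y, canonicalize(np.roll(x, shift)))
```

Under these assumptions, any model applied to `canonicalize(x)` gives the same output for all circular shifts of `x`, with no change to the model itself. Because each frequency bin is only multiplied by a unit-modulus factor, the output has the same length and energy as the input.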

Reference

Berken Utku Demirel and Christian Holz. Shifting the Paradigm: A Diffeomorphism Between Time Series Data Manifolds for Achieving Shift-Invariancy in Deep Learning. In International Conference on Learning Representations (ICLR), 2025.