Data Miming

Inferring Spatial Object Descriptions from Human Gesture

ACM CHI 2011

Abstract

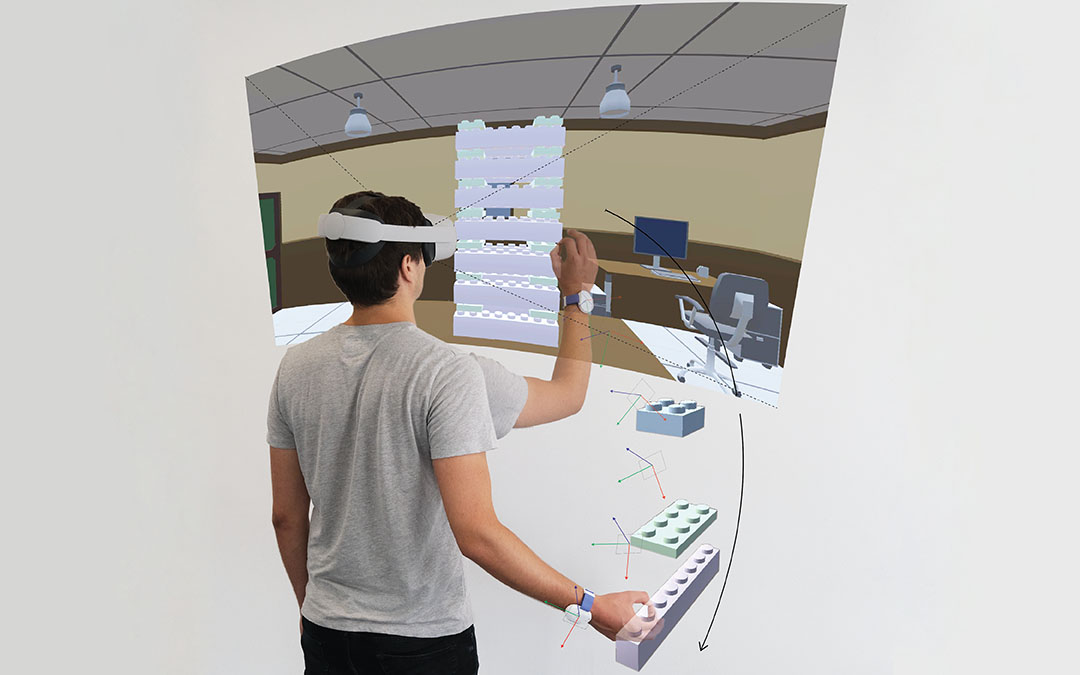

Speakers often use hand gestures when talking about or describing physical objects. Such gestures are particularly useful when the speaker is conveying distinctions of shape that are difficult to describe verbally. We present data miming: an approach to making sense of gestures as they are used to describe concrete physical objects. We first observe participants as they use gestures to describe real-world objects to another person. From these observations, we derive the data miming approach, which is based on a voxel representation of the space traced by the speaker's hands over the duration of the gesture. In a final proof-of-concept study, we demonstrate a prototype that matches this input voxel representation against a database of known physical objects to select the object being described.
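
The abstract does not spell out how the voxel representation is built or matched. The following Python code is a minimal sketch of the general idea under stated assumptions. Hand positions are assumed to arrive as 3-D samples, each sample marks the voxel that contains it, and candidate objects are ranked by the Jaccard overlap (intersection over union) of their voxel sets. The names `voxelize`, `jaccard`, and `best_match`, the `voxel_size` parameter, the translation-only normalization, and the Jaccard score are all hypothetical choices made for illustration. They are not the matching procedure from the paper.

```python
import math
from typing import Dict, Iterable, Sequence, Set, Tuple

Voxel = Tuple[int, int, int]


def voxelize(points: Iterable[Sequence[float]], voxel_size: float) -> Set[Voxel]:
    """Return the set of voxels touched by any tracked hand sample.

    Assumption (hypothetical): the gesture is normalized for position only,
    by shifting all samples so the minimum corner of their bounding box
    sits at the origin. Rotation and scale are ignored.
    """
    pts = [tuple(map(float, p)) for p in points]
    if not pts:
        return set()
    mins = [min(p[i] for p in pts) for i in range(3)]
    return {
        (
            math.floor((p[0] - mins[0]) / voxel_size),
            math.floor((p[1] - mins[1]) / voxel_size),
            math.floor((p[2] - mins[2]) / voxel_size),
        )
        for p in pts
    }


def jaccard(a: Set[Voxel], b: Set[Voxel]) -> float:
    """Overlap of two voxel sets: |a & b| / |a | b| (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def best_match(query: Set[Voxel], database: Dict[str, Set[Voxel]]) -> str:
    """Name of the database object whose voxels overlap the query the most."""
    return max(database, key=lambda name: jaccard(query, database[name]))


# Hypothetical hand samples (arbitrary units, 1 voxel = 1 unit):
# a flat, wide sweep and a tall, thin one.
tray = [(x + 0.5, 0.5, z + 0.5) for x in range(4) for z in range(4)]
pole = [(0.5, y + 0.5, 0.5) for y in range(4)]
database = {"tray": voxelize(tray, 1.0), "pole": voxelize(pole, 1.0)}

# A smaller flat sweep performed elsewhere in the room.
query = voxelize([(10.0 + x, 20.0, 30.0 + z) for x in range(3) for z in range(3)], 1.0)
print(best_match(query, database))  # tray
```

Here the query covers 9 of the tray's 16 voxels (overlap 9/16) but shares only one voxel with the pole (overlap 1/12), so the tray is selected. A practical system would also have to account for the orientation and scale of the described object, which this sketch deliberately leaves out.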

Reference

Christian Holz and Andrew D. Wilson. Data Miming: Inferring Spatial Object Descriptions from Human Gesture. In Proceedings of ACM CHI 2011.