Sensing Posture-Aware Pen+Touch Interaction on Tablets

ACM CHI 2019 Honorable Mention

Abstract

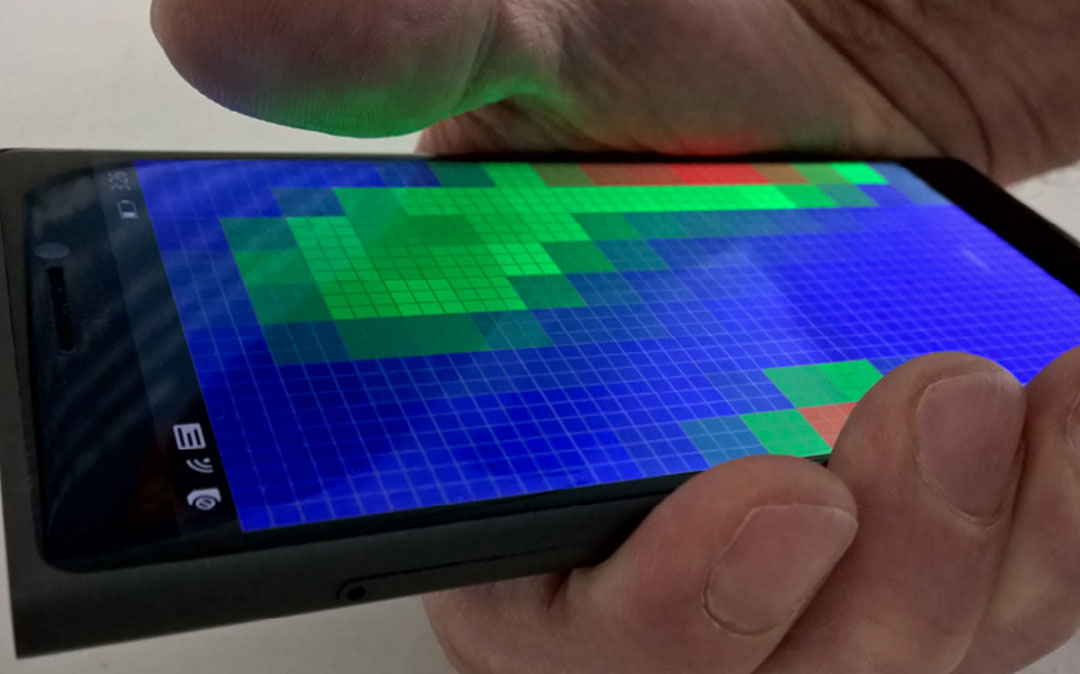

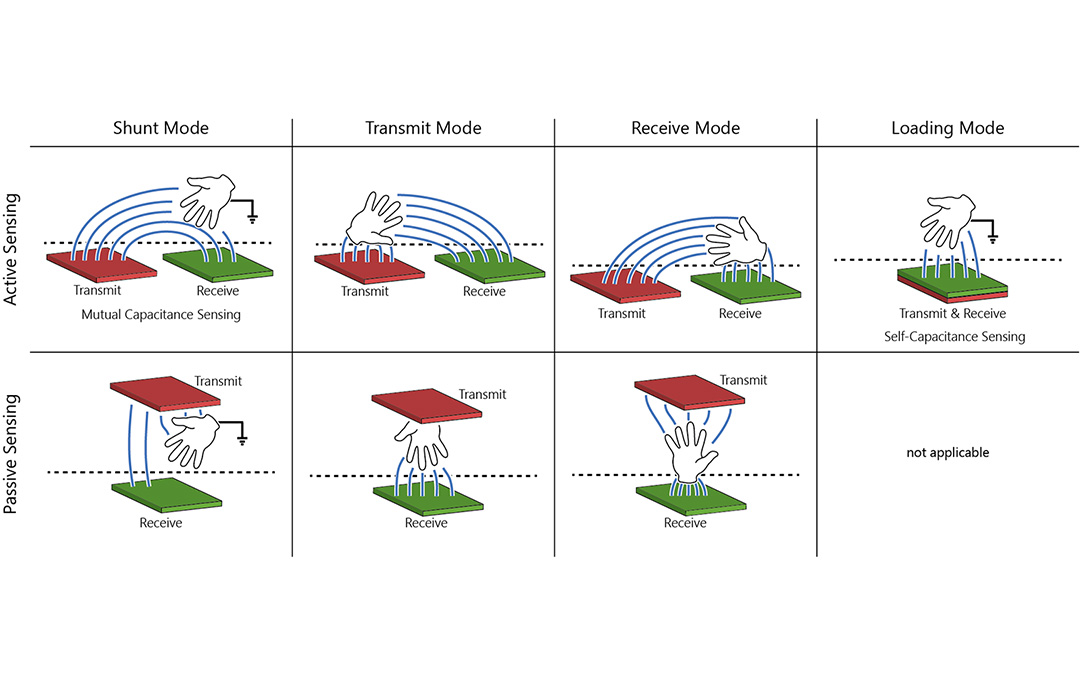

Many status-quo interfaces for tablets with pen+touch input force users to reach for device-centric UI widgets at fixed locations, rather than sensing and adapting to the user's posture. To address this problem, we propose sensing techniques that transition between various nuances of mobile and stationary use via postural awareness. These postural nuances include shifting hand grips, varying screen angle and orientation, planting the palm while writing or sketching, and the direction from which the hands approach. To achieve this, our system combines three sensing modalities: 1) raw capacitance touchscreen images, 2) inertial motion, and 3) electric field sensors around the screen bezel for grasp and hand proximity detection. We show how these sensors enable posture-aware pen+touch techniques that adapt interaction and morph user interface elements to suit fine-grained contexts of body-, arm-, hand-, and grip-centric frames of reference.
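To make the idea of combining the three modalities concrete, here is a minimal rule-based sketch in Python. It is not the system described in the paper. The `SensorFrame` type, the field names, the thresholds, and the `menu_side` rule are all hypothetical, chosen only to show how a capacitance image, an inertial tilt reading, and bezel electric-field signals might be fused into a single posture context.

```python
from dataclasses import dataclass
from typing import Dict, Sequence, Union


@dataclass
class SensorFrame:
    """One hypothetical snapshot of the three modalities (all names are assumptions)."""
    touch_image: Sequence[Sequence[float]]  # raw capacitance image, values in [0, 1]
    tilt_deg: float                         # screen angle from horizontal (inertial)
    bezel_left: float                       # electric-field signal at left bezel, [0, 1]
    bezel_right: float                      # electric-field signal at right bezel, [0, 1]


def palm_planted(touch_image: Sequence[Sequence[float]],
                 threshold: float = 0.5, min_cells: int = 6) -> bool:
    """Guess that a palm is resting if many cells exceed the threshold.

    A fingertip activates few cells, a palm activates many; both numbers
    here are illustrative, not measured values.
    """
    active = sum(1 for row in touch_image for v in row if v > threshold)
    return active >= min_cells


def grip_side(frame: SensorFrame, grip_threshold: float = 0.6) -> str:
    """Classify the grasp as 'left', 'right', 'both', or 'none' from bezel signals."""
    left = frame.bezel_left > grip_threshold
    right = frame.bezel_right > grip_threshold
    if left and right:
        return "both"
    if left:
        return "left"
    if right:
        return "right"
    return "none"


def infer_context(frame: SensorFrame,
                  flat_below_deg: float = 15.0) -> Dict[str, Union[str, bool]]:
    """Fuse the three readings into a small posture-context dictionary."""
    grip = grip_side(frame)
    # Assumed rule: an ungripped, nearly flat tablet is treated as stationary use.
    stationary = grip == "none" and frame.tilt_deg < flat_below_deg
    # Assumed rule: put thumb-reachable tools on the side of a single gripping hand.
    menu_side = grip if grip in ("left", "right") else "center"
    return {
        "grip": grip,
        "stationary": stationary,
        "palm_planted": palm_planted(frame.touch_image),
        "menu_side": menu_side,
    }


if __name__ == "__main__":
    empty_image = [[0.0] * 4 for _ in range(4)]
    held = SensorFrame(empty_image, tilt_deg=40.0, bezel_left=0.8, bezel_right=0.1)
    print(infer_context(held))
```

A real system would likely replace these fixed thresholds with calibrated or learned models, but the shape of the pipeline is the same: per-modality features are combined into one context that the interface can adapt to.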

Reference

Yang Zhang, Michel Pahud, Christian Holz, Haijun Xia, Gierad Laput, Michael McGuffin, Xiao Tu, Andrew Mittereder, Fei Su, William Buxton, and Ken Hinckley. Sensing Posture-Aware Pen+Touch Interaction on Tablets. In Proceedings of ACM CHI 2019.