egoPPG

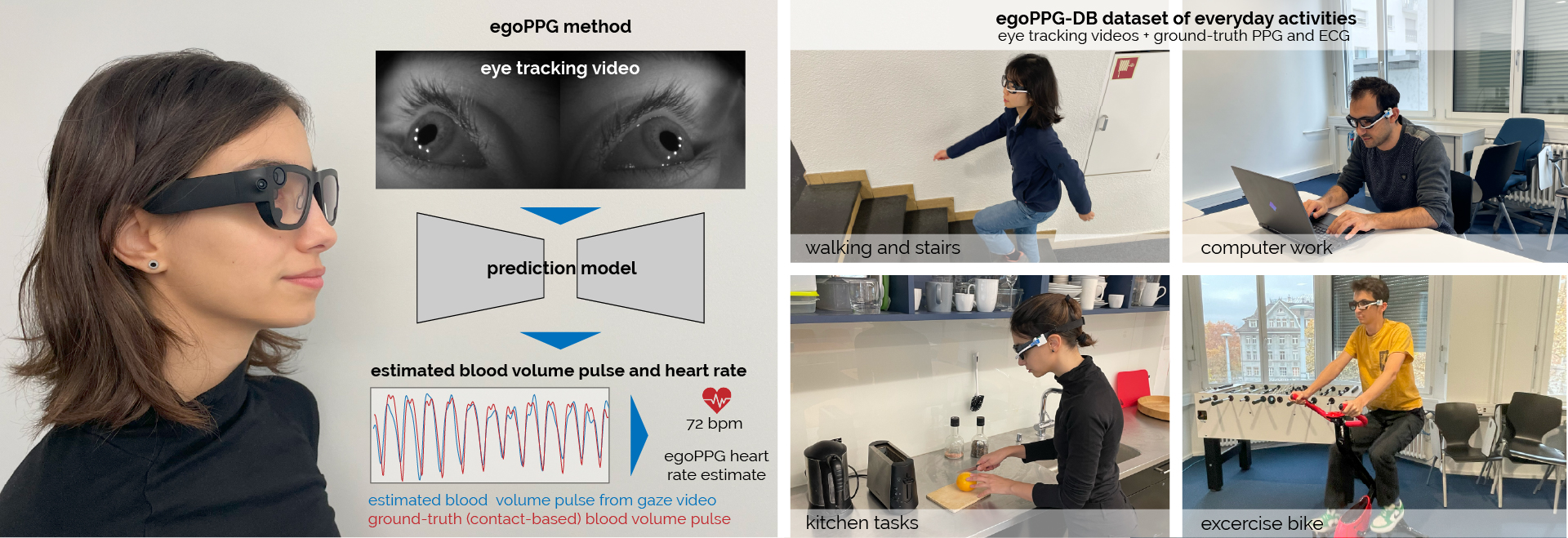

Heart Rate Estimation from Eye-Tracking Cameras in Egocentric Systems to Benefit Downstream Vision Tasks

ICCV 2025

Abstract

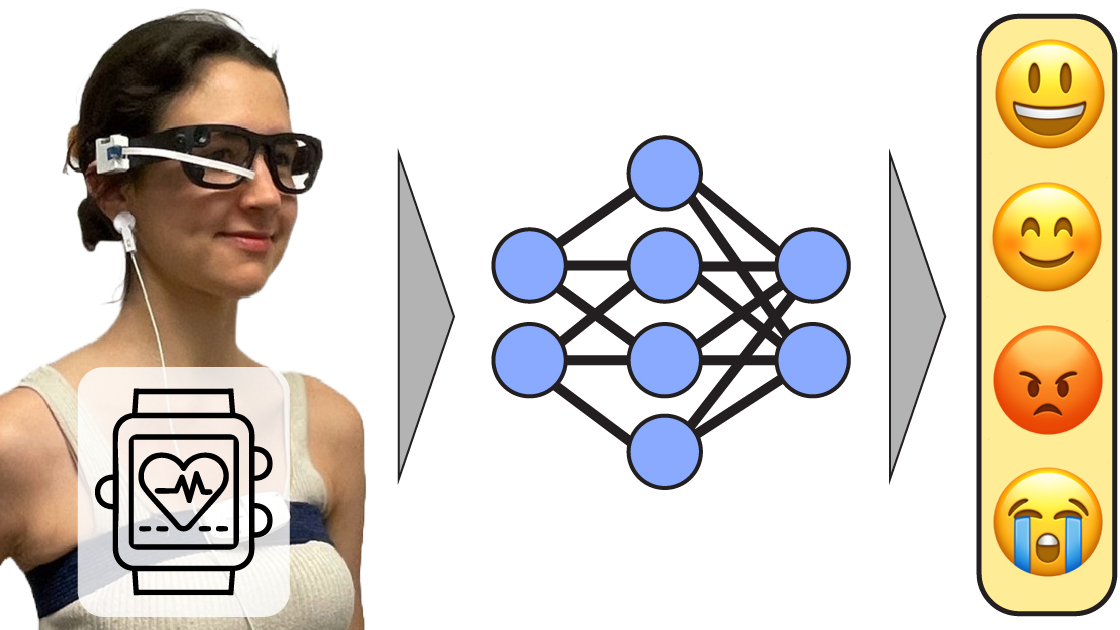

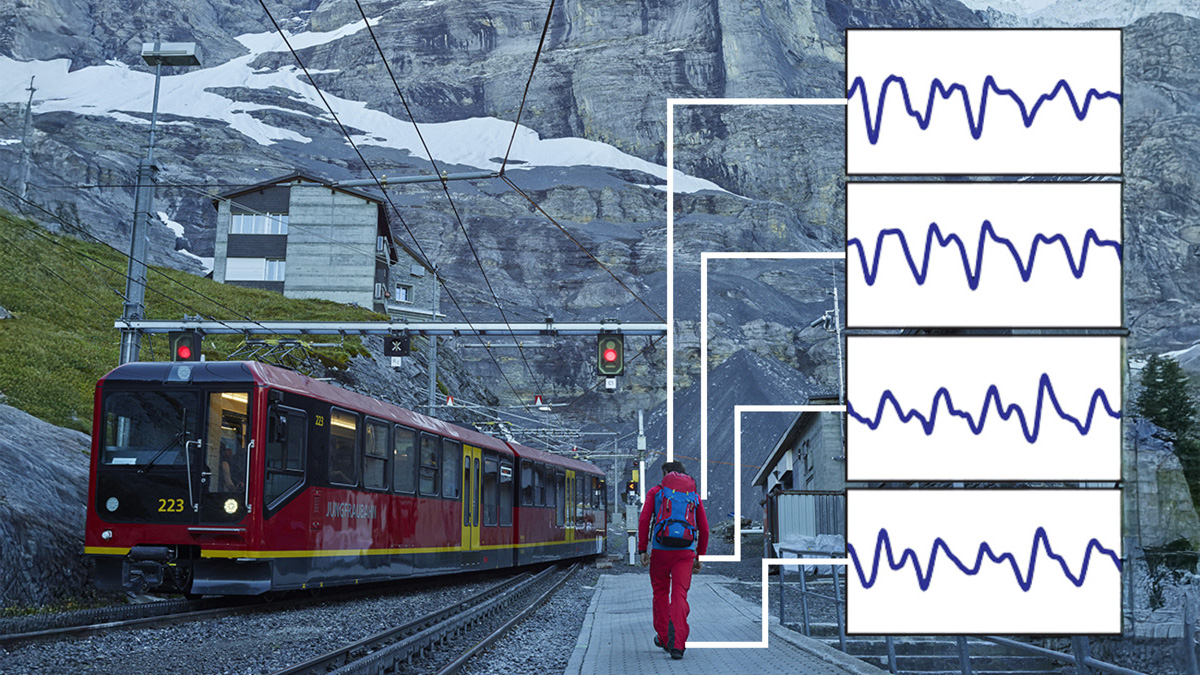

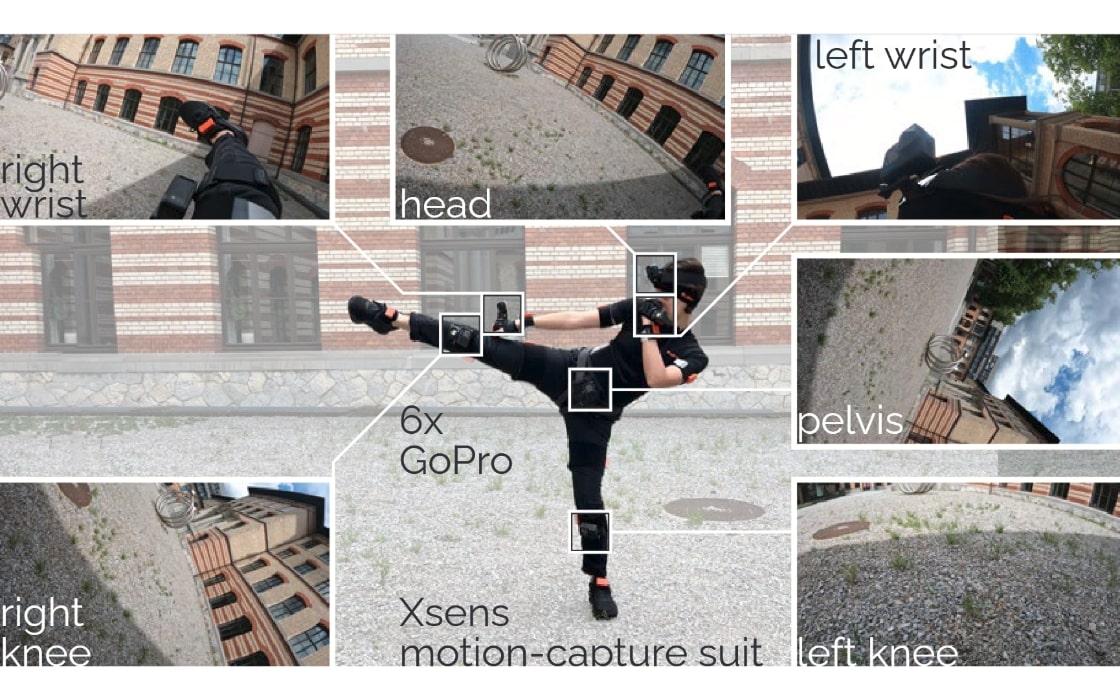

Egocentric vision systems aim to understand the spatial surroundings of the wearer and the wearer's behavior within them, including motions, activities, and interactions. We argue that egocentric systems must additionally detect physiological states to capture a person's attention and situational responses, which are critical for context-aware behavior modeling. In this paper, we propose egoPPG, a novel vision task for egocentric systems: recovering a person's cardiac activity to aid downstream vision tasks. We introduce PulseFormer, a method that extracts heart rate (HR), a key indicator of physiological state, from the eye-tracking cameras of unmodified egocentric vision systems. PulseFormer continuously estimates the photoplethysmogram (PPG) from the areas around the eyes and fuses motion cues from the headset's inertial measurement unit (IMU) to track HR values. We demonstrate egoPPG's downstream benefit for a key task on EgoExo4D, an existing egocentric dataset, where PulseFormer's HR estimates improve proficiency estimation by 14%. To train and validate PulseFormer, we collected a dataset of more than 13 hours of eye-tracking videos from Project Aria glasses, together with contact-based PPG signals and an electrocardiogram (ECG) for ground-truth HR values. As in EgoExo4D, the 25 participants performed diverse everyday activities such as office work, cooking, dancing, and exercising, which induced significant natural motion and HR variation (44–164 bpm). Our model robustly estimates HR (mean absolute error, MAE = 7.67 bpm) and captures HR patterns (r = 0.85). Our results show how egocentric systems may unify environmental and physiological tracking to better understand users, and that egoPPG, as a complementary task, provides meaningful augmentations for existing datasets and tasks. We release our code, dataset, and HR augmentations for EgoExo4D to inspire research on physiology-aware egocentric tasks.
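The abstract reports HR accuracy as a mean absolute error in bpm and as a correlation coefficient r. As a minimal sketch, assuming r denotes Pearson's correlation and that predicted and reference HR values are already aligned window by window, both metrics can be computed as below. The function name `hr_metrics` and the toy values are hypothetical and are not taken from the released code or dataset.

```python
import numpy as np


def hr_metrics(hr_pred, hr_true):
    """Return (MAE in bpm, Pearson r) between estimated and reference HR series.

    Assumes both series are aligned, i.e. element i of each refers to the
    same time window.
    """
    hr_pred = np.asarray(hr_pred, dtype=float)
    hr_true = np.asarray(hr_true, dtype=float)
    if hr_pred.shape != hr_true.shape:
        raise ValueError("prediction and reference must have the same shape")
    mae = float(np.mean(np.abs(hr_pred - hr_true)))
    r = float(np.corrcoef(hr_pred, hr_true)[0, 1])
    return mae, r


# Toy values for illustration only (not from the egoPPG-DB dataset).
pred = [72.0, 80.0, 95.0, 110.0]
true = [70.0, 84.0, 93.0, 115.0]
mae, r = hr_metrics(pred, true)
print(f"MAE={mae:.2f} bpm, r={r:.3f}")
```

MAE reflects absolute accuracy, while r reflects whether the estimate follows the rises and falls of HR even if it is offset, which is why the two are reported together.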


Reference

Bjoern Braun, Rayan Armani, Manuel Meier, Max Moebus, and Christian Holz. egoPPG: Heart Rate Estimation from Eye-Tracking Cameras in Egocentric Systems to Benefit Downstream Vision Tasks. In International Conference on Computer Vision 2025 (ICCV).

Study Apparatus

Figure 2. Apparatus used to record the egoPPG-DB dataset. We used Project Aria glasses to record eye-tracking videos at 30 fps. To capture the ground-truth PPG measurements used to train our model, we developed a custom sensor that records PPG data offline at 128 Hz. To validate our custom PPG sensor, we also recorded gold-standard ECG data using a movisens ECGMove 4 chest belt sampling at 1024 Hz. We synchronized all devices at the start and end of each recording using a synchronization pattern captured by their built-in IMUs.
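The apparatus combines streams at 30 fps, 128 Hz, and 1024 Hz that are aligned through an IMU synchronization pattern. The sketch below shows one common way such alignment can be done, assuming each device's accelerometer magnitude is available: resample the IMU streams to a shared rate, then take the lag that maximizes their cross-correlation. The helper names, the linear-interpolation resampler, the 100 Hz shared rate, and the synthetic burst are illustrative assumptions, not the authors' pipeline.

```python
import numpy as np


def resample(signal, fs_in, fs_out):
    """Linearly interpolate a 1-D signal onto a uniform time grid at fs_out Hz."""
    signal = np.asarray(signal, dtype=float)
    t_in = np.arange(len(signal)) / fs_in
    t_out = np.arange(0.0, t_in[-1], 1.0 / fs_out)
    return np.interp(t_out, t_in, signal)


def estimate_lag_seconds(ref, other, fs):
    """Lag of `other` relative to `ref` (both at fs Hz) maximizing cross-correlation.

    A positive value means the synchronization pattern appears later in `other`.
    """
    ref = np.asarray(ref, dtype=float)
    other = np.asarray(other, dtype=float)
    ref = (ref - ref.mean()) / ref.std()
    other = (other - other.mean()) / other.std()
    xcorr = np.correlate(other, ref, mode="full")
    # Index 0 of the "full" output corresponds to a lag of -(len(ref) - 1).
    lag_samples = int(np.argmax(xcorr)) - (len(ref) - 1)
    return lag_samples / fs


# Hypothetical accelerometer-magnitude traces at a shared 100 Hz: a short
# shake-like burst at 3 s, seen 0.5 s later by the second device.
fs = 100.0
t = np.arange(1000) / fs
burst = np.exp(-((t - 3.0) ** 2) / 0.01) * np.sin(2 * np.pi * 8 * t)
delayed = np.roll(burst, int(0.5 * fs))
lag = estimate_lag_seconds(burst, delayed, fs)
```

Estimating the lag separately at the start and at the end pattern yields two offsets; a linear mapping between them would also correct a constant clock drift between devices, which is one plausible reason to record the pattern at both ends.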

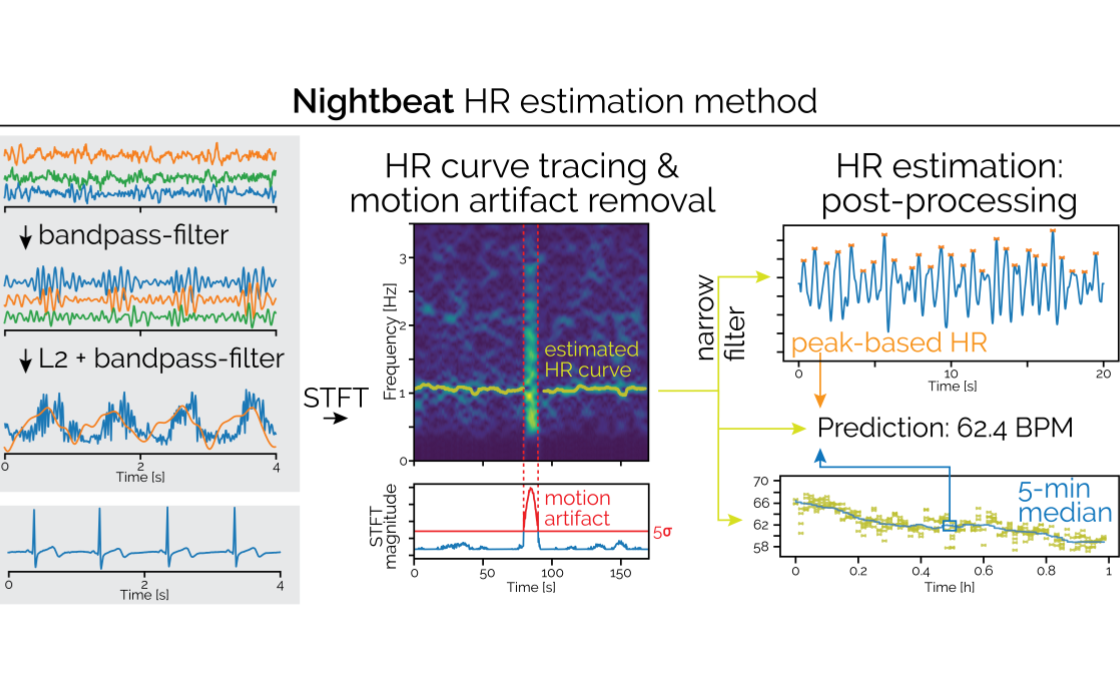

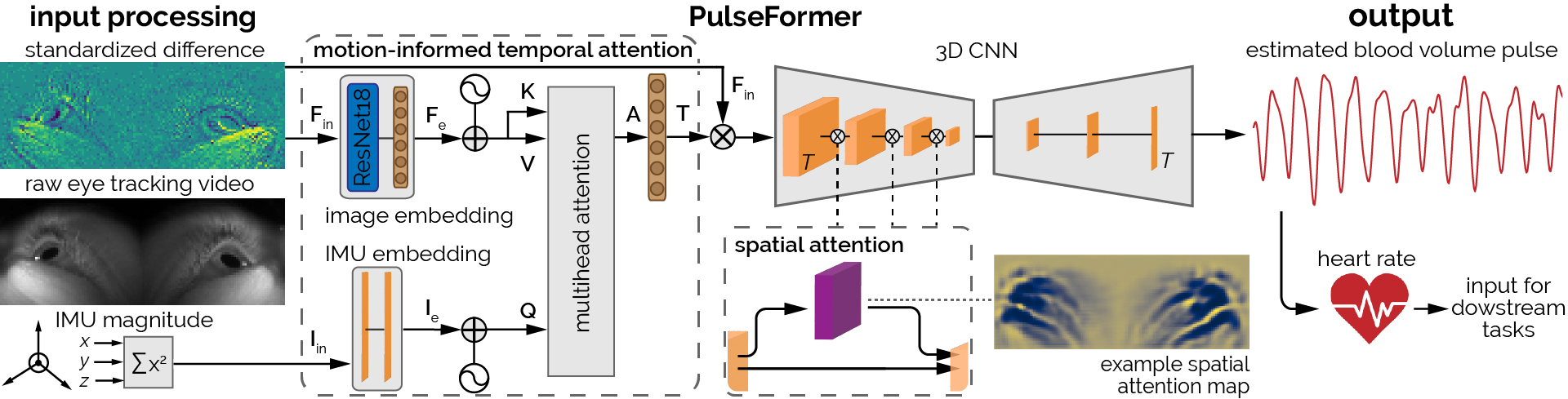

PulseFormer

Figure 3. Architecture of our model for continuous blood volume pulse (BVP) estimation from eye-tracking videos and subsequent HR computation. Our architecture builds on a 3D CNN backbone (PhysNet) with a temporal input length of T = 128 frames (about 4.3 seconds at 30 fps). The input to our network consists of the standardized consecutive frame differences of the eye-tracking videos, which help the network focus on changes between frames. As labels, we use the standardized consecutive differences of the PPG signals. Because egocentric glasses are body-worn and subject to considerable motion artifacts when the user moves, we leverage the IMU within the glasses to obtain a motion-informed temporal attention. Additionally, because the eyes typically move strongly in everyday situations and are closed during blinks, extracting the BVP from the eye regions would introduce substantial motion artifacts. To address this, we introduce spatial attention modules before each pooling layer, which allow our network to focus on high-SNR regions such as the skin.
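Figure 3 describes standardized consecutive frame differences as network input, differenced PPG as labels, and HR computed from the estimated BVP. A minimal NumPy sketch of these steps follows, under stated assumptions: standardization here divides each difference sequence by its global standard deviation, and HR is read off the dominant spectral peak in a 40–180 bpm band after re-integrating the differenced signal with a cumulative sum. PulseFormer's exact normalization and HR post-processing may differ, and the function names are hypothetical.

```python
import numpy as np


def standardized_diff(x, axis=0, eps=1e-7):
    """Consecutive differences along `axis`, scaled to unit standard deviation.

    Applied to a (T, H, W) video this gives frame differences; applied to a
    1-D PPG trace it gives differenced training labels.
    """
    d = np.diff(np.asarray(x, dtype=float), axis=axis)
    return d / (d.std() + eps)


def hr_from_bvp(bvp, fs, min_bpm=40.0, max_bpm=180.0, pad_factor=8):
    """Heart rate in bpm from the dominant spectral peak of a BVP window."""
    bvp = np.asarray(bvp, dtype=float)
    bvp = bvp - bvp.mean()
    n = pad_factor * len(bvp)  # zero-padding gives a finer frequency grid
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    power = np.abs(np.fft.rfft(bvp, n=n)) ** 2
    band = (freqs >= min_bpm / 60.0) & (freqs <= max_bpm / 60.0)
    peak_hz = freqs[band][np.argmax(power[band])]
    return float(peak_hz * 60.0)


# Synthetic example: a 72 bpm (1.2 Hz) pulse sampled at 30 fps. 129 samples are
# used here (an illustrative choice) so that differencing leaves 128.
fs = 30.0
t = np.arange(129) / fs
ppg = np.sin(2 * np.pi * 1.2 * t)
labels = standardized_diff(ppg)           # shape (128,)
bvp_est = np.cumsum(labels)               # re-integrate the differenced signal
hr = hr_from_bvp(bvp_est, fs)

frames = np.random.default_rng(0).random((129, 8, 8))
frame_input = standardized_diff(frames)   # shape (128, 8, 8)
```

Differencing removes slow illumination drift and static appearance, so what remains is dominated by the small frame-to-frame changes in which the pulse lives.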

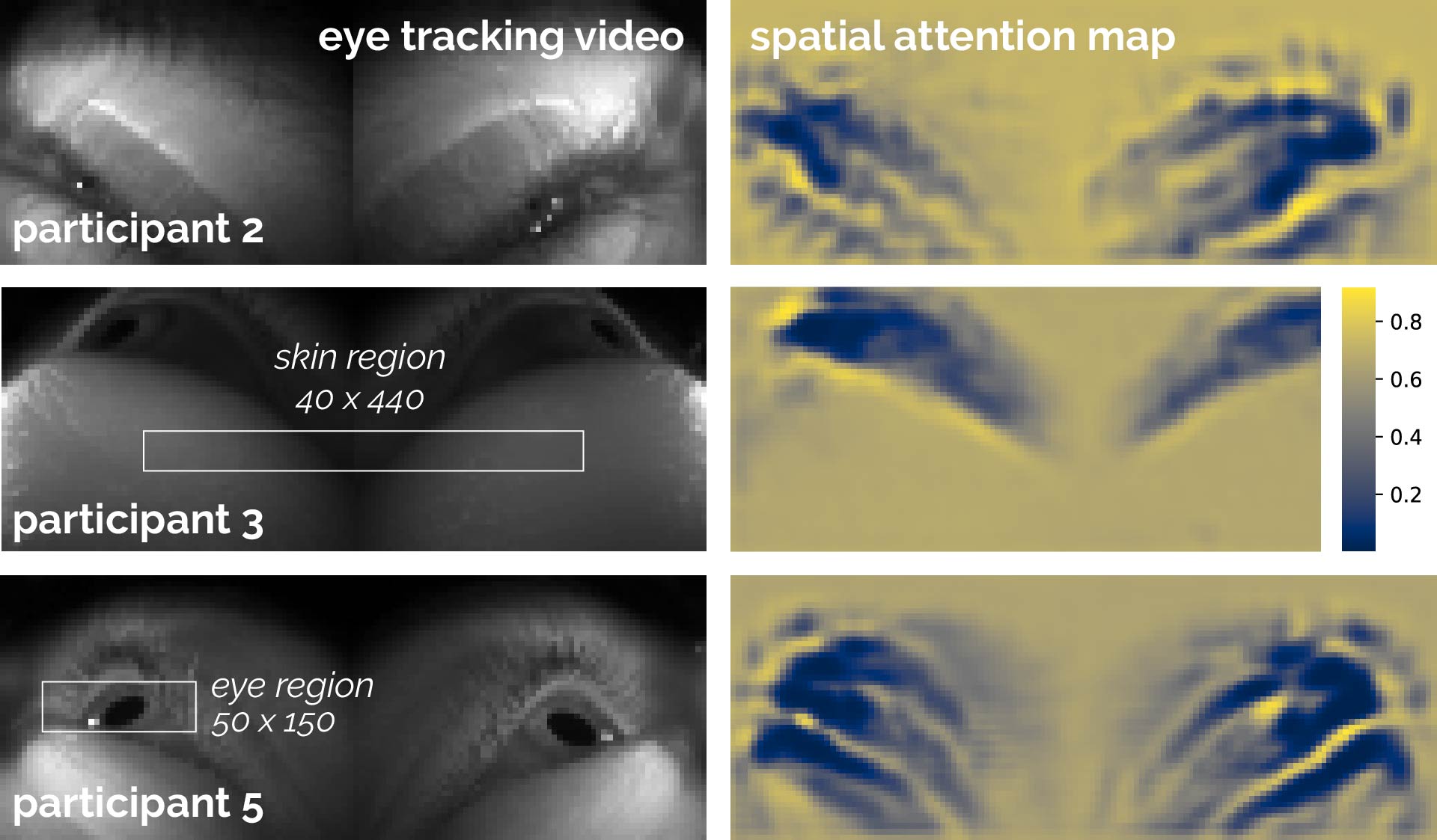

Spatial Attention Maps

Figure 4. Eye-tracking images and the learned spatial attention maps of different participants. Left: Head geometry determines which regions the eye tracker captures. Right: The learned spatial attention maps show that eye regions are excluded; PulseFormer instead extracts the BVP from the surrounding skin regions, which move less than the eyes.
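The spatial attention modules are what produce maps like these, but their exact form is not given here. As an assumption, the sketch below uses a DeepPhys-style soft mask: a 1×1 convolution (a weighted sum over channels) followed by a sigmoid and an L1 rescaling, so that low-SNR positions are down-weighted rather than hard-cropped. In a trained network the weights would be learned; here they are fixed by hand for illustration, and `spatial_attention` is a hypothetical name.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def spatial_attention(features, weights, bias=0.0):
    """Reweight a (C, H, W) feature map with a soft spatial mask.

    The mask is a 1x1 convolution over channels followed by a sigmoid,
    rescaled so that its mean over the H*W positions is 0.5.
    """
    _, h, w = features.shape
    logits = np.tensordot(weights, features, axes=([0], [0])) + bias  # (H, W)
    mask = sigmoid(logits)
    mask = h * w * mask / (2.0 * mask.sum())
    return features * mask[None, :, :], mask


# Toy feature map: channel 0 responds on the left half (standing in for stable
# skin), channel 1 on the right half (standing in for a moving eye).
feats = np.zeros((2, 4, 4))
feats[0, :, :2] = 3.0
feats[1, :, 2:] = 3.0
out, mask = spatial_attention(feats, weights=np.array([1.0, -1.0]))
```

The rescaling keeps the overall feature magnitude stable regardless of how many positions the mask favors, so the layers that follow see inputs of a similar scale.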

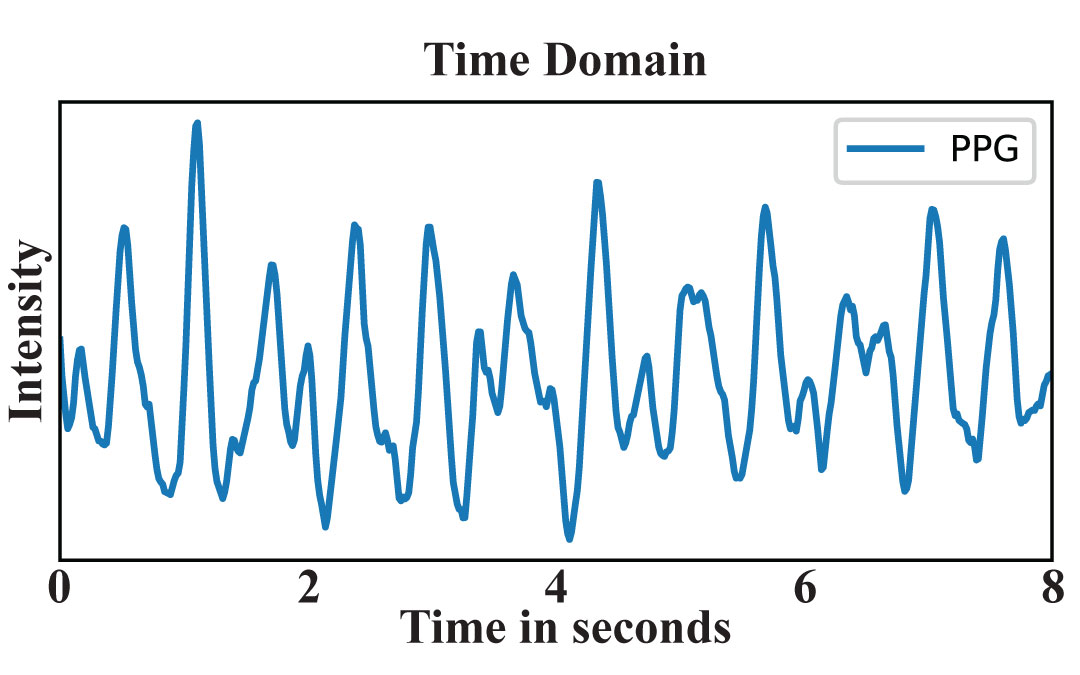

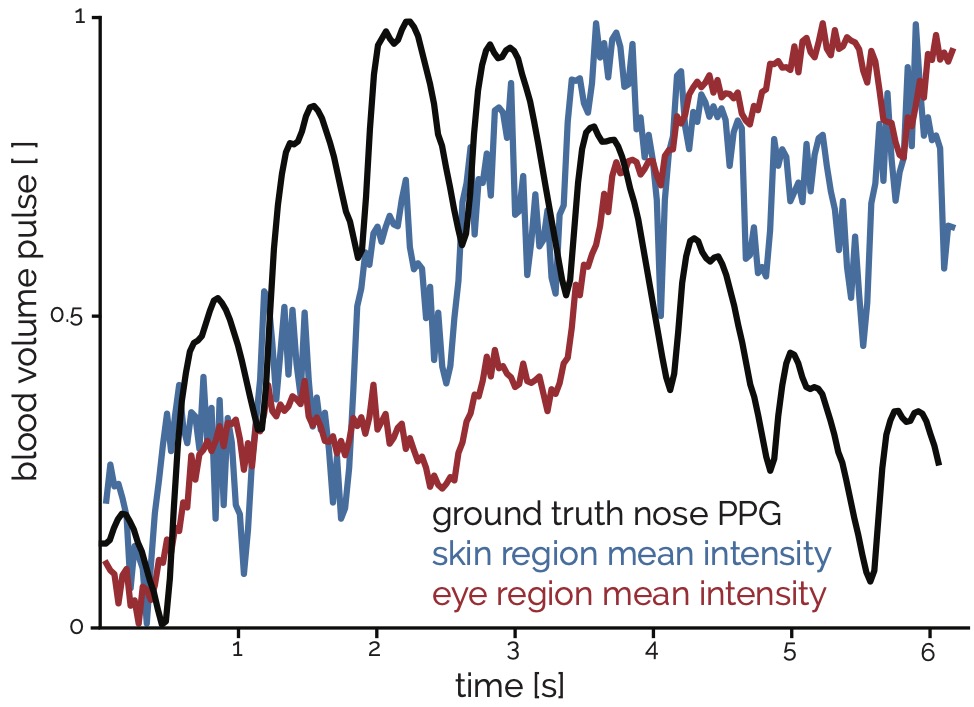

Raw Signal Intensities

Figure 5. Example raw mean intensity of the skin and eye regions compared to the ground-truth BVP, showing that the skin region around the eyes has a higher SNR than the eye region itself.
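A minimal sketch of this comparison, assuming boolean region masks are given: average the pixels inside each mask per frame, then score each trace with a common rPPG-style SNR. That SNR is the spectral power near the reference HR and its first harmonic divided by the remaining power in a 0.5–4 Hz band. The tolerance, band, mask names, and synthetic clip are assumptions and do not reproduce the paper's evaluation protocol.

```python
import numpy as np


def roi_mean_trace(frames, mask):
    """Per-frame mean intensity inside a boolean (H, W) mask for (T, H, W) frames."""
    return frames[:, mask].mean(axis=1)


def bvp_snr_db(trace, fs, hr_bpm, tol_hz=0.1, band=(0.5, 4.0), pad_factor=8):
    """SNR in dB: power near HR and its first harmonic vs. the rest of the band."""
    trace = np.asarray(trace, dtype=float)
    trace = trace - trace.mean()
    n = pad_factor * len(trace)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    power = np.abs(np.fft.rfft(trace, n=n)) ** 2
    f0 = hr_bpm / 60.0
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    near_hr = (np.abs(freqs - f0) <= tol_hz) | (np.abs(freqs - 2 * f0) <= tol_hz)
    signal = in_band & near_hr
    noise = in_band & ~near_hr
    return float(10.0 * np.log10(power[signal].sum() / power[noise].sum()))


# Synthetic 10 s clip at 30 fps: a 72 bpm pulse in every pixel, with weak noise
# in a hypothetical "skin" region and strong noise in a hypothetical "eye" region.
fs, T, H, W = 30.0, 300, 6, 6
rng = np.random.default_rng(0)
pulse = np.sin(2 * np.pi * 1.2 * np.arange(T) / fs)
frames = np.repeat(pulse[:, None, None], H, axis=1).repeat(W, axis=2)
skin_mask = np.zeros((H, W), dtype=bool)
skin_mask[:, :3] = True
eye_mask = ~skin_mask
frames[:, skin_mask] += rng.normal(0.0, 0.1, (T, skin_mask.sum()))
frames[:, eye_mask] += rng.normal(0.0, 3.0, (T, eye_mask.sum()))

snr_skin = bvp_snr_db(roi_mean_trace(frames, skin_mask), fs, hr_bpm=72.0)
snr_eye = bvp_snr_db(roi_mean_trace(frames, eye_mask), fs, hr_bpm=72.0)
```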

Dataset Overview

Figure 6. Additional images of the data recording showing the variety of everyday activities our dataset includes.