Gaze Comes in Handy

Predicting and Preventing Erroneous Hand Actions in AR-Supported Manual Tasks

IEEE ISMAR 2021

Abstract

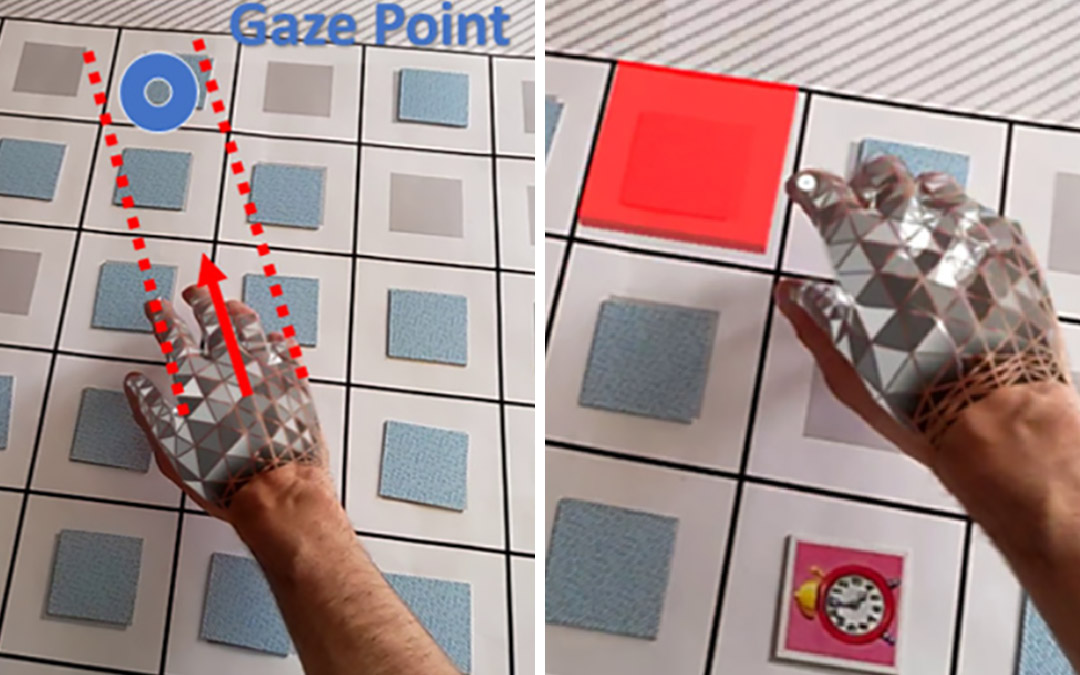

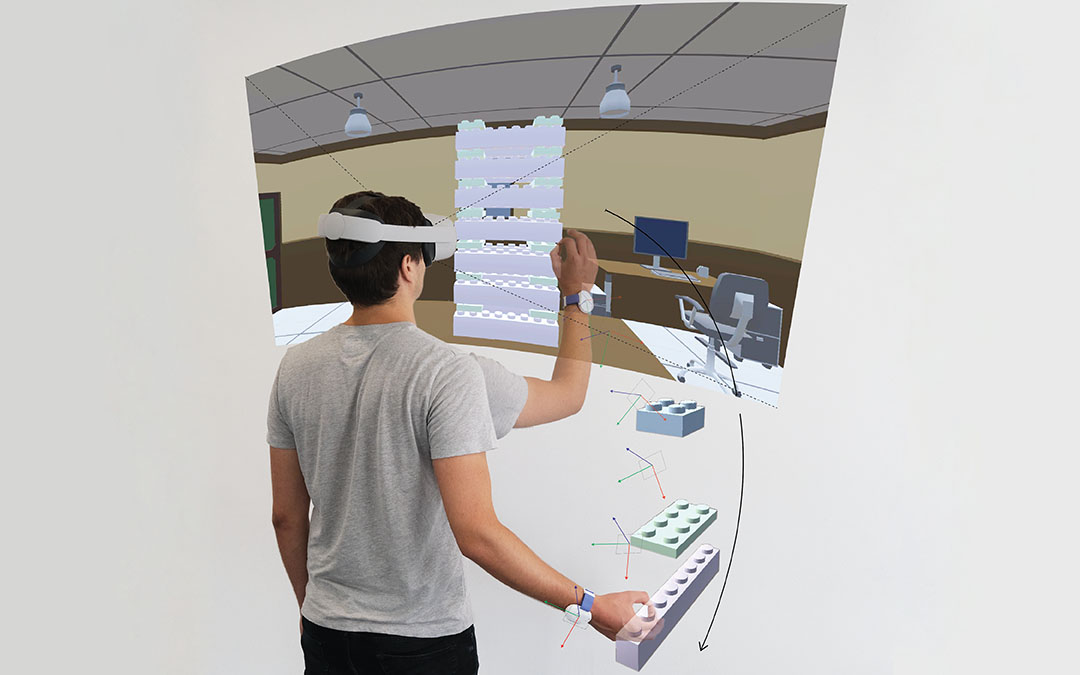

Emerging Augmented Reality headsets incorporate gaze and hand tracking and can, thus, observe the user’s behavior without interfering with ongoing activities. In this paper, we analyze hand-eye coordination in real-time to predict hand actions during target selection and warn users of potential errors before they occur. In our first user study, we recorded 10 participants playing a memory card game, which involves frequent hand-eye coordination with little task-relevant information. We found that participants’ gaze locked onto target cards 350 ms before the hands touched them in 73.3% of all cases, which coincided with the peak velocity of the hand moving to the target. Based on our findings, we then introduce a closed-loop support system that monitors the user’s fingertip position to detect the first card turn and analyzes gaze, hand velocity and trajectory to predict the second card before it is turned by the user. In a second study with 12 participants, our support system correctly displayed color-coded visual alerts in a timely manner with an accuracy of 85.9%. The results indicate the high value of eye and hand tracking features for behavior prediction and provide a first step towards predictive real-time user support.
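The abstract describes combining gaze with hand velocity and trajectory to anticipate the second card once the first card turn has been detected. The Python sketch below shows one way such a heuristic could look; it is not the authors' implementation. The `Sample` record, the coordinate frame, the `min_peak_speed` threshold of 0.3 m/s, the cosine-alignment trajectory fallback, and the green/red `alert_color` mapping are all hypothetical assumptions chosen for illustration. The only idea taken from the text is that gaze tends to rest on the target around the moment of peak hand velocity.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sample:
    t: float                  # timestamp in seconds (assumed strictly increasing)
    hand: Vec3                # fingertip position in meters (hypothetical frame)
    gaze_card: Optional[int]  # ID of the card hit by the gaze ray, or None


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cosine(a: Vec3, b: Vec3) -> float:
    """Cosine of the angle between two vectors (-1.0 if either is zero)."""
    na, nb = math.hypot(*a), math.hypot(*b)
    if na == 0.0 or nb == 0.0:
        return -1.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def predict_second_card(samples: Sequence[Sample], cards: Dict[int, Vec3],
                        first_card: int,
                        min_peak_speed: float = 0.3) -> Optional[int]:
    """Hypothetical predictor for the card the hand is reaching for next."""
    # The already-turned first card cannot be the second card.
    candidates = {c: p for c, p in cards.items() if c != first_card}
    if len(samples) < 2 or not candidates:
        return None
    # Finite-difference fingertip speed between consecutive samples (m/s).
    speeds = [math.dist(a.hand, b.hand) / (b.t - a.t)
              for a, b in zip(samples, samples[1:])]
    i = max(range(len(speeds)), key=speeds.__getitem__)
    if speeds[i] < min_peak_speed:
        return None  # no reaching movement detected yet
    at_peak = samples[i + 1]
    # Gaze cue: around peak hand velocity, gaze has often locked onto the target.
    if at_peak.gaze_card in candidates:
        return at_peak.gaze_card
    # Trajectory cue: the card best aligned with the current movement direction.
    velocity = _sub(at_peak.hand, samples[i].hand)
    return max(candidates,
               key=lambda c: _cosine(velocity, _sub(candidates[c], at_peak.hand)))


def alert_color(first_card: int, predicted: Optional[int],
                pair_of: Dict[int, int]) -> Optional[str]:
    """Hypothetical alert mapping: green for a matching pair, red otherwise."""
    if predicted is None:
        return None
    return "green" if pair_of.get(first_card) == predicted else "red"
```

In a real-time system this would presumably run on a sliding window of recent tracking samples, re-evaluating the prediction each frame; taking the maximum over the whole window, as done here, is a simplification of online peak detection.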

Reference

Julian Wolf, Quentin Lohmeyer, Christian Holz, and Mirko Meboldt. Gaze Comes in Handy: Predicting and Preventing Erroneous Hand Actions in AR-Supported Manual Tasks. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR 2021).