The Personality Dimensions GPT-3 Expresses During Human-Chatbot Interactions

ACM IMWUT 2024

Abstract

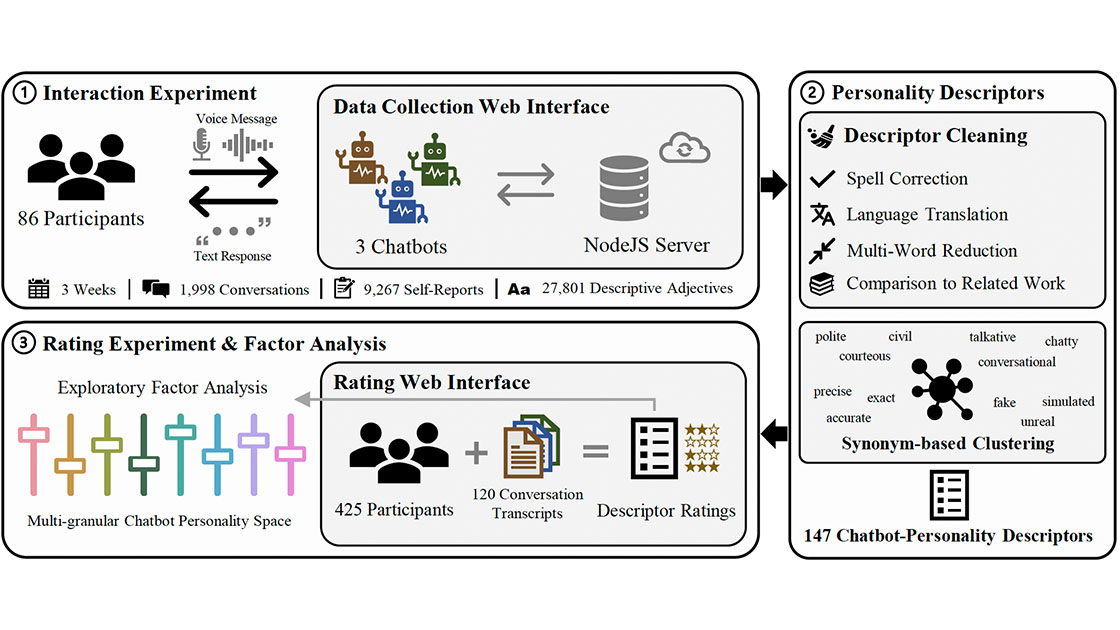

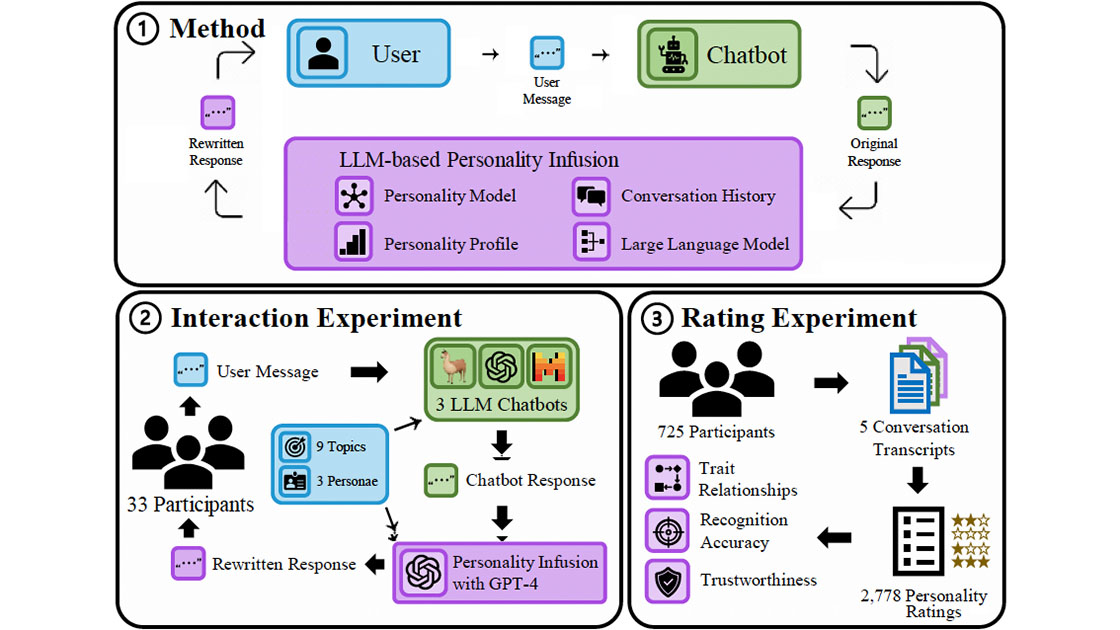

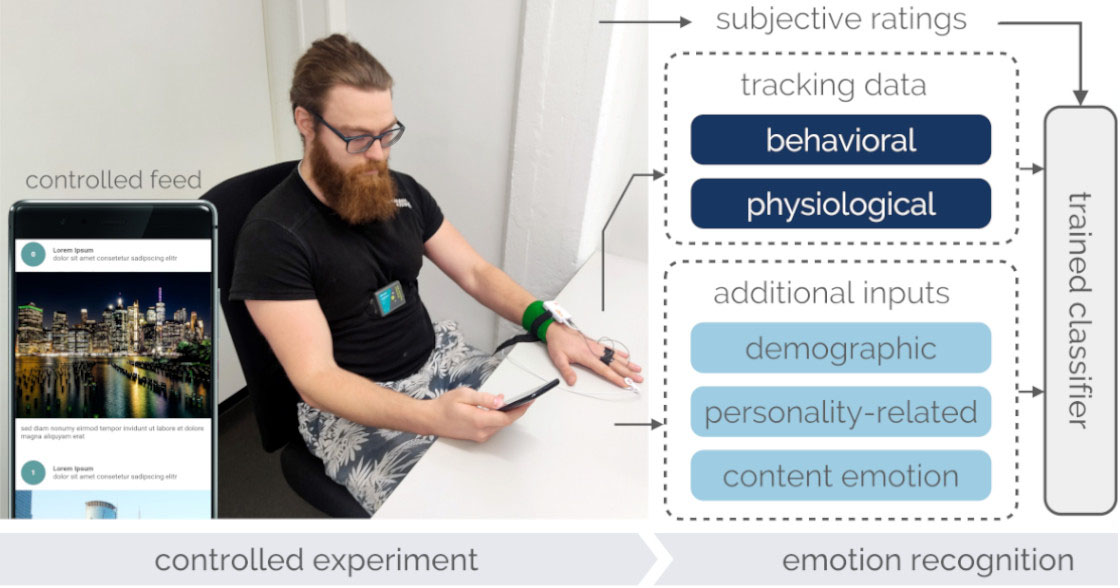

Large language models such as GPT-3 and ChatGPT can mimic human-to-human conversation with unprecedented fidelity, enabling applications such as conversational agents for education and non-player characters in video games. In this work, we investigate the underlying personality structure that a GPT-3-based chatbot expresses during conversations with a human. In a three-week user study, we collected 147 chatbot personality descriptors from 86 participants while they interacted with the GPT-3-based chatbot. Then, 425 new participants rated the 147 personality descriptors in an online survey. An exploratory factor analysis of the collected descriptors shows that, although they overlap, human personality models do not fully transfer to the chatbot’s personality as perceived by humans. We also show that the perceived personality differs significantly from that of virtual personal assistants, for which users focus more on serviceability and functionality. We discuss the implications of ever-evolving large language models and how they change users’ perception of agent personalities.

Reference

Nikola Kovacevic, Christian Holz, Markus Gross, and Rafael Wampfler. The Personality Dimensions GPT-3 Expresses During Human-Chatbot Interactions. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (ACM IMWUT), 2024.