Structured Light Speckle

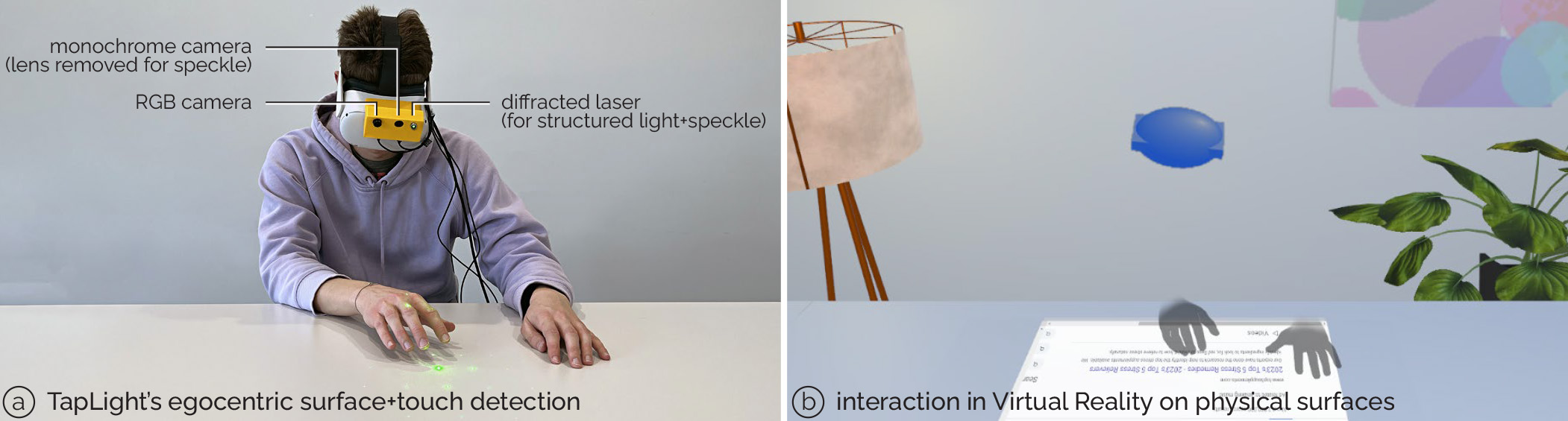

Joint egocentric depth estimation and low-latency contact detection via remote vibrometry

ACM UIST 2023

Abstract

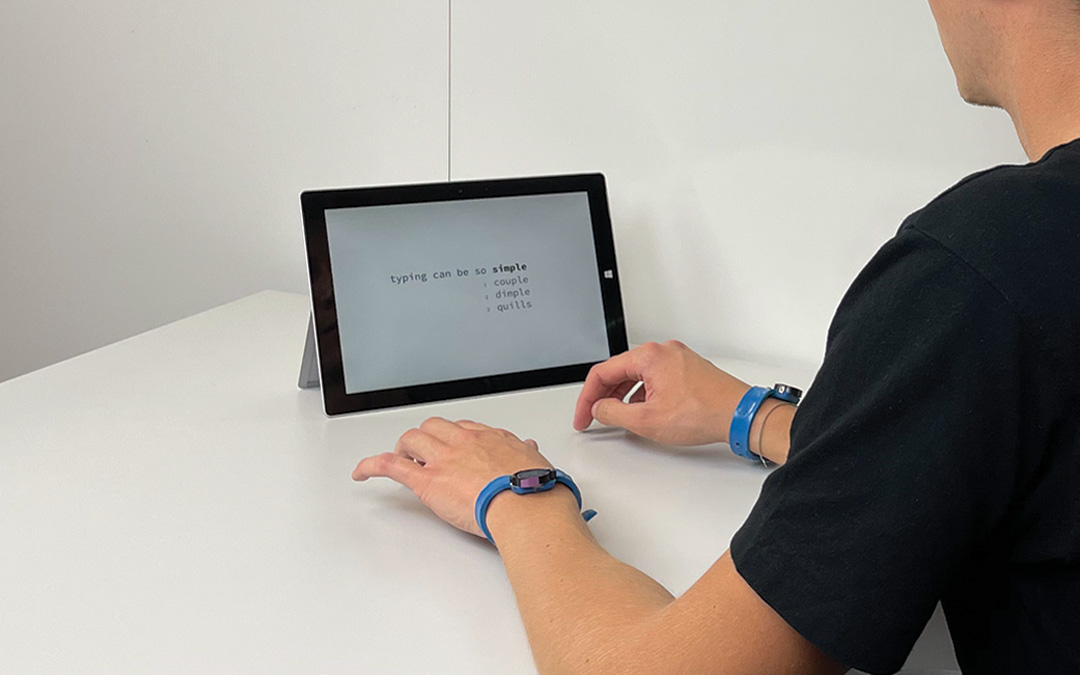

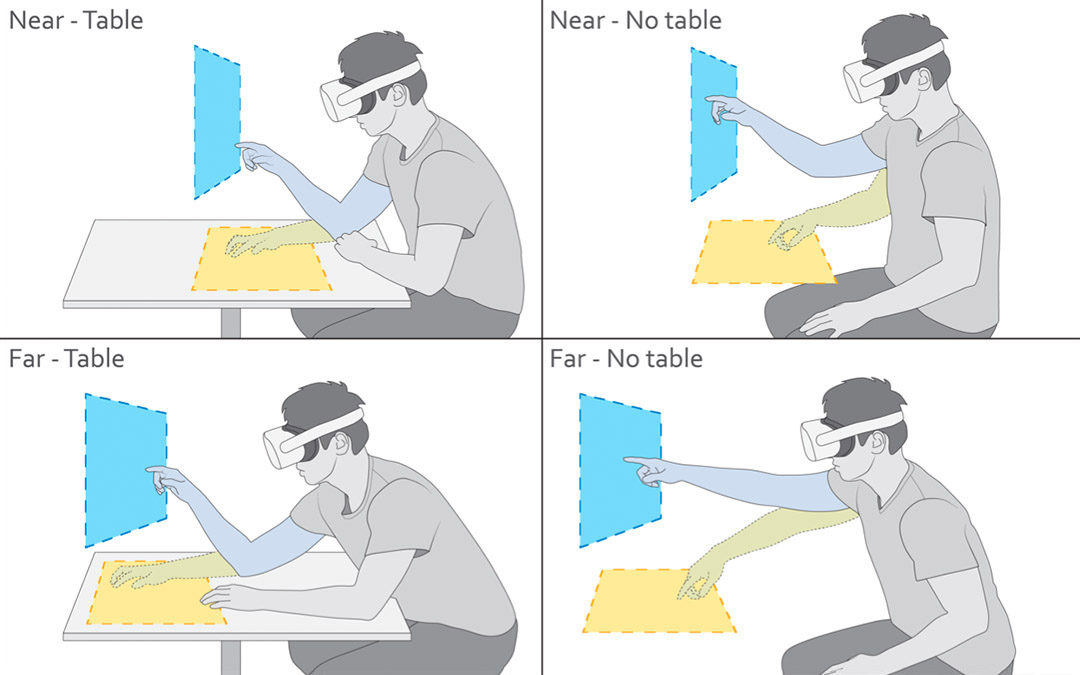

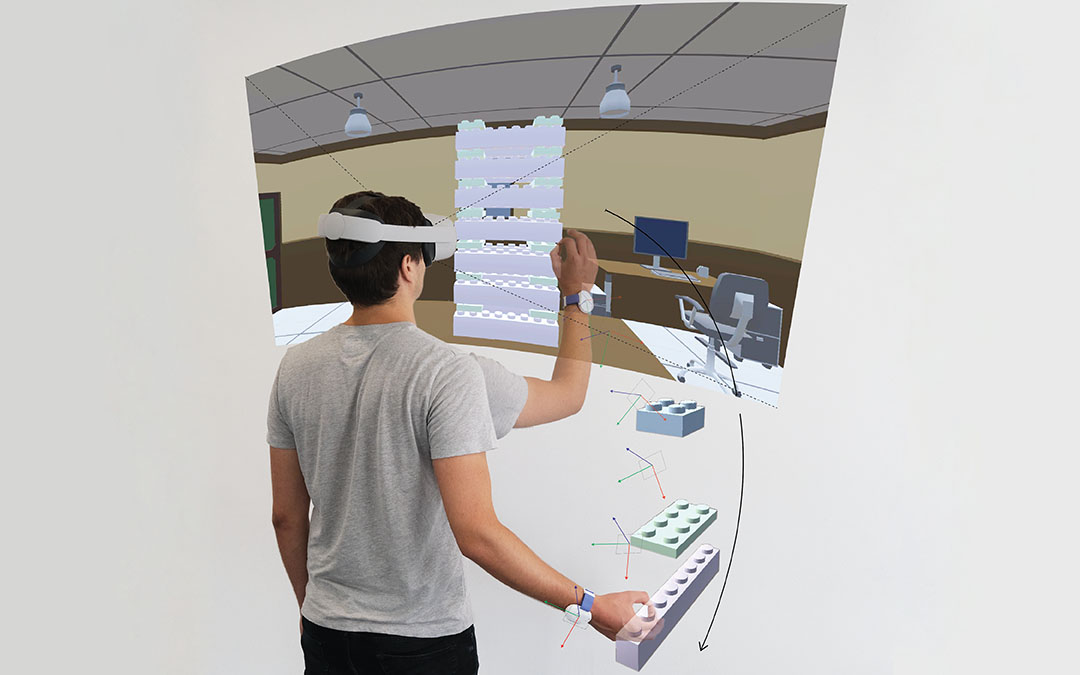

Despite advancements in egocentric hand tracking using head-mounted cameras, contact detection with real-world objects remains challenging, particularly for the quick motions often performed during interaction in Mixed Reality. In this paper, we introduce a novel method for detecting touch on discovered physical surfaces purely from an egocentric perspective using optical sensing. We leverage structured laser light to detect real-world surfaces from the disparity of reflections in real time and, at the same time, extract a time series of remote vibrometry sensations from laser speckle motions. The pattern caused by structured laser light reflections enables us to simultaneously sample the mechanical vibrations that propagate through the user’s hand and the surface upon touch. We integrated Structured Light Speckle into TapLight, a prototype system that is a simple add-on to Mixed Reality headsets. In our evaluation with a Quest 2, TapLight, while moving, reliably detected horizontal and vertical surfaces across a range of surface materials. TapLight also reliably detected rapid touch contact and robustly discarded other hand motions to prevent triggering spurious input events. Despite the remote sensing principle of Structured Light Speckle, our method achieved a latency for event detection in realistic settings that matches that of body-worn inertial sensing, without needing such additional instrumentation. We conclude with a series of VR demonstrations for situated interaction that leverage the quick touch interaction TapLight supports.
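To make the two sensing ideas in the abstract concrete, the following is a minimal illustrative sketch and not the TapLight pipeline. It assumes a rectified projector-camera pair, for which depth follows the standard triangulation relation z = f · b / d, and it stands in for contact detection with a simple threshold on sample-to-sample changes of a one-dimensional speckle-motion signal. The function names, parameters, and the thresholding rule are all hypothetical choices made for illustration.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Triangulated depth in meters: z = f * b / d (standard relation).

    Assumes a rectified projector-camera pair; all parameters are
    hypothetical and not taken from the paper.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def detect_taps(signal, threshold, refractory):
    """Return sample indices where the signal jumps by more than `threshold`.

    `signal` stands in for a speckle-motion time series. A jump is the
    absolute difference between consecutive samples. After an event, further
    jumps are ignored until at least `refractory` samples have passed.
    This is a naive stand-in, not the detector used by the authors.
    """
    events = []
    last = -refractory  # allows an event at the very first comparison
    for i in range(1, len(signal)):
        jump = abs(signal[i] - signal[i - 1])
        if jump > threshold and i - last >= refractory:
            events.append(i)
            last = i
    return events


if __name__ == "__main__":
    # A dot shifted by 20 px, with a 600 px focal length and a 5 cm baseline.
    print(depth_from_disparity(20.0, 600.0, 0.05))
    # Two bursts in an otherwise quiet signal.
    print(detect_taps([0, 0, 0, 5, 0, 0, 0, 0, 6, 0], threshold=2, refractory=3))
```

The refractory window is one simple way to keep the decaying part of a single vibration burst from registering as a second contact; a real system would likely need filtering and a learned or tuned classifier to reject other hand motions.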

Reference

Paul Streli, Jiaxi Jiang, Juliete Rossie, and Christian Holz. Structured Light Speckle: Joint egocentric depth estimation and low-latency contact detection via remote vibrometry. In Proceedings of ACM UIST 2023.

Acknowledgments

We thank NVIDIA for the provision of computing resources through the NVIDIA Academic Grant.