CapContact

Super-resolution Contact Areas from Capacitive Touchscreens

ACM CHI 2021 Best Paper

Abstract

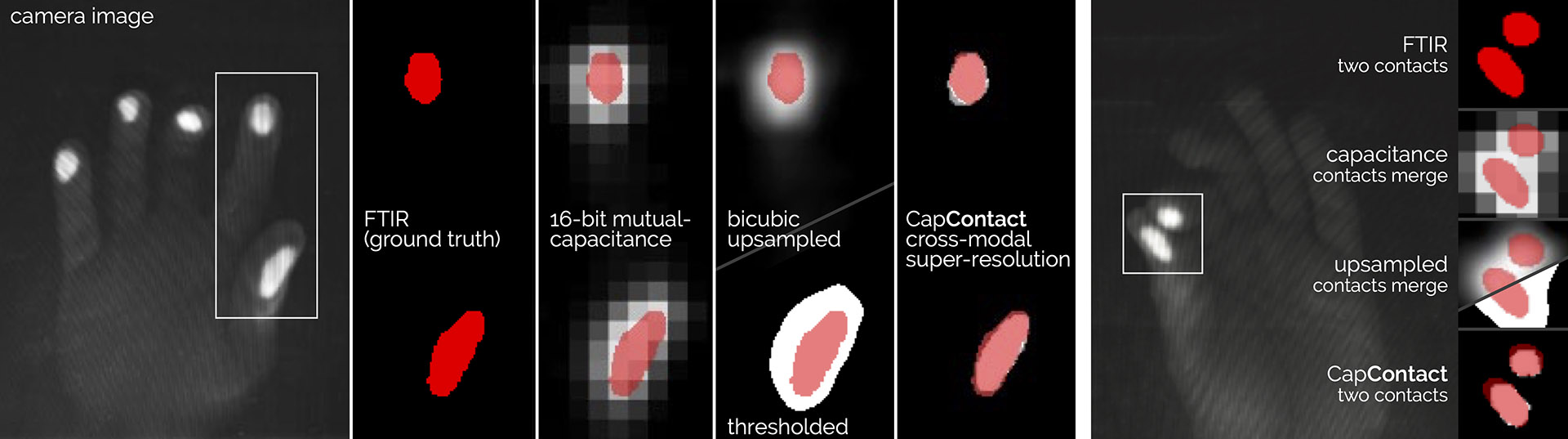

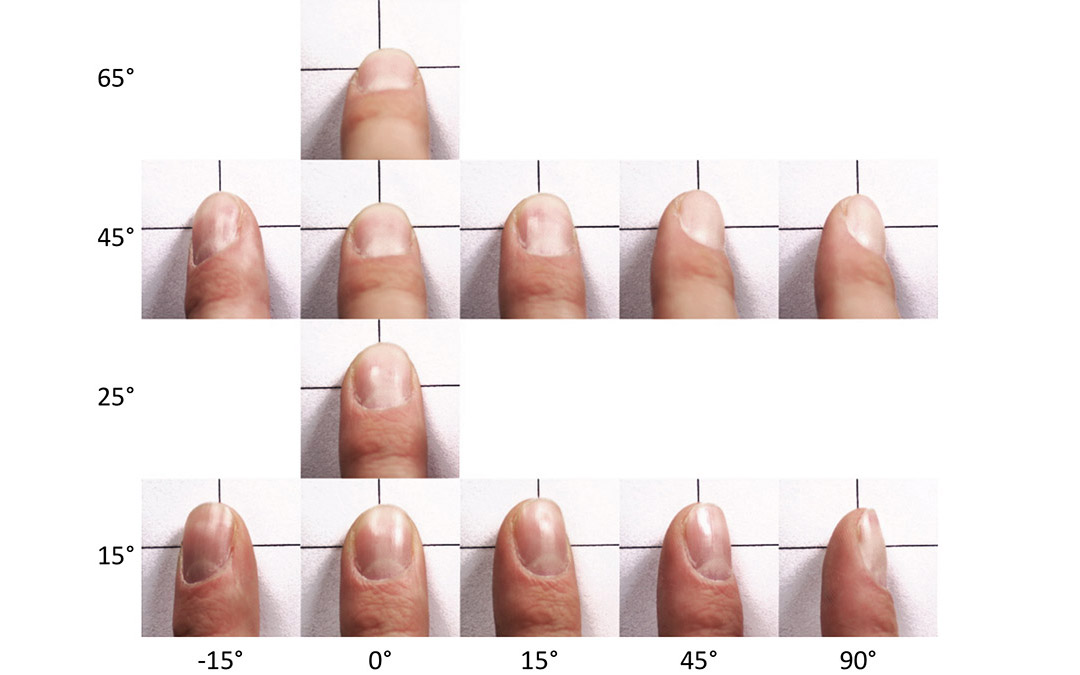

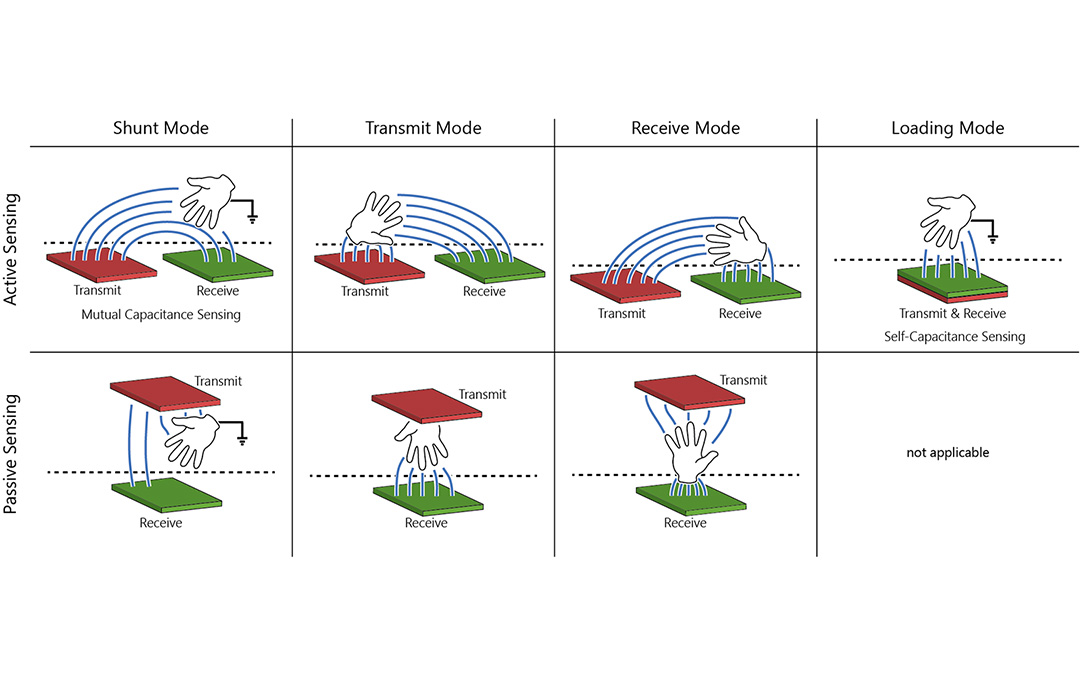

Touch input is dominantly detected using mutual-capacitance sensing, which measures the proximity of close-by objects that change the electric field between the sensor lines. The exponential drop-off in intensities with growing distance enables software to detect touch events, but does not reveal true contact areas. In this paper, we introduce CapContact, a novel method to precisely infer the contact area between the user’s finger and the surface from a single capacitive image. At 8x super-resolution, our convolutional neural network generates refined touch masks from 16-bit capacitive images as input, which can even discriminate adjacent touches that are not distinguishable with existing methods. We trained and evaluated our method using supervised learning on data from 10 participants who performed touch gestures. Our capture apparatus integrates optical touch sensing to obtain ground-truth contact through high-resolution frustrated total internal reflection (FTIR). We compare our method with a baseline using bicubic upsampling as well as the ground truth from FTIR images. We separately evaluate our method’s performance in discriminating adjacent touches. CapContact successfully separated closely adjacent touch contacts in 494 of 570 cases (87%) compared to the baseline’s 43 of 570 cases (8%). Importantly, we demonstrate that our method performs accurately even at half the sensing resolution, that is, at twice the grid-line pitch across the same surface area, challenging the current industry-wide standard of a ∼4 mm sensing pitch. We conclude this paper with implications for capacitive touch sensing in general and for touch-input accuracy in particular.

Reference

Paul Streli and Christian Holz. CapContact: Super-resolution Contact Areas from Capacitive Touchscreens. In Proceedings of ACM CHI 2021.

More images

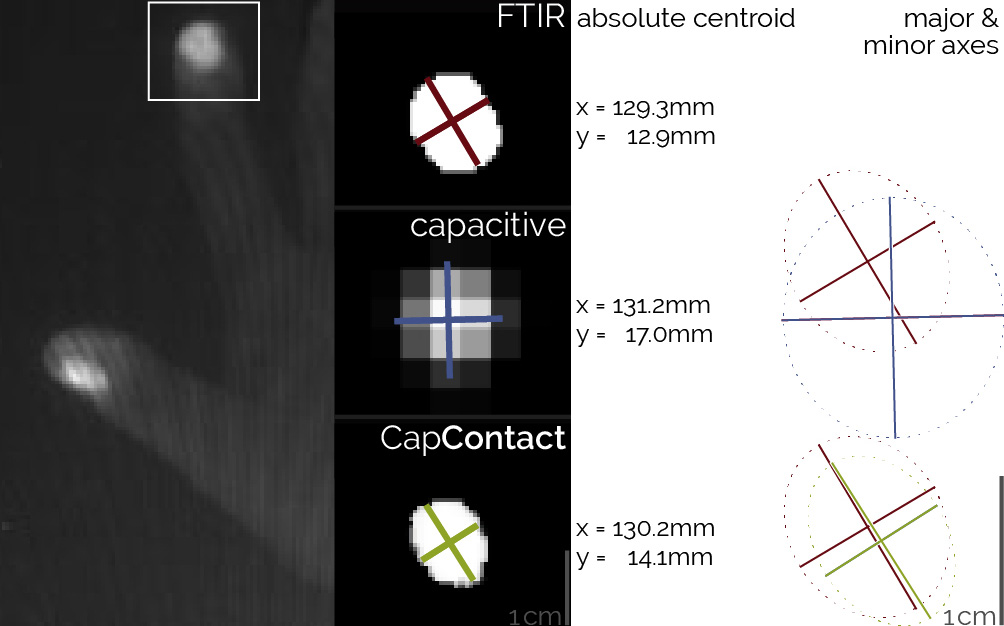

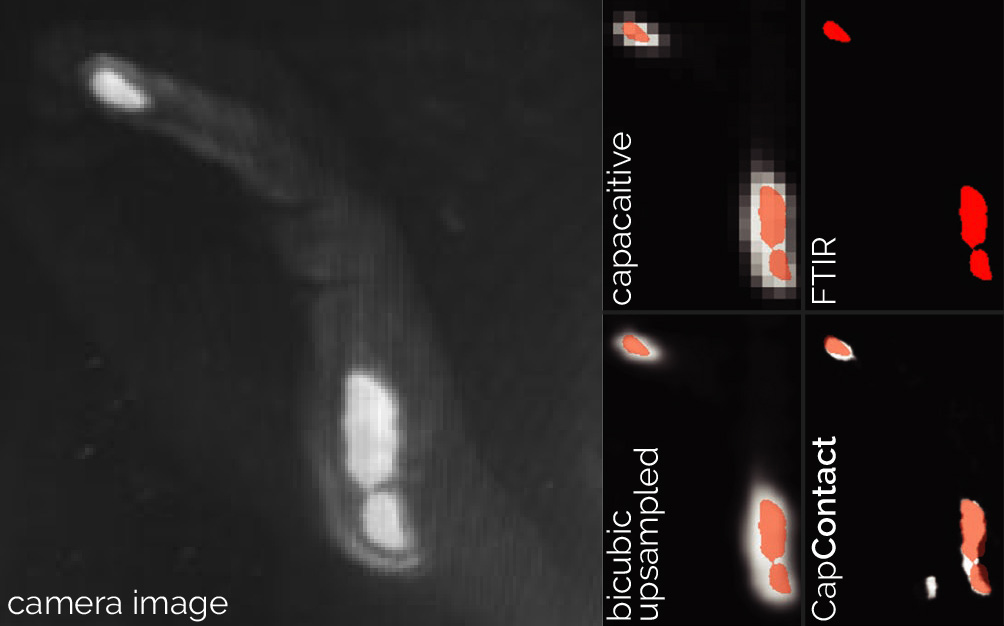

Figure 2: CapContact’s high-resolution reconstruction of the contact area between the user’s finger and the surface produces a more accurate input location of the touch event compared to the center of mass from the capacitive values.
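The comparison in Figure 2 comes down to two centroid computations: an intensity-weighted center of mass on the low-resolution capacitive frame, and a centroid of the high-resolution contact mask mapped back to sensor coordinates. The following is a minimal sketch of both, not the authors' code. The sample frame, the function names, and the pixel-center convention used for the coordinate mapping are assumptions made for illustration.

```python
import numpy as np

def center_of_mass(image):
    """Intensity-weighted centroid (row, col) of a 2D array.

    Works for raw capacitive frames and for binary contact masks alike.
    """
    image = np.asarray(image, dtype=float)
    total = image.sum()
    if total == 0:
        raise ValueError("image contains no signal")
    rows, cols = np.indices(image.shape)
    return (float((rows * image).sum() / total),
            float((cols * image).sum() / total))

def to_sensor_coords(point, scale=8):
    """Map a (row, col) position from the upsampled grid to the sensor grid.

    Assumes pixel centers sit at integer coordinates on both grids
    (an illustrative convention, not taken from the paper).
    """
    return tuple((p + 0.5) / scale - 0.5 for p in point)

# Hypothetical 4x4 capacitive frame with a skewed intensity profile.
cap = np.array([[0,  0,  0, 0],
                [0, 10, 30, 0],
                [0, 10, 30, 0],
                [0,  0,  0, 0]])
print(center_of_mass(cap))               # (1.5, 1.75)
print(to_sensor_coords((3.5, 3.5), 8))   # (0.0, 0.0)
```

Because the capacitive intensities also respond to parts of the finger that hover above the surface, the weighted centroid can drift away from the centroid of the actual contact area; computing the centroid on the reconstructed mask removes that bias.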

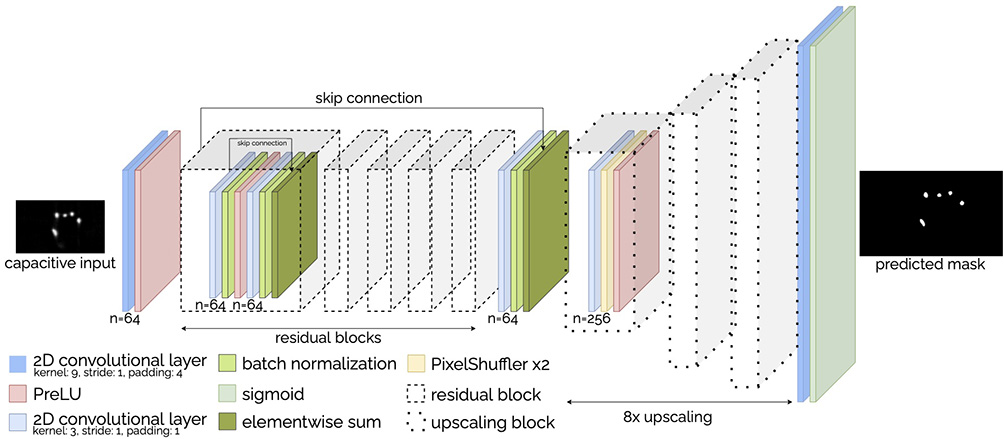

Figure 3: Our generator is a VGG-style fully-convolutional network. Five residual blocks extract the relevant features from the low-resolution capacitive input. Three consecutive sub-pixel convolution layers then upsample it 8×.
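To illustrate how sub-pixel convolution layers reach 8× upsampling, the sketch below implements only the rearrangement step (often called pixel shuffle) in NumPy and chains it three times. It assumes that each of the three stages upsamples by 2× (since 2 × 2 × 2 = 8), which the caption does not state explicitly, and it omits the learned convolutions that would precede each shuffle in a real network. The channel count of 64 is chosen purely so that the shapes work out without those convolutions.

```python
import numpy as np

def pixel_shuffle(x, r):
    """Rearrange a (C*r*r, H, W) tensor into (C, H*r, W*r).

    Output pixel (c, h*r + i, w*r + j) is taken from input channel
    c*r*r + i*r + j at position (h, w).
    """
    c_in, h, w = x.shape
    if c_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c = c_in // (r * r)
    x = x.reshape(c, r, r, h, w)      # split channels into (c, i, j)
    x = x.transpose(0, 3, 1, 4, 2)    # reorder to (c, h, i, w, j)
    return x.reshape(c, h * r, w * r)

# Hypothetical feature map: 64 channels over a 2x3 low-resolution grid.
features = np.arange(64 * 2 * 3, dtype=float).reshape(64, 2, 3)

out = features
for _ in range(3):                    # three 2x stages -> 8x overall
    out = pixel_shuffle(out, 2)

print(out.shape)                      # (1, 16, 24)
```

In a trained generator, a convolution before each shuffle would restore the channel count, so the spatial resolution doubles per stage while the network keeps learning which sub-pixel value belongs where.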

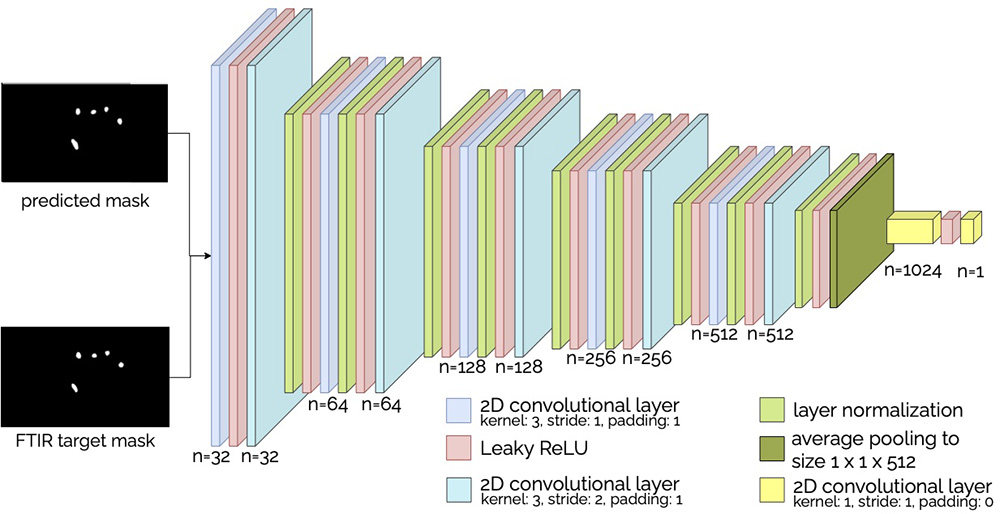

Figure 4: Our critic is based on a VGG-style convolutional neural network. Instead of batch normalization, we apply layer normalization to match the WGAN-GP loss. Our network uses ten convolutional layers to reduce the dimensions of the input image, before it reduces the input to a single dimension using average pooling.
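For context, the standard WGAN-GP critic objective (as commonly stated in the literature, not copied from this paper) can be written as follows. Here $x$ would be a ground-truth FTIR contact mask, $\tilde{x}$ a generated mask, and $\lambda$ the penalty weight; the value of $\lambda$ and any additional loss terms used by the authors are not given on this page.

$$
L_{\text{critic}} = \mathbb{E}_{\tilde{x} \sim P_g}\big[D(\tilde{x})\big] - \mathbb{E}_{x \sim P_r}\big[D(x)\big] + \lambda\, \mathbb{E}_{\hat{x} \sim P_{\hat{x}}}\Big[\big(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\big)^2\Big], \qquad \hat{x} = \epsilon x + (1 - \epsilon)\,\tilde{x},\ \epsilon \sim U[0, 1]
$$

The gradient penalty is computed per sample. Batch normalization couples the samples within a batch, which would make the per-sample gradient norm ill-defined, whereas layer normalization normalizes each sample independently.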

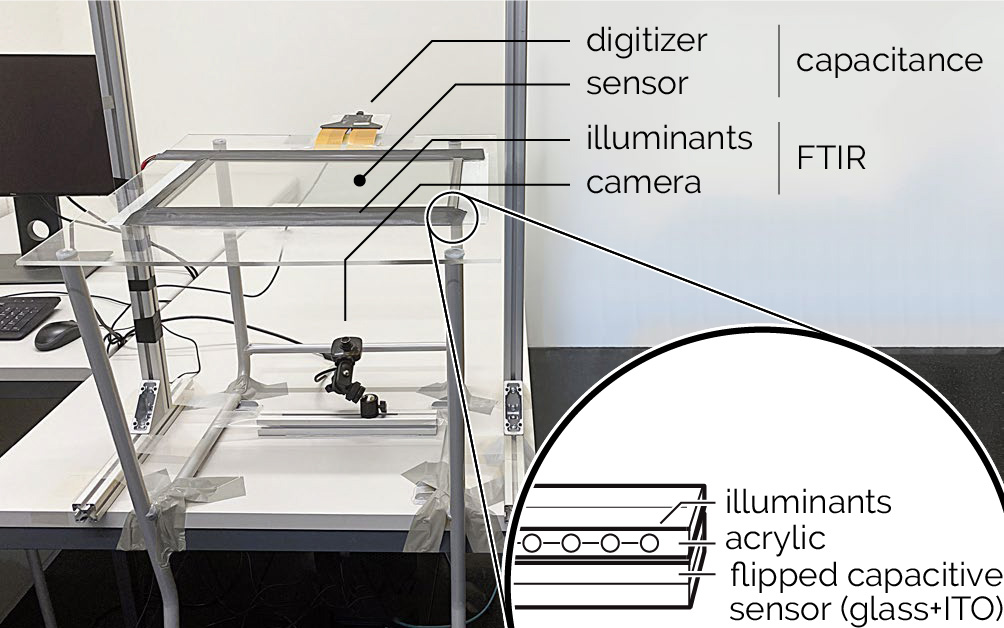

Figure 5: Our apparatus integrates mutual-capacitance touch sensing and optical touch sensing using frustrated total internal reflection to capture accurate contact areas. We flipped the capacitive sensor so that the ITO layer faces up. A sheet of Plexiglas placed on top completed the FTIR setup.

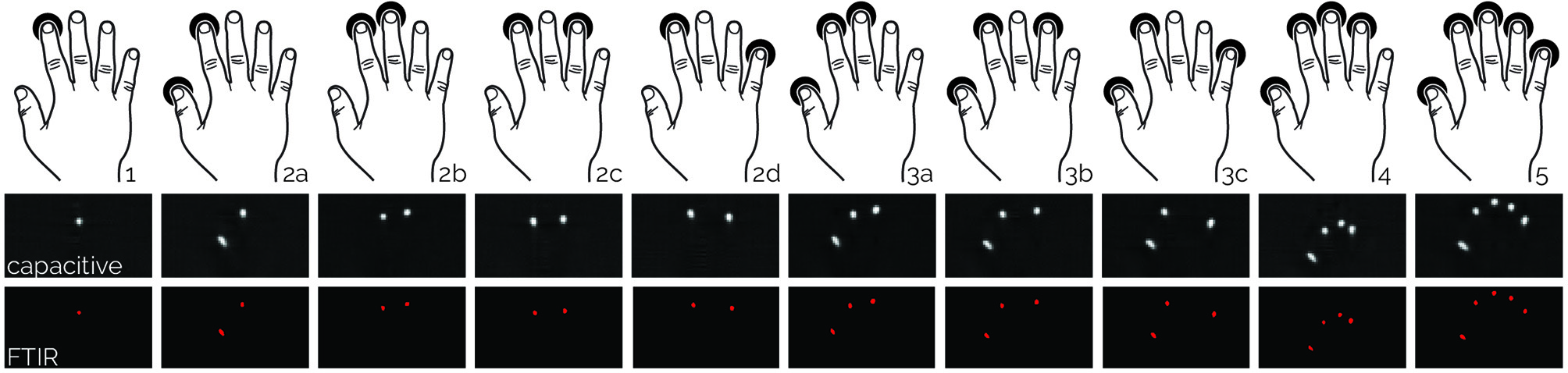

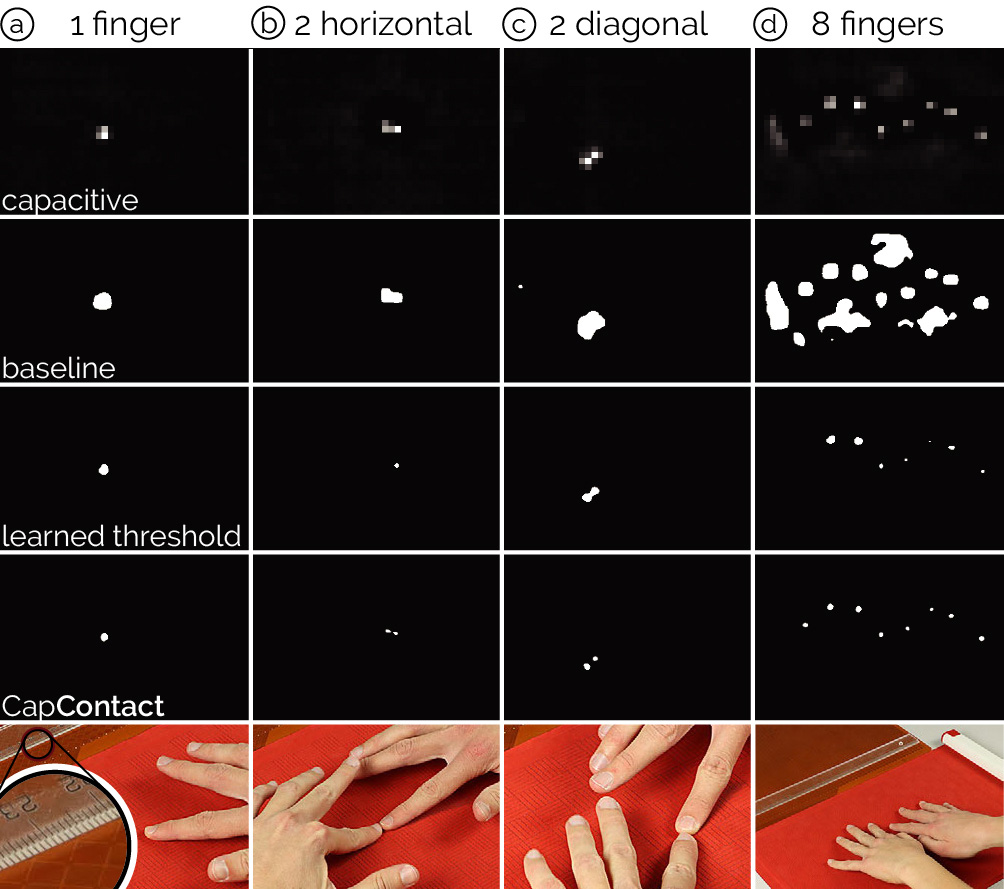

Figure 6: In our data capture study, participants produced a series of touch input and drag events using each of these finger combinations (black circles indicate touches), once at 0° yaw rotation and once at 90° yaw rotation. The figure includes representative capacitive images and their corresponding contact area images from the FTIR sensor for each pose.

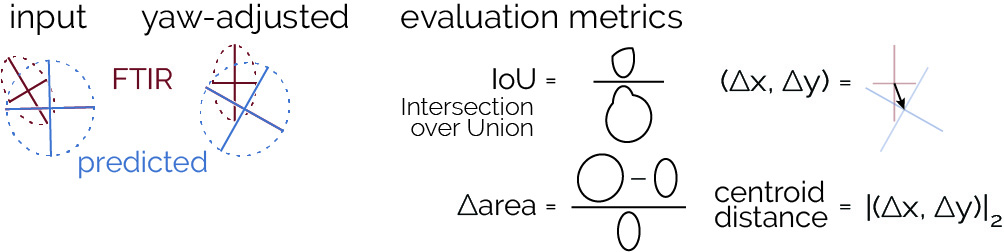

Figure 7: We compare predictions to the ground-truth contact mask using these metrics. For offsets, we first adjust for yaw. Not shown: percentage difference in aspect ratios.
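Two of the metrics reported in the results, intersection over union (IoU) and centroid error, can be sketched on binary masks as follows. This is an illustrative implementation under simplifying assumptions: the function names and the `pixel_pitch` parameter are hypothetical, the masks are toy data, and the yaw adjustment mentioned in the caption is not modeled.

```python
import numpy as np

def iou(pred, truth):
    """Intersection over union of two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(np.logical_and(pred, truth).sum() / union)

def centroid_error(pred, truth, pixel_pitch=1.0):
    """Euclidean distance between mask centroids, scaled by pixel pitch."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    if not pred.any() or not truth.any():
        raise ValueError("both masks must contain at least one pixel")
    p = np.argwhere(pred).mean(axis=0)
    t = np.argwhere(truth).mean(axis=0)
    return float(np.linalg.norm(p - t) * pixel_pitch)

# Toy masks: the prediction is one column wider than the ground truth.
truth = np.zeros((4, 4), dtype=bool)
truth[1:3, 1:3] = True
pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:4] = True

print(round(iou(pred, truth), 3))      # 0.667
print(centroid_error(pred, truth))     # 0.5
```

An overly wide prediction, such as a thresholded upsampled capacitive image, lowers IoU through the enlarged union and shifts the centroid toward the excess area, which is why the two metrics are reported together.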

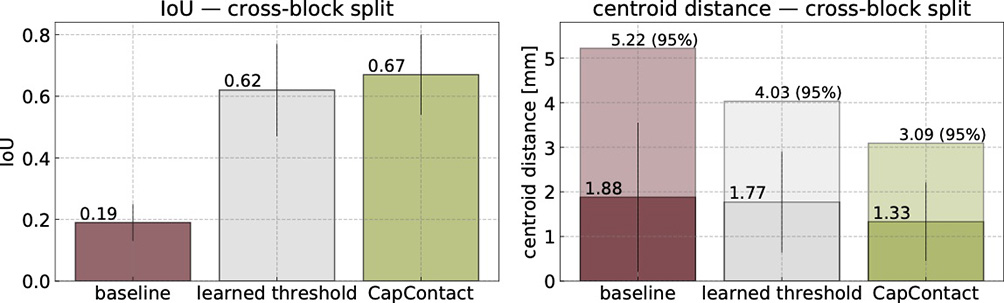

Figure 8: Exp. 1: Tested across blocks within participants, CapContact and the learned threshold achieved a much higher IoU score than the baseline, improving it by factors of 3.5 and 3.2, respectively. On average, CapContact reduced the centroid error by ∼30%, whereas the learned threshold reduced it by 6%.

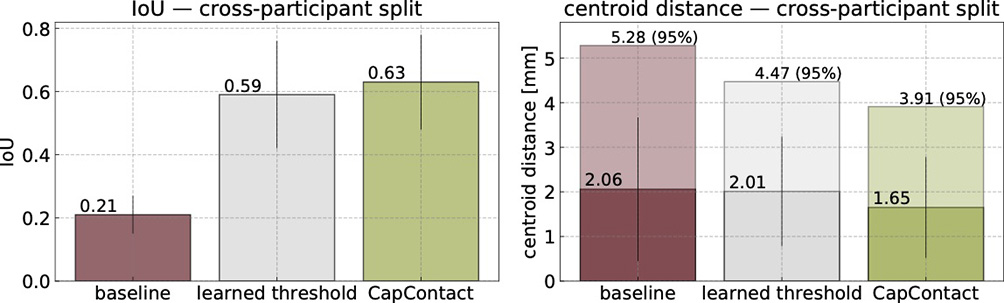

Figure 9: Exp. 2: Tested across participants, CapContact and the learned threshold achieved a much higher IoU score than the baseline, improving it by factors of 3 and 2.8, respectively. CapContact reduced the average centroid error by 20%, whereas the learned threshold reduced it by 2.5%.

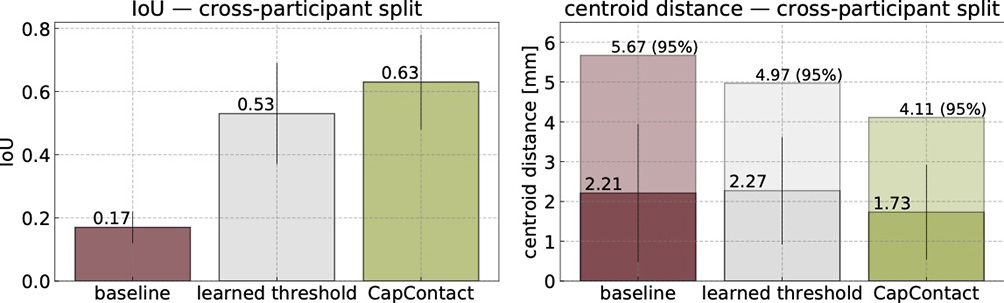

Figure 10: Exp. 4: Half-resolution performance on touch metrics. CapContact and the learned threshold achieved an average IoU score that is 3.7× and 3.1× higher than the baseline, respectively. CapContact lowered the average centroid error by 22%, whereas the learned threshold increased it by 3%.

Figure 11: Exp. 6: CapContact running at half resolution (Project Zanzibar mat, 7 mm pitch). Trained solely on down-sized data from our capture study, CapContact robustly detects individual contact areas, while all threshold-based methods suffer from noise and missed touch events.

Figure 12: Sample image of the side of the hand resting on the surface while writing with a pen. Since our dataset to date contains combinations of finger touches at various angles, but does not include more general and complex shapes, the fidelity of shape approximation is limited. While CapContact reconstructs shapes that are more accurate than the bicubic baseline, which produces an expansive imprint, our method still misses the fine crevice between parts of the palm.

Acknowledgments

We are thankful to Microchip for supplying the ATMXT2954T2 touchscreen sensor IC as well as the ITO-based touch panel assembly used in our apparatus.