The Generalized Perceived Input Point Model

How to Double Touch Accuracy by Extracting Fingerprints

ACM CHI 2010

Abstract

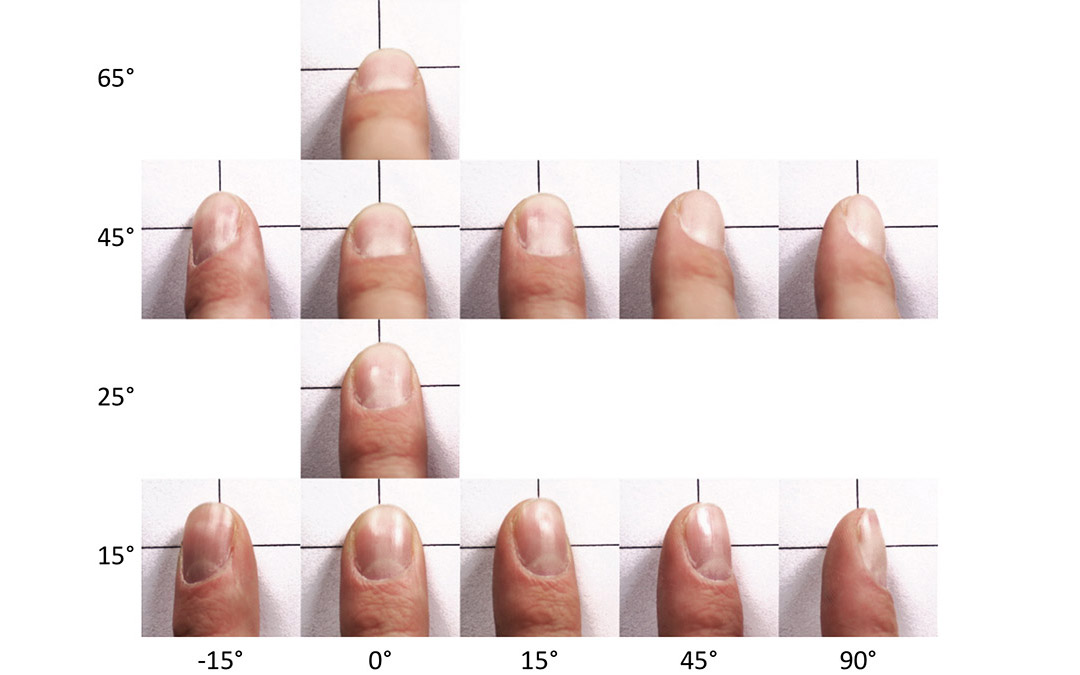

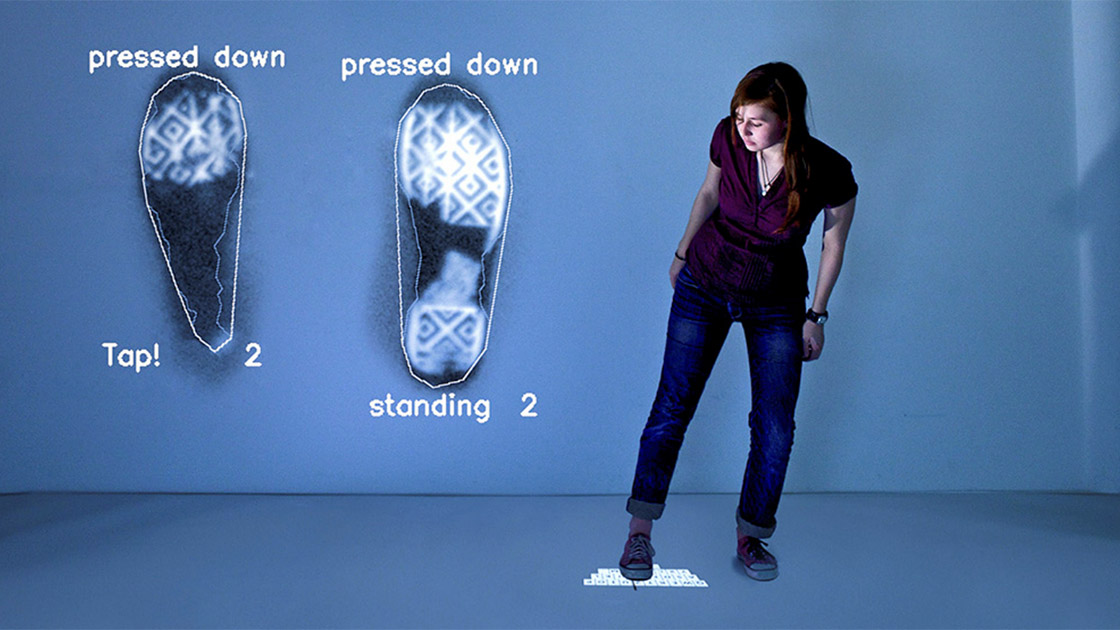

It is generally assumed that touch input cannot be accurate because of the fat finger problem, i.e., the softness of the fingertip combined with the occlusion of the target by the finger. In this paper, we show that this is not the case. We base our argument on a new model of touch inaccuracy. Our model is not based on the fat finger problem, but on the perceived input point model. In its published form, this model states that touch screens report touch location at an offset from the intended target. We generalize this model so that it represents offsets for individual finger postures and users. We thereby switch from the traditional 2D model of touch to a model that considers touch a phenomenon in 3-space. We report a user study, in which the generalized model explained 67% of the touch inaccuracy that was previously attributed to the fat finger problem. In the second half of this paper, we present two devices that exploit the new model in order to improve touch accuracy. Both model touch on a per-posture and per-user basis in order to increase accuracy by applying the respective offsets. Our Ridgepad prototype extracts posture and user ID from the user’s fingerprint during each touch interaction. In a user study, it achieved 1.8 times higher accuracy than a simulated capacitive baseline condition. A prototype based on optical tracking achieved 3.3 times higher accuracy. The increase in accuracy can be used to make touch interfaces more reliable, to pack up to 3.3^2 > 10 times more controls into the same surface, or to bring touch input to very small mobile devices.
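The core idea of applying per-user, per-posture offsets can be illustrated with a minimal sketch. This is not the authors' implementation: the `Posture` buckets, the `OFFSETS` table, the user names, and all offset values are hypothetical placeholders chosen only to show the lookup-and-subtract step, and the sketch assumes that user ID and posture have already been obtained (for example from a fingerprint, as Ridgepad does). The second function reproduces the abstract's arithmetic: if targets can shrink by a factor of k along each screen axis, roughly k^2 times as many fit in the same area.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Posture:
    """Coarse finger posture in degrees (hypothetical discretization)."""
    pitch: int
    roll: int
    yaw: int


# Hypothetical calibration table: (user_id, posture) -> (dx, dy) offset in mm.
# In a real system these values would come from a per-user calibration step.
OFFSETS = {
    ("alice", Posture(45, 0, 0)): (1.5, -4.0),
    ("alice", Posture(15, 0, 0)): (0.5, -7.0),
    ("bob", Posture(45, 0, 0)): (-1.0, -2.5),
}


def correct_touch(reported, user_id, posture, offsets=OFFSETS):
    """Subtract the calibrated offset for this user and posture.

    Falls back to the uncorrected position when no calibration entry exists.
    """
    dx, dy = offsets.get((user_id, posture), (0.0, 0.0))
    x, y = reported
    return (x - dx, y - dy)


def control_density_gain(accuracy_gain):
    """Targets shrink by accuracy_gain per axis, so density grows quadratically."""
    return accuracy_gain ** 2
```

For example, `correct_touch((10.0, 20.0), "alice", Posture(45, 0, 0))` shifts the reported point by alice's calibrated offset for that posture, while an unknown user or posture leaves the point unchanged. `control_density_gain(3.3)` yields a value just above 10, matching the figure quoted in the abstract.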

Reference

Christian Holz and Patrick Baudisch. The Generalized Perceived Input Point Model and How to Double Touch Accuracy by Extracting Fingerprints. In Proceedings of ACM CHI 2010.