Efficient Visual Appearance Optimization by Learning from Prior Preferences

ACM UIST 2025

Abstract

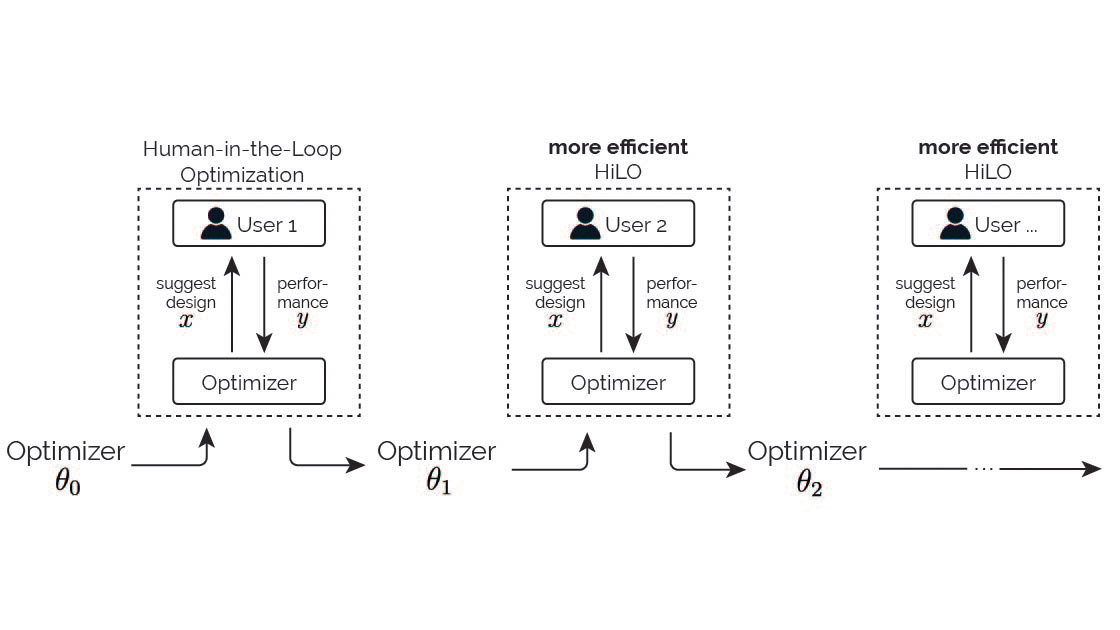

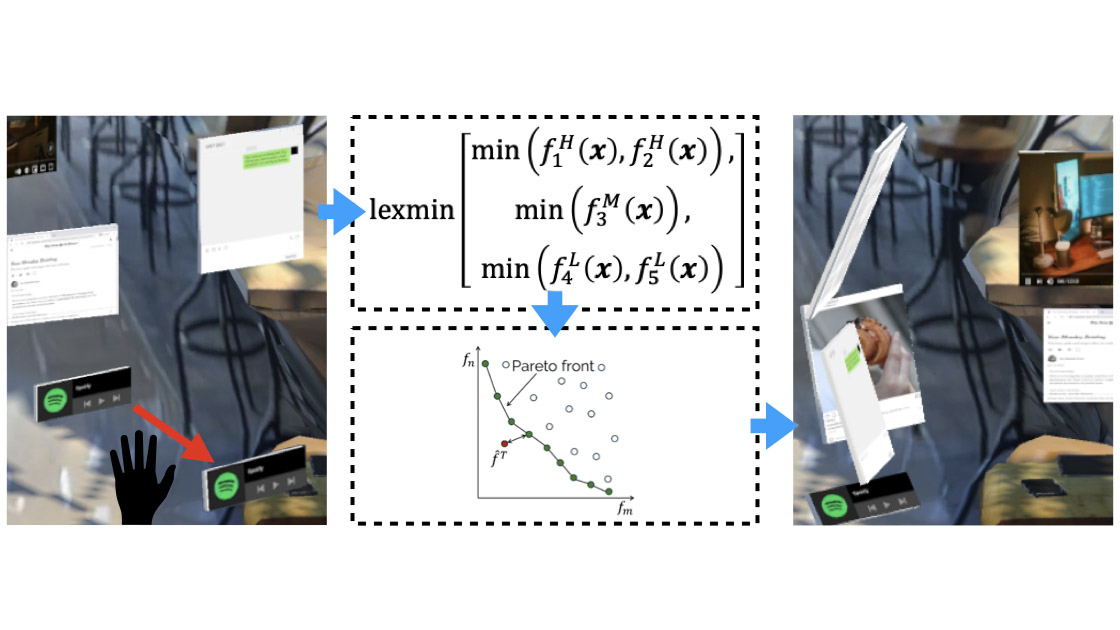

Adjusting visual parameters such as brightness and contrast is common in everyday life. Finding the optimal parameter setting is challenging because the search space is large and there is no explicit objective function, so users must rely solely on their implicit preferences. Prior work has explored Preferential Bayesian Optimization (PBO) to address this challenge, in which users iteratively select preferred designs from candidate sets. However, PBO often requires many rounds of preference comparisons, which makes it more suitable for designers than for everyday end-users. We propose Meta-PO, a novel method that integrates PBO with meta-learning to improve sample efficiency. Specifically, Meta-PO infers prior users’ preferences and stores them as models, which it uses to intelligently suggest design candidates for new users, enabling faster convergence and more personalized results. An experimental evaluation of our method on appearance design tasks for 2D and 3D content showed that participants achieved a satisfactory appearance in 5.86 iterations using Meta-PO when they shared similar goals with the population (e.g., tuning for a “warm” look), and in 8 iterations when generalizing across divergent goals (e.g., from “vintage” and “warm” to “holiday”). Through a generalizable and more efficient optimization conditioned on preferences, Meta-PO makes personalized visual optimization more practical for end-users, with the potential to scale interface personalization more broadly.

Reference

Zhipeng Li, Yi-Chi Liao, and Christian Holz. Efficient Visual Appearance Optimization by Learning from Prior Preferences. In Proceedings of ACM UIST 2025.

Working Mechanism

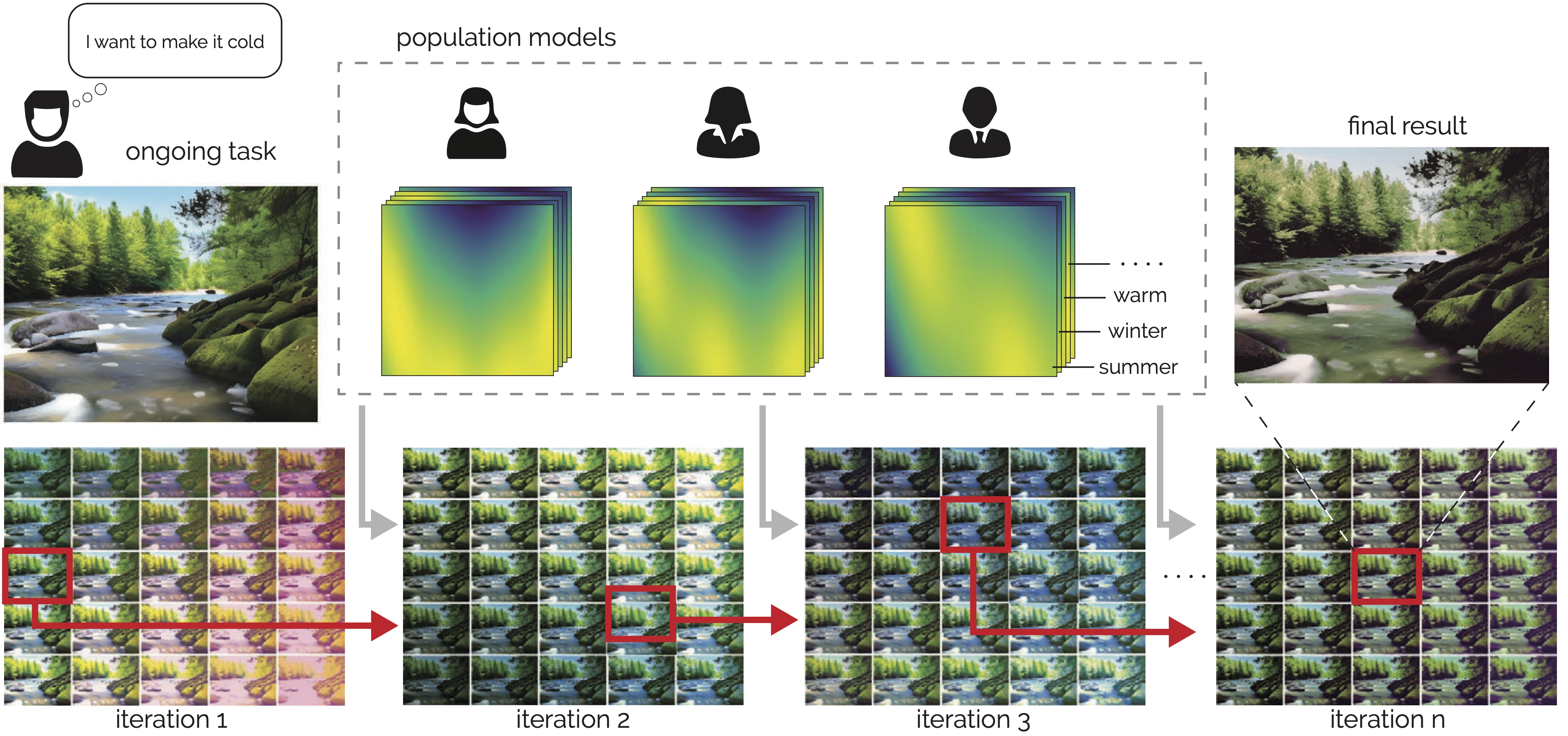

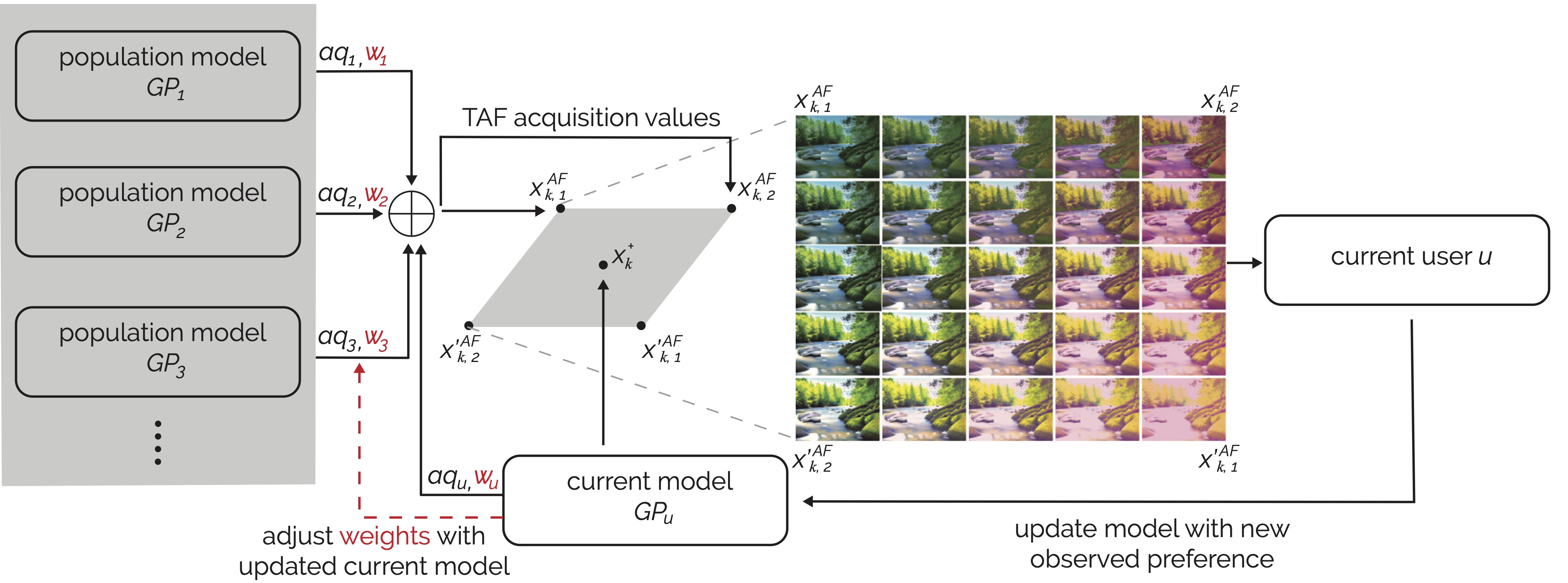

Figure 2. Meta-PO’s working mechanism during deployment. Meta-PO first stores a set of population models (shown in the gray area). During deployment, it constructs a 2D search plane per iteration using three distinct design points in a high-dimensional design space. The first point, $x_k^{+}$, is the most preferred design so far and serves as the plane’s center. The second point, $x_{k,1}^{AF}$, is selected by aggregating acquisition values from both the population models and the current model using TAF-R. The third point, $x_{k,2}^{AF}$, is derived from a two-step extension of TAF-R. Meta-PO then reflects these two points across $x_k^{+}$ to create $x_{k,1}^{\prime AF}$ and $x_{k,2}^{\prime AF}$. Jointly, these four points define the full 2D plane; Meta-PO then samples a grid of candidates within it and presents them to the user. The user’s selection updates the current model $GP_u$, which in turn allows TAF-R to adjust model weights based on the similarity between $GP_u$ and each population model $GP_i$. A new plane is constructed in the next iteration using these updated weights and new acquisition values.
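The plane construction in Figure 2 can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not Meta-PO’s implementation: the helper name `build_plane_candidates` is hypothetical, the design space is assumed to be normalized to [0, 1] per dimension, and the grid is assumed to be a 5×5 lattice (matching the 25 candidates in Figure 3) over $x_k^{+} + s\,(x_{k,1}^{AF} - x_k^{+}) + t\,(x_{k,2}^{AF} - x_k^{+})$ with $s, t \in [-1, 1]$. Under this parameterization, $s = -1$ and $t = -1$ yield exactly the reflected points $2x_k^{+} - x_{k,1}^{AF}$ and $2x_k^{+} - x_{k,2}^{AF}$.

```python
import numpy as np


def build_plane_candidates(x_best, x_af1, x_af2, n_per_axis=5):
    """Sample an n_per_axis x n_per_axis grid on the 2D plane centered at x_best.

    Hypothetical helper. The plane is parameterized as
        x_best + s * (x_af1 - x_best) + t * (x_af2 - x_best),  s, t in [-1, 1],
    so (s, t) = (1, 0) is x_af1, (-1, 0) is its reflection across x_best,
    (0, 1) is x_af2, and (0, -1) is its reflection.
    """
    x_best = np.asarray(x_best, dtype=float)
    d1 = np.asarray(x_af1, dtype=float) - x_best  # first in-plane direction
    d2 = np.asarray(x_af2, dtype=float) - x_best  # second in-plane direction
    coords = np.linspace(-1.0, 1.0, n_per_axis)
    # s varies in the outer loop, t in the inner loop: index = i_s * n + i_t.
    grid = np.array([x_best + s * d1 + t * d2 for s in coords for t in coords])
    # Assumption: parameters are normalized to [0, 1]; clip points that leave the box.
    return np.clip(grid, 0.0, 1.0)
```

With `n_per_axis=5`, the center $x_k^{+}$ lands at index 12, $x_{k,1}^{AF}$ at index 22, and its reflection at index 2. Clipping keeps grid corners inside the assumed bounds but can distort candidates near the boundary; how Meta-PO handles this case is not specified here.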

Image Enhancement Interface

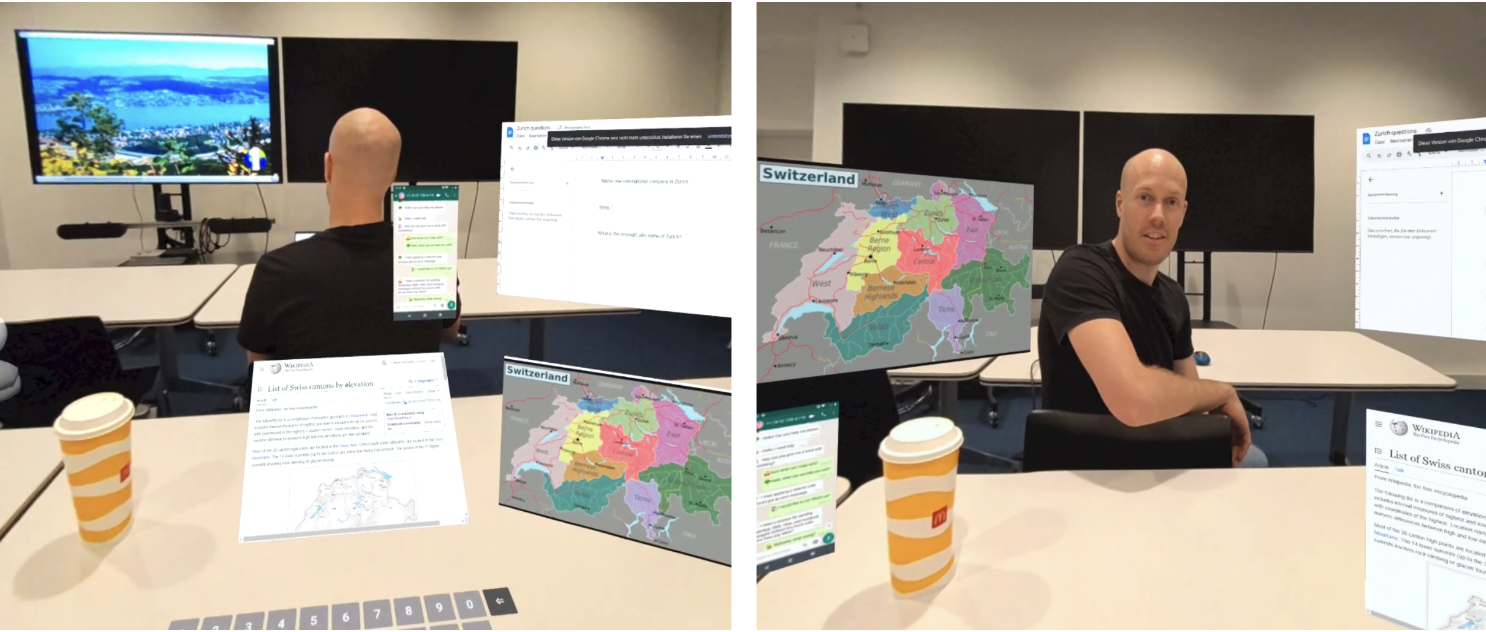

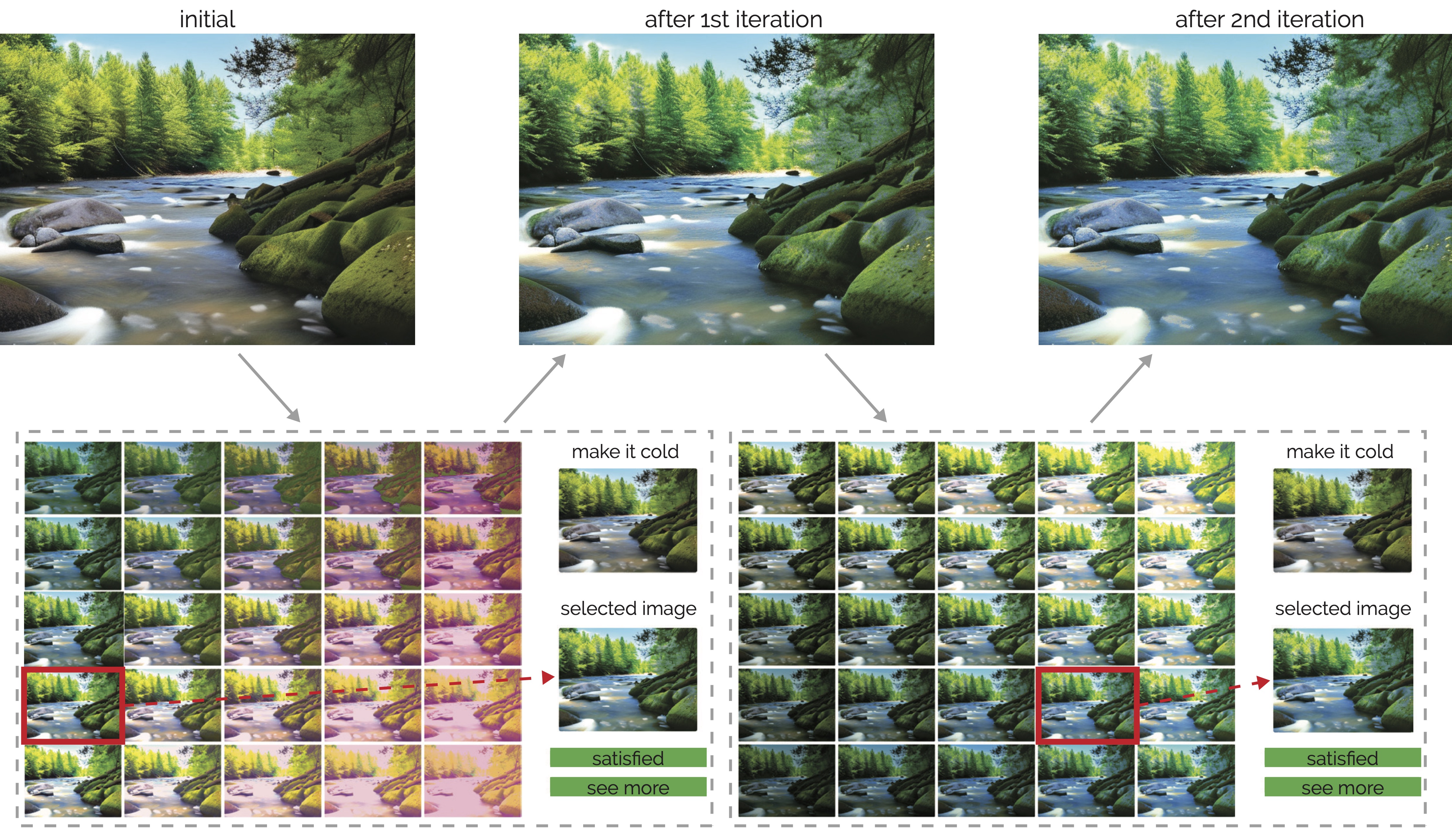

Figure 3. Users are presented with 25 candidate images and select the one that best matches their desired visual effect (the ‘theme’). Our user study interface added the theme-based instruction (top right) and buttons for indicating whether they were satisfied with the current iteration’s outcome or wanted to see additional candidates.
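Each iteration thus yields one choice among 25 candidates. The sketch below shows one plausible way such a choice could feed the TAF-R-style model weighting described in Figure 2, under assumptions not stated in this summary: the chosen image is treated as preferred over every other candidate shown, each population model $GP_i$ is represented only by its posterior mean function, a model’s weight is the fraction of those pairwise preferences its mean ranks correctly, and the current model $GP_u$ receives a fixed weight. All function names are hypothetical, and the actual TAF-R weighting in Meta-PO may differ.

```python
import numpy as np


def selection_to_pairs(chosen_idx, n_candidates):
    """Assumption: the chosen candidate is preferred over every other one shown."""
    return [(chosen_idx, j) for j in range(n_candidates) if j != chosen_idx]


def ranking_weights(pop_means, X, pairs, user_weight=1.0):
    """Weight population models by pairwise ranking agreement (simplified TAF-R idea).

    pop_means:   list of callables mapping an (n, d) array to n predicted utilities
                 (stand-ins for the posterior means of the population GPs).
    X:           (n, d) array of candidates the user has seen.
    pairs:       list of (winner_idx, loser_idx) indices into X.
    user_weight: hypothetical fixed weight for the current model GP_u.
    """
    if not pairs:
        raise ValueError("need at least one preference pair")
    scores = []
    for mean_fn in pop_means:
        mu = mean_fn(X)
        # Count the preference pairs this model orders the same way the user did.
        agree = sum(mu[win] > mu[lose] for win, lose in pairs)
        scores.append(agree / len(pairs))
    weights = np.array(scores + [user_weight], dtype=float)
    return weights / weights.sum()  # normalized; last entry belongs to GP_u


def aggregate_acquisition(weights, acq_values):
    """Weighted sum of per-model acquisition values.

    acq_values: (n_models + 1, n_points) array whose last row comes from GP_u.
    """
    return np.asarray(weights) @ np.asarray(acq_values)
```

In this sketch, a population model whose predictions contradict every recorded choice receives zero weight, so its acquisition values no longer influence which candidates are proposed next.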