Human Motion Capture from Loose and Sparse Inertial Sensors with Garment-aware Diffusion Models

IJCAI 2025

Abstract

Motion capture using sparse inertial sensors has shown great promise due to its portability and its freedom from the occlusion issues of camera-based tracking. Existing approaches typically assume that IMU sensors are tightly attached to the human body. However, this assumption often does not hold in real-world scenarios. In this paper, we present Garment Inertial Poser (GaIP), a method for estimating full-body poses from sparse and loosely attached IMU sensors. We first simulate IMU recordings using an existing garment-aware human motion dataset. Our transformer-based diffusion models then synthesize loose IMU data and estimate human poses from this challenging input. We also demonstrate that incorporating garment-related parameters during training on loose IMU data maintains expressiveness and enhances the model's ability to capture variations introduced by looser or tighter garments. Our experiments show that our diffusion methods, trained on simulated and synthetic data, outperform state-of-the-art inertial full-body pose estimators both quantitatively and qualitatively, opening up a promising direction for future research on motion capture from such realistic sensor placements.
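The abstract mentions simulating IMU recordings from motion data. A common construction in prior inertial-poser work is to attach a virtual sensor to a mesh vertex and obtain its acceleration by a second-order finite difference of the vertex trajectory. The sketch below shows only that step, as an assumption about how such simulation can work rather than GaIP's exact procedure. The function name, the idea of sampling one vertex on the body and one on the garment to get a tight/loose pair, and the omission of gravity and sensor-frame rotation are all illustrative choices.

```python
import numpy as np


def simulate_acceleration(positions: np.ndarray, fps: float) -> np.ndarray:
    """Virtual-accelerometer readings from sensor trajectories (illustrative sketch).

    positions: (T, S, 3) world-space positions of S virtual sensors over T frames
    (hypothetically, mesh vertices on the body or on the garment).
    Returns (T, S, 3) accelerations; the first and last frames stay zero because
    the central difference needs a neighbour on both sides.
    Gravity and rotation into the sensor frame are deliberately omitted here.
    """
    dt = 1.0 / fps
    acc = np.zeros(positions.shape, dtype=float)
    # Central second difference: a[t] = (p[t-1] - 2 p[t] + p[t+1]) / dt^2
    acc[1:-1] = (positions[:-2] - 2.0 * positions[1:-1] + positions[2:]) / dt**2
    return acc


# Usage: one sensor under constant acceleration (1, 2, 3) m/s^2, sampled at 60 fps.
fps, T = 60.0, 10
t = np.arange(T) / fps
a_true = np.array([1.0, 2.0, 3.0])
traj = 0.5 * a_true * t[:, None, None] ** 2  # shape (T, 1, 3)
acc = simulate_acceleration(traj, fps)
```

For a trajectory with constant acceleration, the interior frames recover that acceleration exactly (up to floating-point error), which makes this a convenient sanity check before applying it to garment or body vertices.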


Reference

Andjela Ilic, Jiaxi Jiang, Paul Streli, Xintong Liu, and Christian Holz. Human Motion Capture from Loose and Sparse Inertial Sensors with Garment-aware Diffusion Models. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI), 2025.

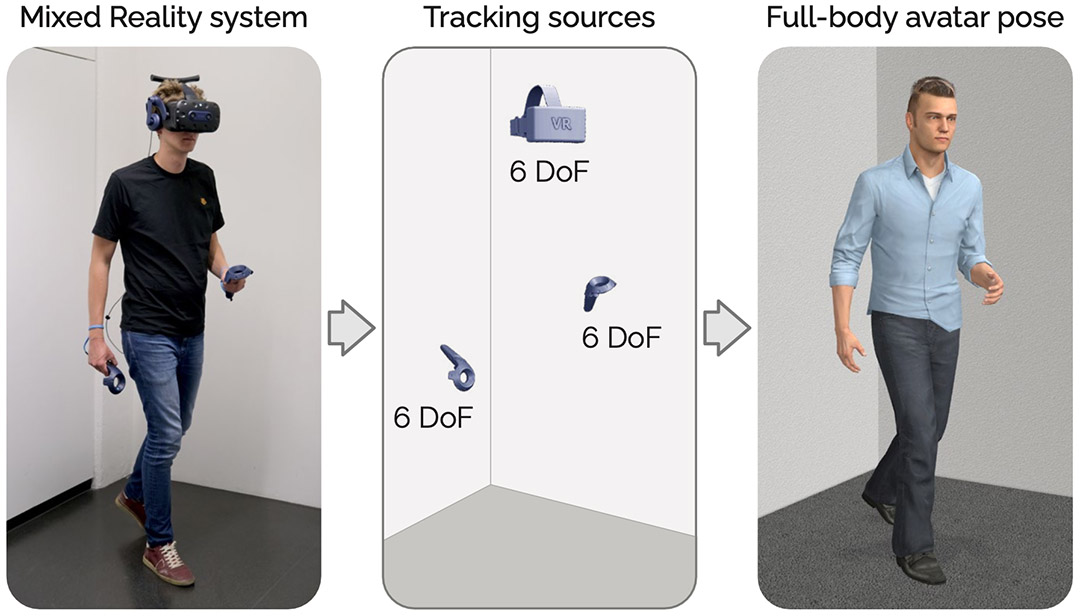

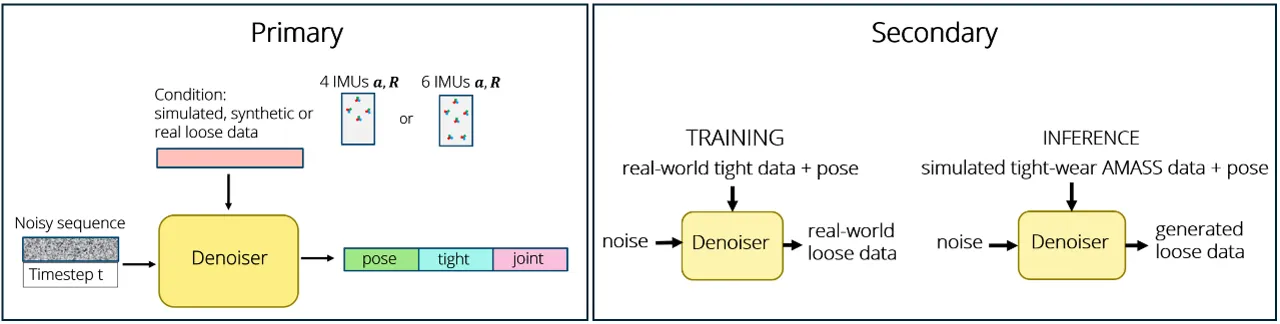

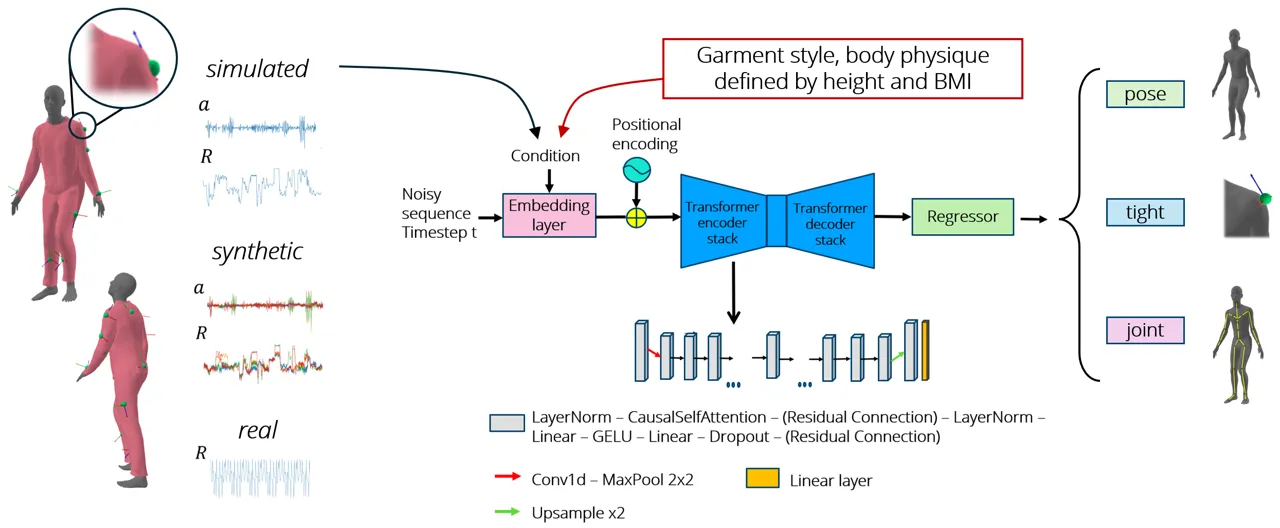

Diffusion Framework

Figure 2: Our diffusion framework consists of two models: a primary model for pose estimation and a secondary model for generating loose-wear IMU data. From loose-wear IMU input, the primary model predicts SMPL joint rotations, joint positions, and tight-wear IMU signals. The secondary model, trained on real-world data, generates loosely attached IMU signals conditioned on tightly attached IMU signals and the corresponding pose. At inference time, we use the secondary model to generate loose-wear IMU signals for AMASS poses, given the poses and their simulated tight-wear IMU signals.
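To make the role of the secondary model concrete, here is a minimal sketch of generating loose-wear IMU signals with standard DDPM ancestral sampling (Ho et al., 2020), conditioned on per-frame tight-wear IMU signals and pose. Several details are assumptions not stated on this page: the denoiser predicts noise (epsilon) rather than the clean signal, the noise schedule is linear, conditioning is a simple per-frame concatenation, and the loose signal has the same dimensionality as the tight one. `synthesize_loose_imu` and `dummy_denoiser` are hypothetical names; the placeholder denoiser stands in for the trained transformer.

```python
import numpy as np


def ddpm_sample(denoiser, cond, shape, num_steps=50, rng=None):
    """Ancestral DDPM sampling with an epsilon-predicting denoiser (assumed).

    denoiser(x_t, t, cond) -> predicted noise with the same shape as x_t.
    Uses a linear beta schedule and the sigma_t^2 = beta_t variance choice.
    """
    rng = np.random.default_rng() if rng is None else rng
    betas = np.linspace(1e-4, 0.02, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(shape)  # start from pure Gaussian noise x_T
    for t in reversed(range(num_steps)):
        eps = denoiser(x, t, cond)
        # Posterior mean of x_{t-1} given x_t and the predicted noise.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        noise = rng.standard_normal(shape) if t > 0 else 0.0  # no noise at the last step
        x = mean + np.sqrt(betas[t]) * noise
    return x


def synthesize_loose_imu(secondary_denoiser, tight_imu, pose, rng=None):
    """Hypothetical data-generation step: loose IMU conditioned on tight IMU + pose.

    tight_imu: (T, D_imu), pose: (T, D_pose). Assumes the generated loose signal
    has the same per-frame dimensionality as the tight one.
    """
    cond = np.concatenate([tight_imu, pose], axis=1)  # per-frame conditioning
    return ddpm_sample(secondary_denoiser, cond, tight_imu.shape, rng=rng)


# Usage with a placeholder denoiser and illustrative feature sizes.
def dummy_denoiser(x_t, t, cond):
    return np.zeros_like(x_t)


T, D_imu, D_pose = 120, 72, 132
tight = np.zeros((T, D_imu))
pose = np.zeros((T, D_pose))
loose_a = synthesize_loose_imu(dummy_denoiser, tight, pose, rng=np.random.default_rng(0))
loose_b = synthesize_loose_imu(dummy_denoiser, tight, pose, rng=np.random.default_rng(0))
```

Running the sampler over every AMASS clip would turn (pose, simulated tight IMU) pairs into synthetic (pose, loose IMU) training pairs for the primary model; seeding the generator makes such a synthetic dataset reproducible.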

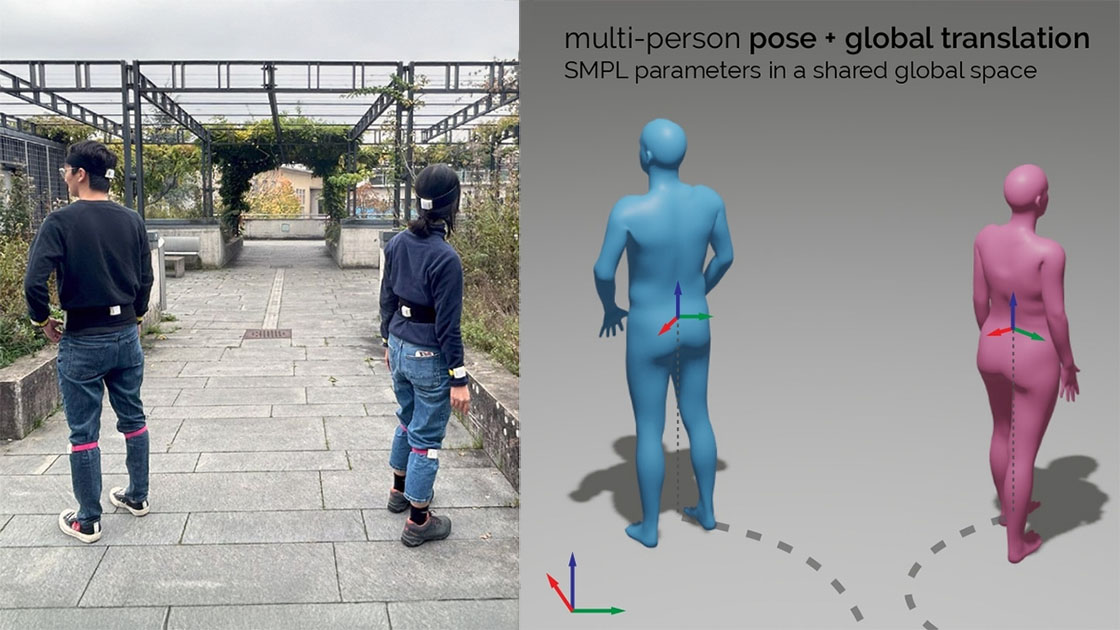

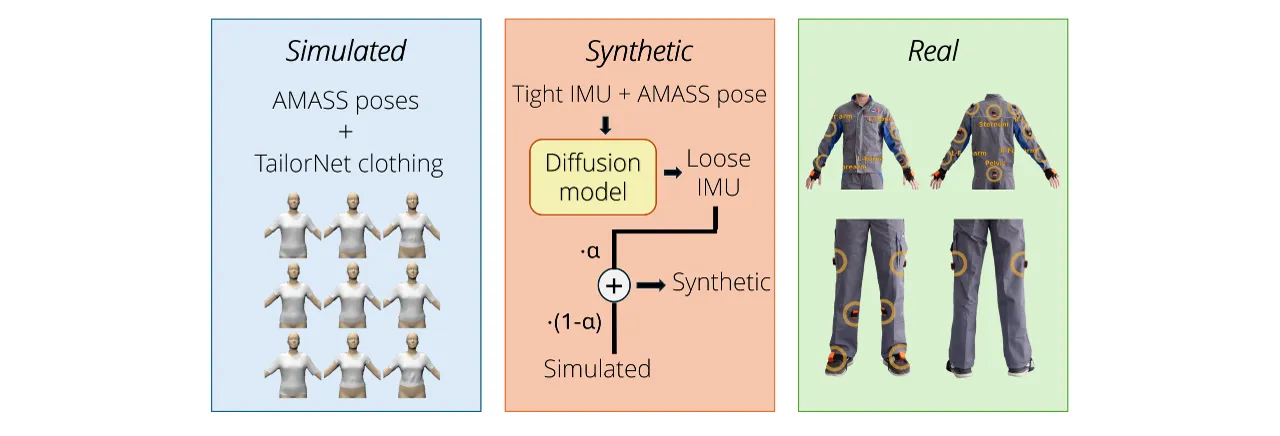

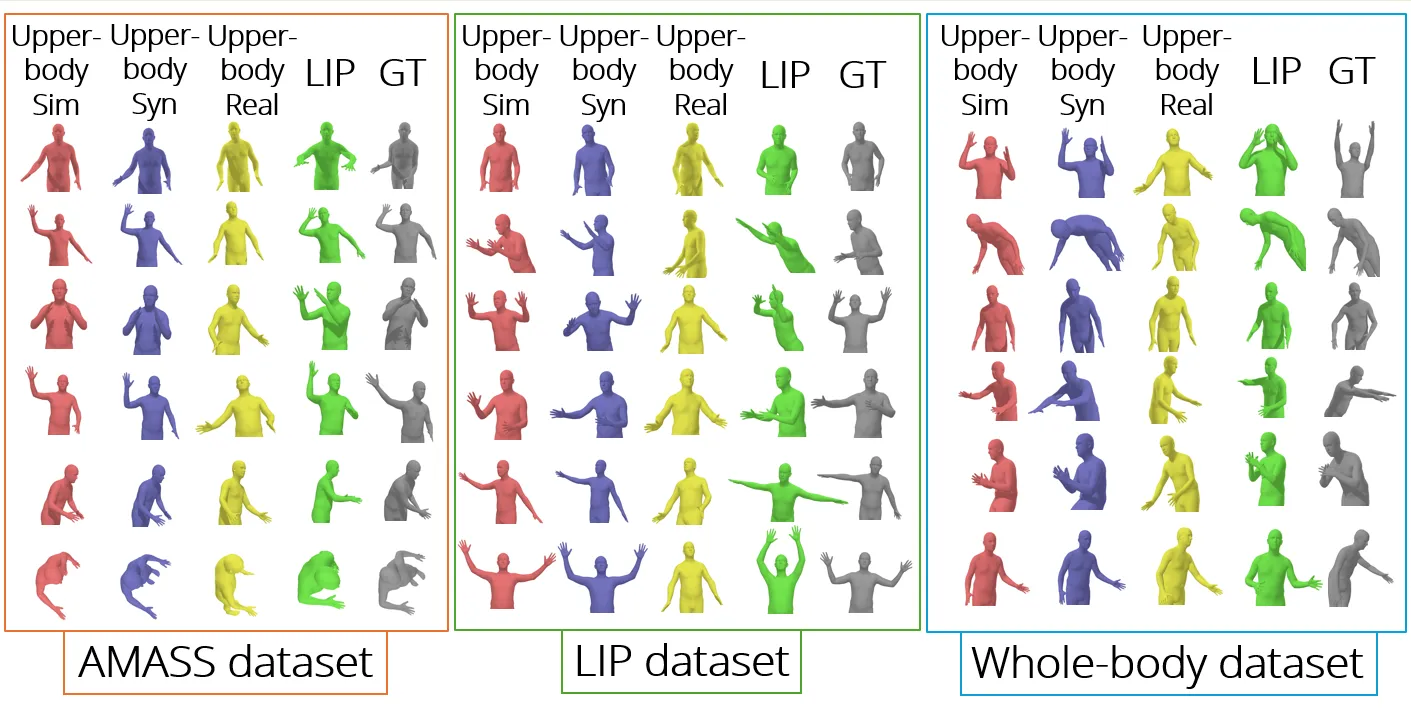

Training data

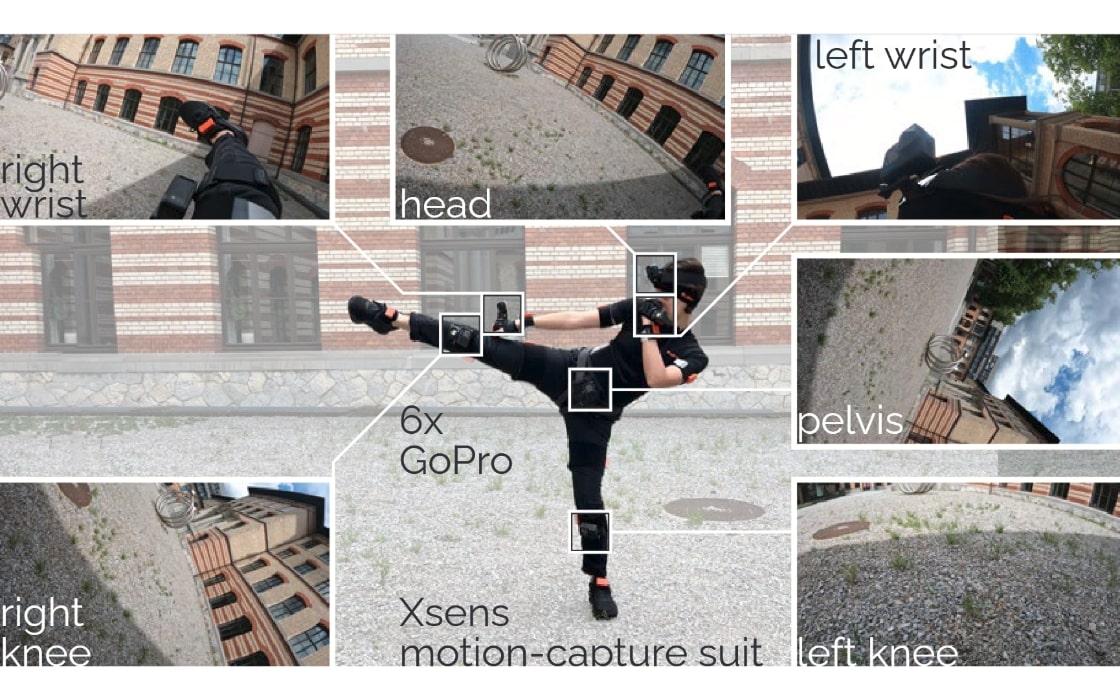

Figure 3: This task suffers from data scarcity. Real-world datasets are rare, limited in size, or do not always cover the whole body, which makes them insufficient for effective training on their own. To address this, we train our GaIP model on three types of datasets: simulated, synthetic, and real.

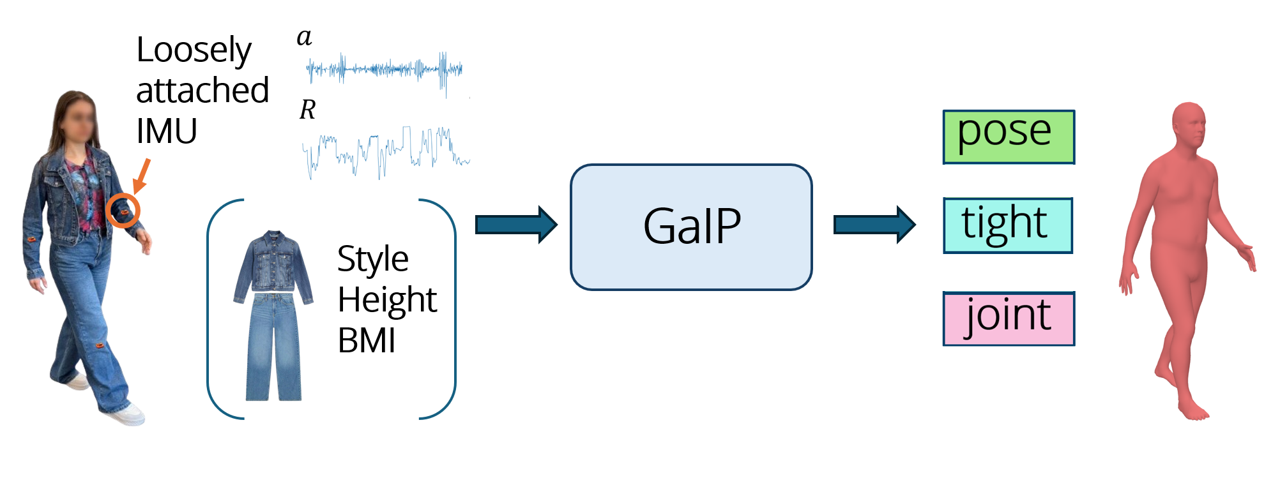

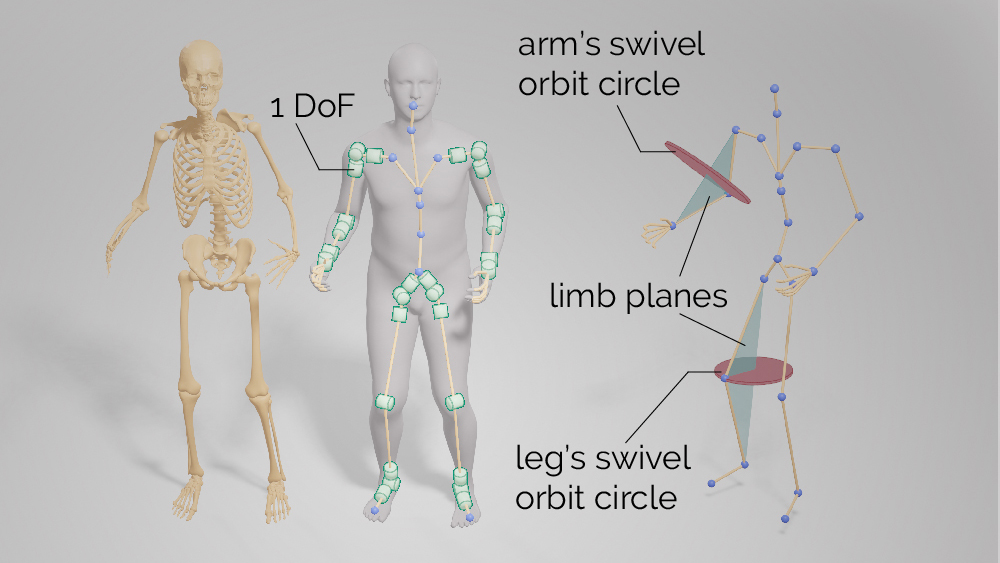

Method

Figure 4: GaIP takes as input loose-wear IMU recordings of acceleration and rotation, either alone or enriched with garment-related parameters (style, height, and body mass index). From this input, GaIP estimates the body pose, joint positions, and tightly attached IMU signals. Architecturally, we employ a transformer-based denoiser within the DDPM framework.
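The following sketch illustrates how the inputs in Figure 4 could feed one DDPM training step: the loose-wear IMU features form the per-frame condition, optionally extended with garment parameters, and the target stacks joint rotations, joint positions, and tight-wear IMU signals. It is not GaIP's implementation. The one-hot style encoding, the number of styles, the raw height and BMI scalars, the epsilon-prediction loss, and all feature sizes are assumptions, and the lambda denoiser is a placeholder for the transformer.

```python
import numpy as np


def build_condition(loose_imu, style_id=None, height_m=None, bmi=None, num_styles=4):
    """Per-frame conditioning for the pose denoiser (hypothetical encoding).

    loose_imu: (T, D_imu) loose-wear acceleration + rotation features.
    If garment parameters are given, a one-hot style code plus height and BMI
    are broadcast to every frame and appended along the feature axis.
    """
    if style_id is None:
        return loose_imu
    garment = np.zeros(num_styles + 2)
    garment[style_id] = 1.0
    garment[num_styles:] = (height_m, bmi)
    tiled = np.broadcast_to(garment, (loose_imu.shape[0], garment.size))
    return np.concatenate([loose_imu, tiled], axis=1)


def q_sample(x0, t, alpha_bars, noise):
    """Forward diffusion: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise


def training_loss(denoiser, x0, cond, alpha_bars, rng):
    """One DDPM training step with the simple epsilon-prediction MSE (assumed).

    x0 stacks the per-frame targets, e.g. joint rotations, joint positions and
    tight-wear IMU signals concatenated along the feature axis.
    """
    t = int(rng.integers(len(alpha_bars)))  # random diffusion step
    noise = rng.standard_normal(x0.shape)
    x_t = q_sample(x0, t, alpha_bars, noise)
    pred = denoiser(x_t, t, cond)
    return float(np.mean((pred - noise) ** 2))


# Usage with illustrative sizes and a placeholder denoiser.
rng = np.random.default_rng(0)
alpha_bars = np.cumprod(1.0 - np.linspace(1e-4, 0.02, 100))
loose = rng.standard_normal((60, 72))
cond_plain = build_condition(loose)
cond_garment = build_condition(loose, style_id=2, height_m=1.75, bmi=22.5)
x0 = rng.standard_normal((60, 132 + 66 + 72))  # rotations + positions + tight IMU
loss = training_loss(lambda x_t, t, c: np.zeros_like(x_t), x0, cond_garment, alpha_bars, rng)
```

Keeping the garment parameters as an optional suffix of the condition lets the same denoiser interface serve both input variants described in Figure 4; in practice the scalars would likely be normalized before use.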

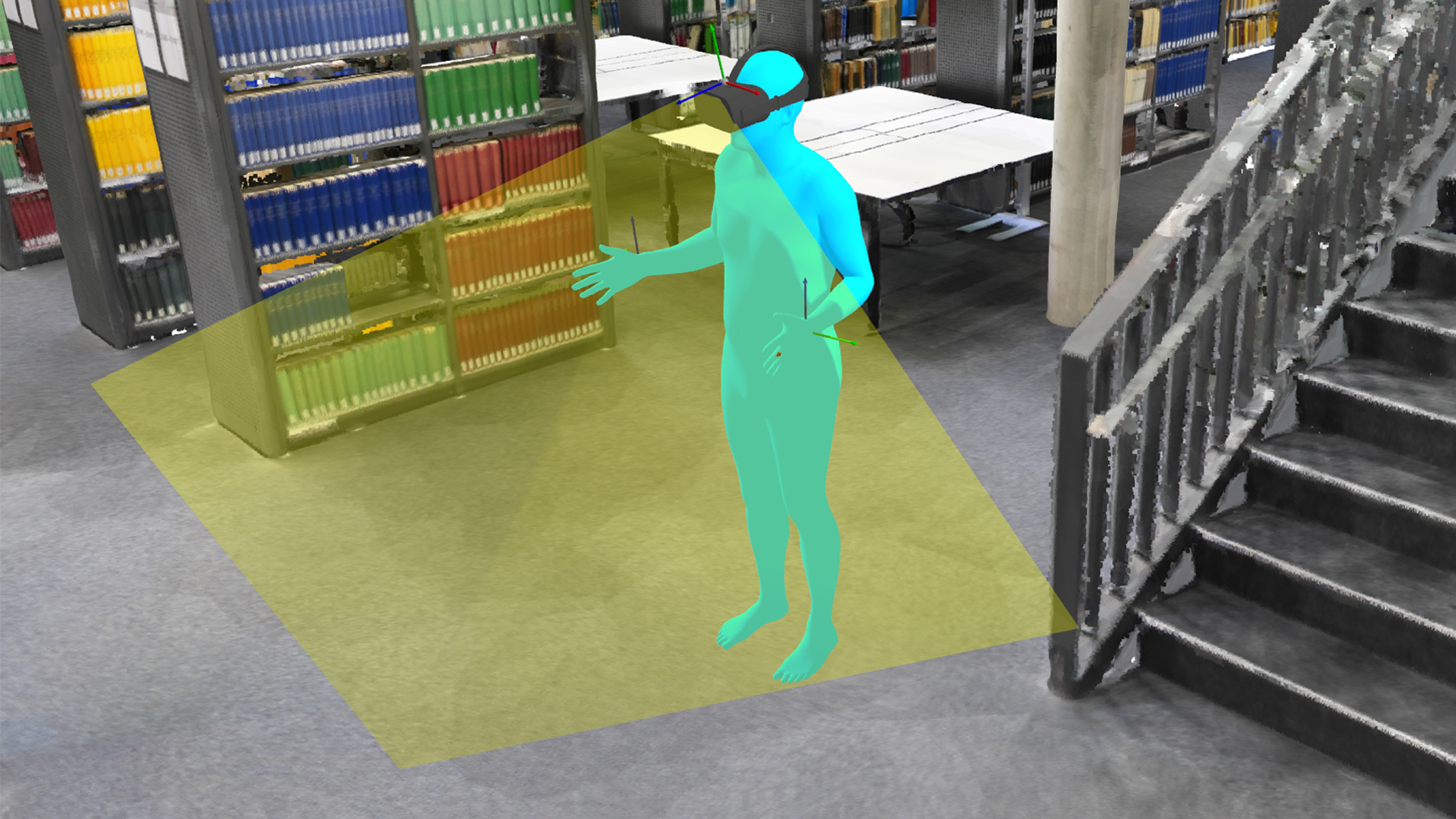

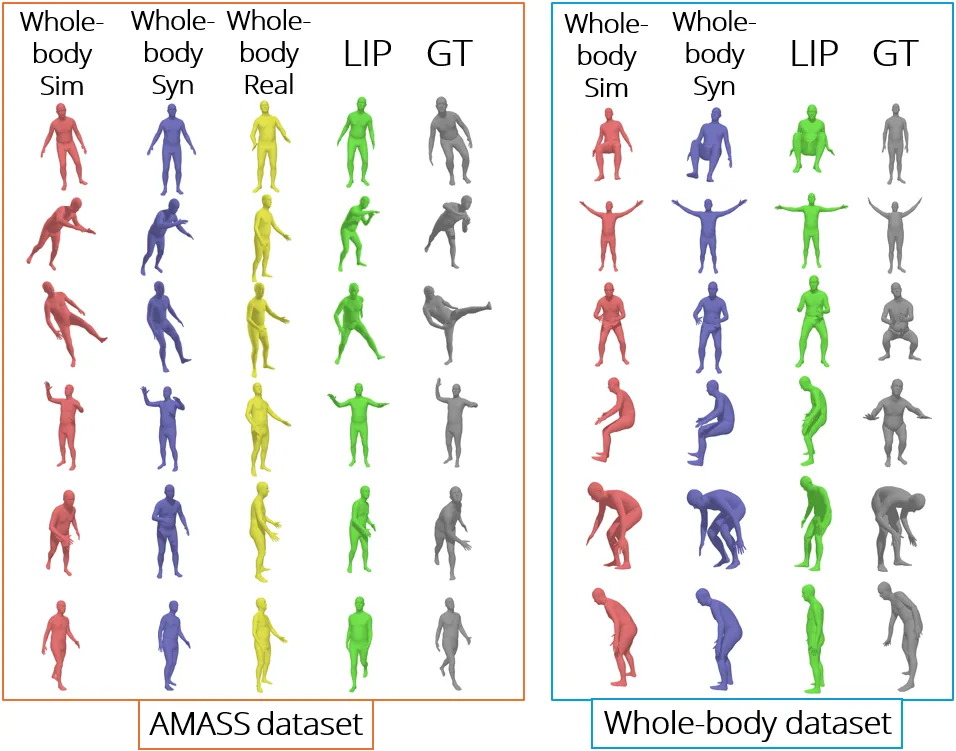

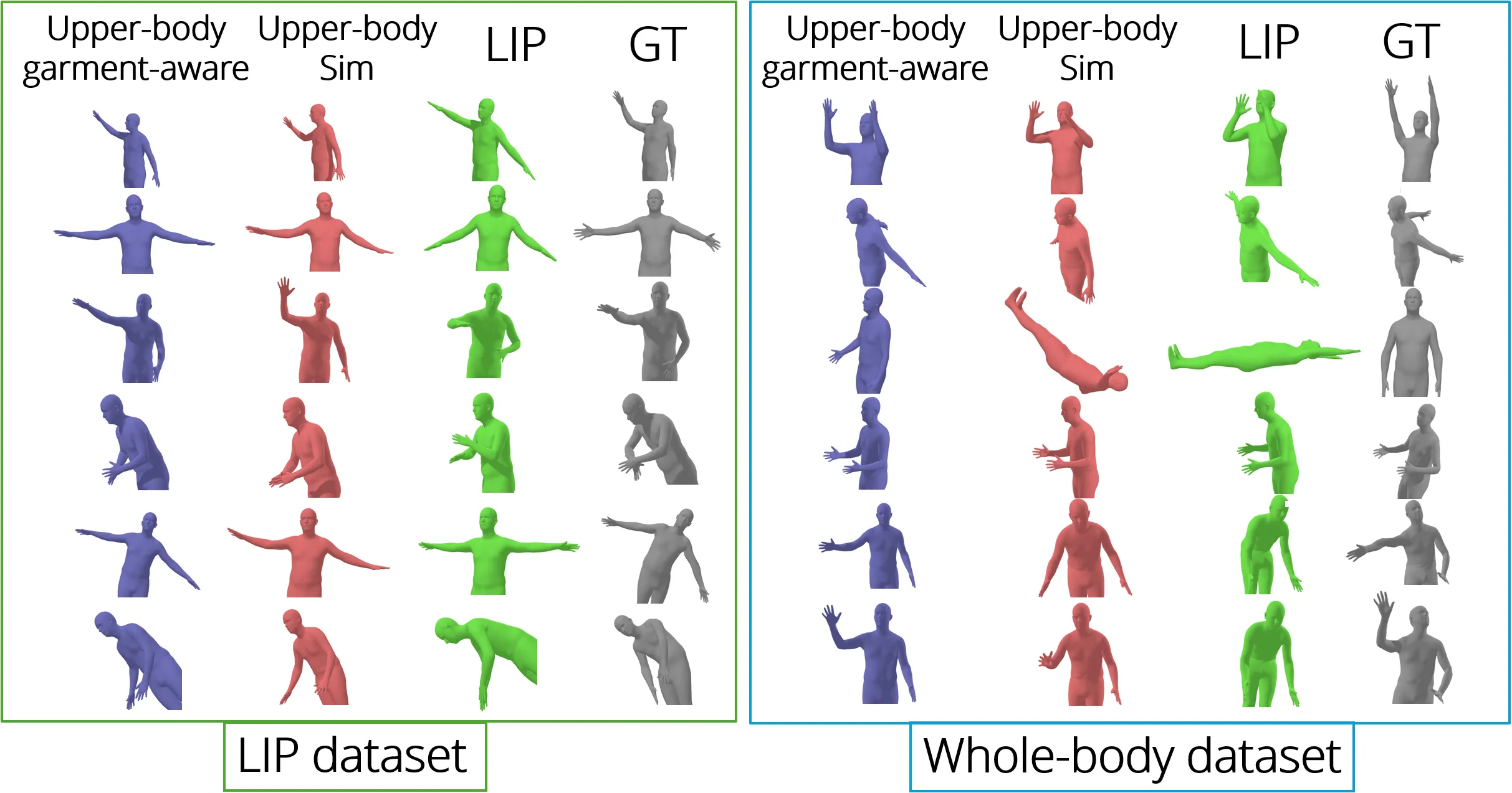

Results

Figure 5: Qualitative results of the upper-body models conditioned on IMU data on 1) the simulated AMASS dataset, 2) the real-world upper-body LIP dataset, and 3) the real-world whole-body dataset.

Figure 6: Qualitative results of the full-body models conditioned on IMU data on 1) the simulated AMASS dataset and 2) the real-world whole-body dataset.

Figure 7: Qualitative results of the upper-body model conditioned on IMU data and garment-related parameters on 1) the real-world upper-body LIP dataset and 2) the real-world whole-body dataset.